...making Linux just a little more fun!

October 2010 (#179):

- Mailbag

- 2-Cent Tips

- Talkback

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Henry's Techno-Musings: User Interfaces, by Henry Grebler

- Away Mission - PayPal Innovate, by Howard Dyckoff

- A Nightmare on Tape Drive, by Henry Grebler

- Making Your Network Transparent, by Ben Okopnik

- Time Management for System Administrators, by René Pfeiffer

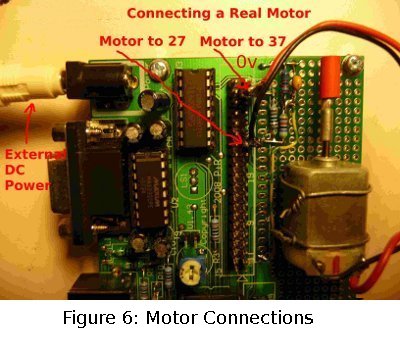

- Controlling DC Motors from your Linux Box, by P. J. Radcliffe

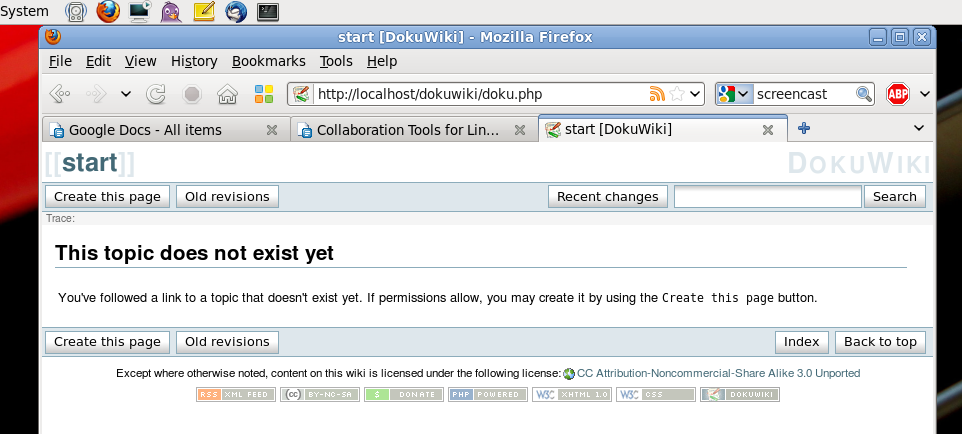

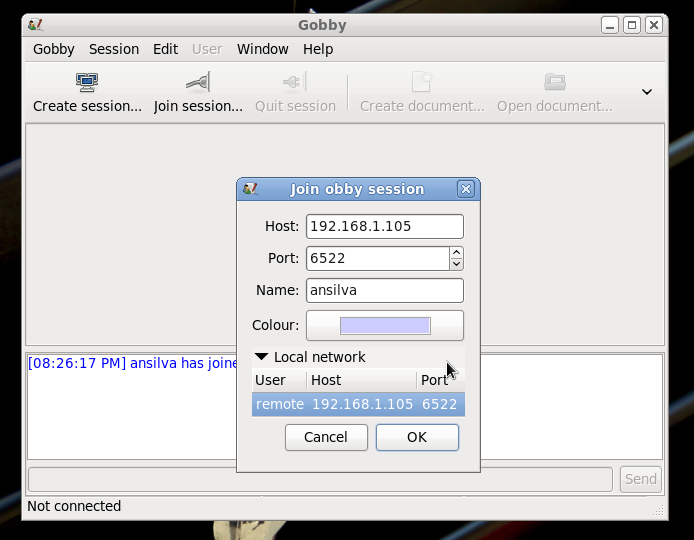

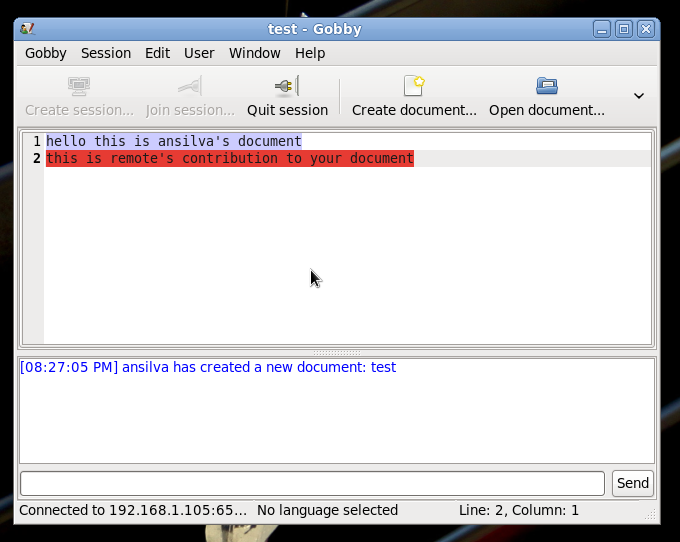

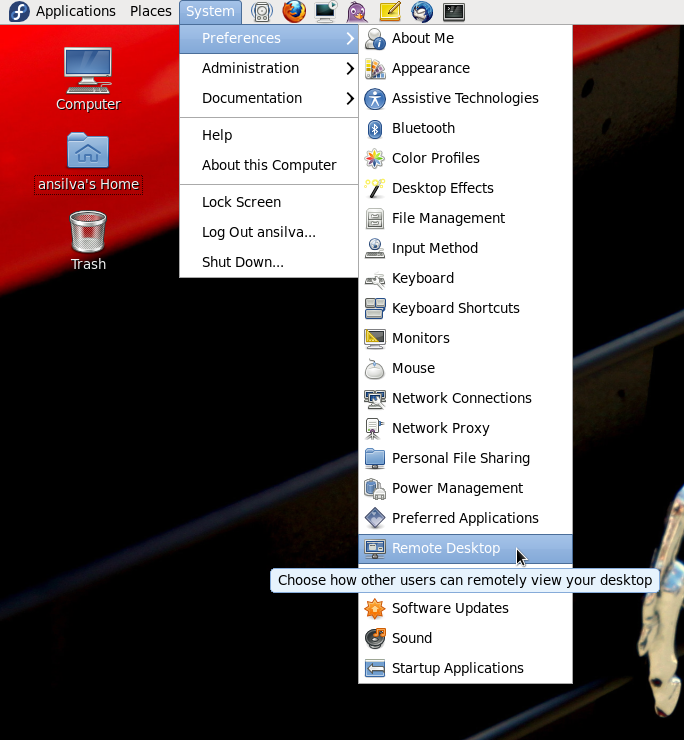

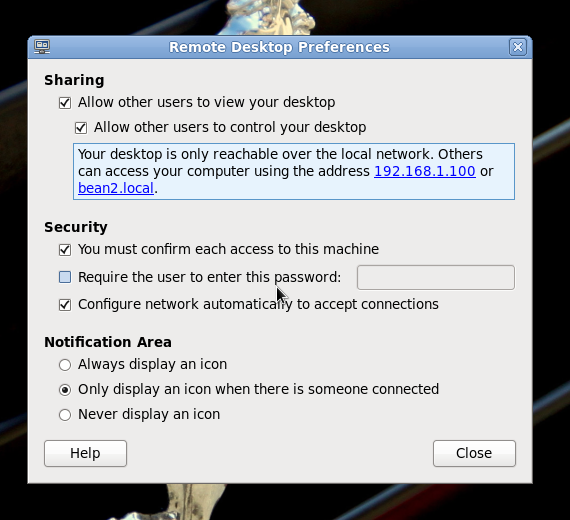

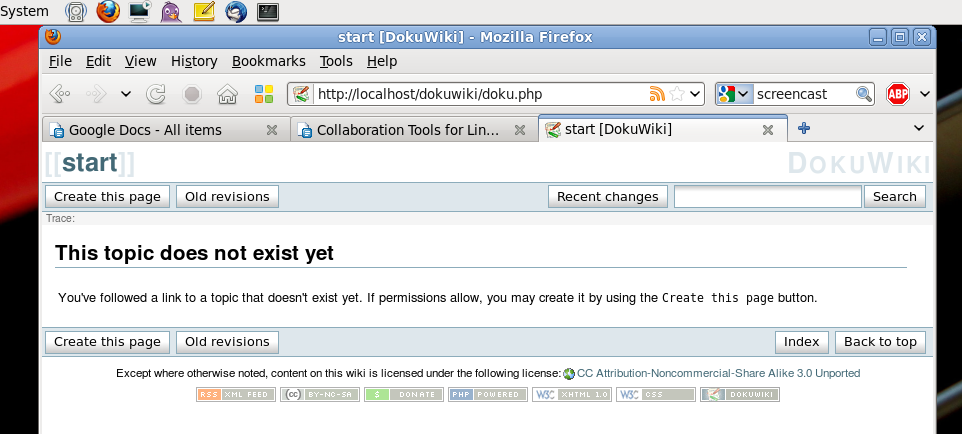

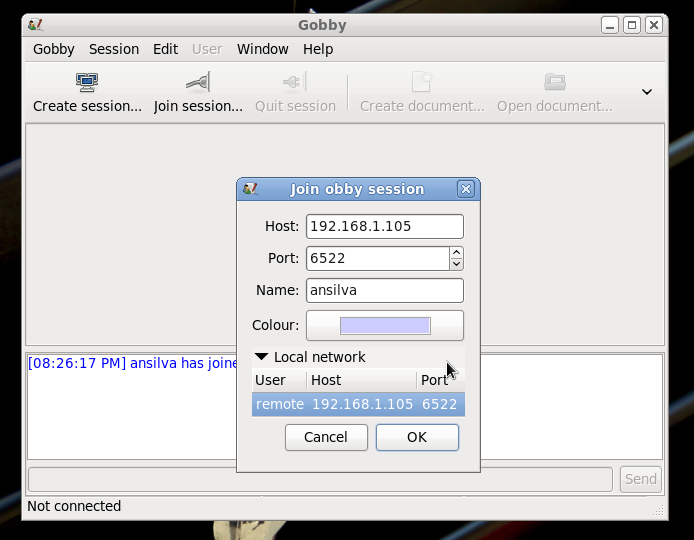

- Collaboration Tools for Linux, by Anderson Silva

- HelpDex, by Shane Collinge

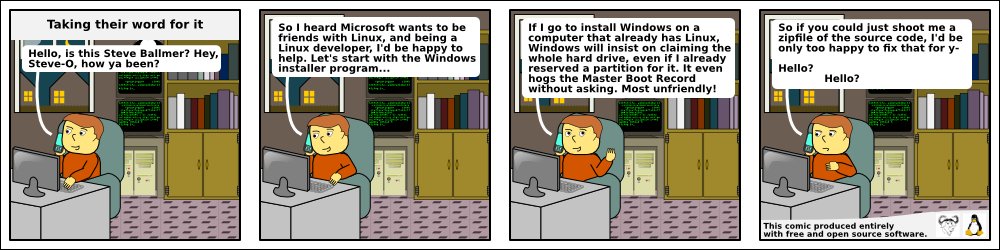

- Doomed to Obscurity, by Pete Trbovich

- Ecol, by Javier Malonda

- XKCD, by Randall Munroe

- Almost-but-not-quite Linux..., by Ben Okopnik

- The Linux Launderette

Mailbag

This month's answers created by:

[ Anderson Silva, Ben Okopnik, S. Parthasarathy, Joey Prestia, Kapil Hari Paranjape, René Pfeiffer, Mulyadi Santosa, Kiniti Patrick ]

...and you, our readers!

Our Mailbag

Image size vs. file size

Ben Okopnik [ben at linuxgazette.net]

Tue, 28 Sep 2010 20:04:15 -0400

I've always been curious about the huge disparity in file sizes between

certain images, especially when they have - oh, more or less similar

content (to my perhaps uneducated eye.) E.g., I've got a large list of

files on a client's site where I have to find some path between a

good average image size (the pic that pops up when you click the

thumbnail) and a reasonable file size (something that won't crash

PHP/GD - a 2MB file brings things right to a halt.)

Here's the annoying thing, though:

ben at Jotunheim:/tmp$ ls -l allegro90_1_1.jpg bahama20__1.jpg; identify allegro90_1_1.jpg bahama20__1.jpg

-rwxr-xr-x 1 ben ben 43004 2010-09-28 19:43 allegro90_1_1.jpg

-rwxr-xr-x 1 ben ben 1725638 2010-09-28 14:37 bahama20__1.jpg

allegro90_1_1.jpg JPEG 784x1702 784x1702+0+0 8-bit DirectClass 42kb

bahama20__1.jpg[1] JPEG 2240x1680 2240x1680+0+0 8-bit DirectClass 1.646mb

The first image, which is nearly big enough to cover my entire screen,

is 42k; the second one, while admittedly about 3X bigger in one

dimension, is 1.6MB+, over 40 times the file size. Say *what*?

And it's not like the complexity of the content is all that different;

in fact, visually, the first one is more complex than the second

(although I'm sure I'm judging it by the wrong parameters. Obviously.)

Take a look at them, if you want:

http://okopnik.com/images/allegro90_1_1.jpg

http://okopnik.com/images/bahama20__1.jpg

So... what makes an image - seemingly of the same type, according to

what "identify" is reporting - that much bigger? Does anybody here know?

And is there any way to make the file sizes closer without losing a

significant amount of visual content?

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (22 messages/37.41kB) ]

Tesseract 3.00 Released

Jimmy O'Regan [joregan at gmail.com]

Fri, 1 Oct 2010 03:33:55 +0100

Tesseract, the Open Source OCR engine originally created at

Hewlett-Packard and now developed at Google, has released a new

version.

Tesseract release notes Sep 30 2010 - V3.00

?* Preparations for thread safety:

? ? * Changed TessBaseAPI methods to be non-static

? ? * Created a class hierarchy for the directories to hold instance data,

? ? ? and began moving code into the classes.

? ? * Moved thresholding code to a separate class.

?* Added major new page layout analysis module.

?* Added HOCR output.

?* Added Leptonica as main image I/O and handling. Currently optional,

? ?but in future releases linking with Leptonica will be mandatory.

?* Ambiguity table rewritten to allow definite replacements in place

? ?of fix_quotes.

?* Added TessdataManager to combine data files into a single file.

?* Some dead code deleted.

?* VC++6 no longer supported. It can't cope with the use of templates.

?* Many more languages added.

?* Doxygenation of most of the function header comments.

As well as a number of new languages, bugfixes, and man pages.

Languages supported are:

Bulgarian, Catalan, Czech, Chinese Simplified, Chinese Traditional,

Danish, Danish (Fraktur), German, Greek, English, Finnish, French,

Hungarian, Indonesian, Italian, Japanese, Korean, Latvian, Lithuanian,

Dutch, Norwegian, Polish, Portuguese, Romanian, Russian, Slovakian,

Slovenian, Spanish, Serbian, Swedish, Tagalog, Thai, Turkish,

Ukrainian, Vietnamese

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

LG hosting

Ben Okopnik [ben at linuxgazette.net]

Mon, 20 Sep 2010 17:26:36 -0400

Hello, Gang -

After a number of years of providing hosting services for LG (and a

number of others), our old friend T. R. is, sadly, shutting down his

servers. Whatever his plans for the future may be, he has my best wishes

and the utmost in gratitude for all those great years; if there was such

a thing as a "Best Friends and Supporters of LG" list, he'd be right at

the top.

(T.R. - if we happen to be in the same proximity, the beer's on me. Yes,

even the realy good stuff.)

I've arranged for space on another host, moved the site over to it, and

have just finished all the configuration and alpha testing. Please check

out LG in its new digs (at the same URL, obviously), and let me know if

you find any problems or anything missing.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (9 messages/13.03kB) ]

GPG secret key

Prof. Parthasarathy S [drpartha at gmail.com]

Wed, 22 Sep 2010 10:38:22 +0530

Is there a neat way to export and save my GPG/PGP secret key (private

key) on a USB stick ?

I have to do this, since I am often changing my machines (most of which

are given on loan by my employers). The secret key goes away with the

machine, and I am obliged to generate a new key pair each time. I did a

Google search and did not succeed.

I know I can save/export my public key, but GPG/PGP refuse to let me use

a copy of my secret key.

Any hint, or pointers would be gratefully appreciated.

Many thanks,

partha

--

-------------------------------------------------------------------

Dr. S. Parthasarathy | mailto: drpartha at gmail.com

Algologic Research & Solutions |

78 Sancharpuri Colony, Bowenpally P.O.| Phone: + 91 - 40 - 2775 1650

Secunderabad 500 011 - INDIA |

WWW-URL: http://algolog.tripod.com/nupartha.htm

My personal news bulletins (blogs) ::

http://www.freewebs.com/profpartha/myblogs.htm

-------------------------------------------------------------------

[ Thread continues here (3 messages/2.96kB) ]

wanna learn about hard disk internals the virtual way?

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Sat, 4 Sep 2010 22:54:21 +0700

Sometimes, people are scared to get a screw driver and check what's

inside the hard drive. Or maybe simply because we're too lazy to read

manuals.

So, what's the alternative? How about a simple flash based tutorial?

http://www.drivesaversdatarecovery.com/e[...]-first-online-hard-disk-drive-simulator/

It's geared toward disaster recovery, but in my opinion it's still

valuable for anyone who would like to see how the hardware works.

PS: Thanks to PC Magazine which tells a short intro about the company:

http://www.pcmag.com/article2/0,2817,2361120,00.asp

--

regards,

Mulyadi Santosa

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (5 messages/9.55kB) ]

Brother, can you spare a function?

Ben Okopnik [ben at linuxgazette.net]

Fri, 17 Sep 2010 09:14:23 -0400

I don't know why this kind of thing keeps coming up. I never wanted to be

a mathematician, I'm just a simple programmer!

(One of these days, I'm going to sail over to an uninhabited island and

stay there for six months or so, studying math. This is just

embarassing; any time a problem like this comes up, I feel so stupid.)

I've been losing a lot of weight lately, and wanted to plot it on a

chart. However, I've only been keeping very sparse records of the

change, so what I need to do is interpolate it. In other words, given a

list like this:

6/26/2010 334

8/12/2010 311.8

8/19/2010 308.4

9/5/2010 300.0

9/9/2010 298.6

9/14/2010 297.2

9/16/2010 293.6

I need to come up with a "slope" function that will return my weight at

any point between 6/26 and 9/16. The time end of it is no problem - I

just convert the dates into Unix "epoch" values (seconds since

1970-01-01 00:00:00 UTC) - but the mechanism that I've got for figuring

out the weight at a given time is hopelessly crude: I split the total

time span into X intervals, then find the data points preceding and

following it, and calculate the "slope" between them, then laboriously

figure out the value for that point. What I'd really, really like to

have is a function that takes the above list and returns the weight

value for any given point in time between Tmin and Tmax; I'm sure that

it's a standard mathematical function, but I don't know how to implement

it.

Can any of you smart folks help? I'd appreciate it.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (24 messages/45.54kB) ]

Current Issue calculation

Joey Prestia [joey at linuxamd.com]

Mon, 06 Sep 2010 20:34:43 -0700

Hi Tag,

I am trying to rework a script that currently uses an external file to

keep track of what issue the Linux Gazette is on. I would like to do

this with out relying on an external file (feels cleaner that way) and

just calculate this from within the script using maybe the month and

year from localtime(time) from within Perl. Using the month and year I

thought this would be an easy task but it turns out its more difficult

than I thought. I will probably need some formula to do It to since I

will be running it from cron. Can you make any suggestions on how I

might attempt this? I have tried to figure a constant that I could use

to get it to come out correct with no luck. What works for one year

fails when the year changes when you add the month to the year.

# Get Issue

my (@date,$month,$year,$issue);

@date = localtime(time);

$month=($date[4])+1;

$year=($date[5])+1900;

$issue= $year - 1841 + $month ;

print "Month = $month Year = $year Issue = $issue\n";

Joey

[ Thread continues here (5 messages/6.26kB) ]

Installing Linux Modules

Kiniti Patrick [pkiniti at techmaxkenya.com]

Sun, 26 Sep 2010 07:29:53 -0400

Hi Gang,

I have a question on how to go about installing kernel modules without

going through the entire process of recompiling a new kernel.

In question is the agpgart module which i want to have as a loadable

module. As of now my the agpgart only exists as header files, and dont have

the modules ".ko" file yet.

Below is the output command from locate agpgart.

$ locate agpgart

/usr/include/linux/agpgart.h

/usr/src/kernels/2.6.31.5-127.fc12.i686.PAE/include/linux/agpgart.h

Thanks in advance.

Regards,

--

Kiniti

[ Thread continues here (3 messages/6.96kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 179 of Linux Gazette, October 2010

2-Cent Tips

2-cent Tip: Checking remote hosts for changes

Ben Okopnik [ben at linuxgazette.net]

Mon, 20 Sep 2010 19:07:05 -0400

On occasion, I need to check my clients' sites for changes against the

backups/mirrors of their content on my machine. For those times, I have

a magic "rsync" incantation:

rsync -irn --size-only remote_host: /local/mirror/directory|grep '+'

The above itemizes the changes while performing a recursive check but

not copying any files. It also ignores timestamps and compares only file

sizes. Since "rsync" denotes changes with a '+' mark, filtering out

everything else only shows the files that have changed in size - which

includes files that aren't present in your local copy.

This can be very useful in identifying break-ins, for example.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

2 cent tip: reading Freelang dictionaries

Jimmy O'Regan [joregan at gmail.com]

Sun, 5 Sep 2010 14:58:30 +0100

Freelang has a lot of (usually small) dictionaries, for Windows. They

have quite a few languages that aren't easy to find dictionaries for,

so though the coverage and quality are usually quite low, they're

sometimes all that's there.

So, an example: http://www.freelang.net/dictionary/albanian.php

Leads to a file, dic_albanian.exe

This runs quite well in Wine (I haven't found any other way of

extracting the contents). On my system, the 'C:\users\jim\Local

Settings\Application Data\Freelang Dictionary' translates to

'~/.wine/drive_c/users/jim/Local\ Settings/Application\ Data/Freelang\

Dictionary/'. The dictionary files are inside the 'language'

directory.

Saving this as wb2dict.c:

#include <stdlib.h>

#include <stdio.h>

int main (int argc, char** argv)

{

char src[31];

char trg[53];

FILE* f=fopen(argv[1], "r");

if (f==NULL) {

fprintf (stderr, "Error reading file: %s\n", argv[1]);

exit(1);

}

while (!feof(f)) {

fread(&src, sizeof(char), 31, f);

fread(&trg, sizeof(char), 53, f);

printf ("%s\n %s\n\n", src, trg);

}

fclose(f);

exit(0);

}

The next step depends on the contents... Albanian on Windows uses

Codepage 1250, so in this case:

./wb2dict Albanian_English.wb|recode 'windows1250..utf8' |dictfmt -f

--utf8 albanian-english

dictzip albanian-english.dict

(as root cp albanian-english.* /usr/share/dictd/

cp albanian-english.* /usr/share/dictd/

add these lines to /var/lib/dictd/db.list :

database albanian-english

{

data /usr/share/dictd/albanian-english.dict.dz

index /usr/share/dictd/albanian-english.index

}

/etc/init.d/dictd restart

and now it's available:

dict agim

1 definition found

From unknown [albanian-english]:

agim

dawn

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

[ Thread continues here (10 messages/18.69kB) ]

2-cent Tip: A better screen, byobu

afsilva at gmail.com [(afsilva at gmail.com)]

Mon, 27 Sep 2010 17:58:59 -0400

The screen command with pre-set status bar, and cool (easy to remember)

shortcuts, like F3, F4, F5 and a configurable menu on the F9 button.

yum install byobu

Got a nice screenshot at:

http://www.mind-download.com/2010/09/better-screen-byobu.html

AS

An HTML attachment was scrubbed...

URL: <http://lists.linuxgazette.net/private.cg[...]nts/20100927/26297595/attachment.htm>

[ Thread continues here (2 messages/1.74kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 179 of Linux Gazette, October 2010

Talkback

404s from 2 cent-ip, mailbag, etc (issue 178)

Thomas Adam [thomas at xteddy.org]

Wed, 1 Sep 2010 23:38:39 +0100

Hello,

A bunch of the "Thread continues ..." links aren't working in issue #178.

Examples:

http://linuxgazette.net/178/misc/lg/load_average_vs_cpus.html

http://linuxgazette.net/178/misc/lg/2_cent_tip___counting_your_mail.html

etc.

Also, it seems my name no longer appears in the list of mailbag

contributors, and hasn't done for a while now. Not for many issues. I

don't -think- I've changed my name, although at the weekends...

-- Thomas Adam

"Deep in my heart I wish I was wrong. But deep in my heart I know I am

not." -- Morrissey ("Girl Least Likely To" -- off of Viva Hate.)

[ Thread continues here (4 messages/7.63kB) ]

Talkback:178/silva.html

afsilva at gmail.com [(afsilva at gmail.com)]

Sat, 18 Sep 2010 22:38:48 -0400

Not sure if it is normal to send a talkback to my own article, but here it

is:

I have gotten a couple of comments/notes since my article "Common problems

when trying to install Windows on KVM with vir-manager" came out related to

tip #2 (turning off selinux). Yes, I understand it's recommended that

selinux stay turned on in 'enforcing' mode in Fedora. Yet, if I do this, I

get the following error when trying to startup the virtual machine using a

SDL virtual display.

Traceback (most recent call last):

File "/usr/share/virt-manager/virtManager/engine.py", line 878, in

run_domain

vm.startup()

File "/usr/share/virt-manager/virtManager/domain.py", line 1321, in

startup

self._backend.create()

File "/usr/lib64/python2.6/site-packages/libvirt.py", line 333, in create

if ret == -1: raise libvirtError ('virDomainCreate() failed', dom=self)

libvirtError: operation failed: failed to retrieve chardev info in qemu with

'info chardev'

As a 'somewhat reasonably'  responsible member of the Fedora community, I

have filed a bug on Red Hat's bugzilla about it (

https://bugzilla.redhat.com/show_bug.cgi?id=635328). Until that is resolved,

or someone else is able to show me another way to get sound working on such

a VM, I stand by my tip to turn off selinux.

responsible member of the Fedora community, I

have filed a bug on Red Hat's bugzilla about it (

https://bugzilla.redhat.com/show_bug.cgi?id=635328). Until that is resolved,

or someone else is able to show me another way to get sound working on such

a VM, I stand by my tip to turn off selinux.

Thanks,

Anderson Silva

--

http://www.the-silvas.com

An HTML attachment was scrubbed...

URL: <http://lists.linuxgazette.net/private.cg[...]nts/20100918/75dd5f8c/attachment.htm>

Talkback: Discuss this article with The Answer Gang

Published in Issue 179 of Linux Gazette, October 2010

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

|

Contents:

|

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to [email protected]. Deividson can also be reached via twitter.

News in General

Oracle Supports Java, Solaris but not Developer Community Control

Oracle Supports Java, Solaris but not Developer Community Control

Technology Titan Oracle just held its Oracle Open World

mulit-user-group conference and released roadmaps and announcements

impacting several projects in the Open Source sphere.

These updates include new releases for MySQL, Solaris, and Oracle

Linux, new Oracle engineered servers and appliances, as well the next

generation of the SPARC processor architecture with its partner

Fujitsu. However, the future relationship between Oracle and

developer institutions like the Java Community Process and OpenSolaris

user groups were left uncertain and with hints of an adversarial turn.

Oracle seems to be backing away from OpenSolaris as a developer or

small shop operating system by no longer sharing development source

code with OpenSolaris distros. A panel of Oracle executives, speaking

to the press, declined to discuss OpenSolaris but did state that new

Solaris source code would eventually become open-sourced under the

CDDL.

Oracle is altering Sun's prior relationship with the Open Source

community while making its Solaris offerings more commercial and more

like its Linux offerings, which are based on customer support

contracts.

Back in August, an internal management memo to Solaris engineers was

leaked to the OpenSolaris email distribution. While stressing

Oracle's commitment to make Solaris "...a best-of-breed technology for

Oracle's enterprise customers" and decision to hire the "top operating

systems engineers in the industry," the memo described how Oracle

would no longer share source code and builds on a regular basis,

except with key partners. Instead, source code would be made available

only after new version releases of Solaris:

"We will distribute updates to approved CDDL or other open source-

licensed code following full releases of our enterprise Solaris

operating system. In this manner, new technology innovations will show

up in our releases before anywhere else. We will no longer distribute

source code for the entirety of the Solaris operating system in

real-time while it is developed, on a nightly basis.

"Anyone who is consuming Solaris code using the CDDL, whether in

pieces or as a part of the OpenSolaris source distribution or a

derivative thereof, would therefore be able to consume any updates we

release at that time, under the terms of the CDDL, LGPL, or whatever

license applies.

"We will have a technology partner program to permit our industry

partners full access to the in-development Solaris source code through

the Oracle Technology Network (OTN). This will include both early

access to code and binaries, as well as contributions to us where that

is appropriate."

For the complete memo, visit:

http://unixconsole.blogspot.com/2010/08/internal-oracle-memo-leaked-on-solaris.html.

Oracle Outlines Core SPARC, Solaris and ZFS Innovations

Oracle Outlines Core SPARC, Solaris and ZFS Innovations

During his keynote presentation at Oracle OpenWorld 2010, Oracle

Executive Vice President John Fowler showcased technology innovations

and outlined the value of hardware and software engineered to work

together as in Oracle's high-end ExaData servers.

Reiterating Oracle's commitment to SPARC, Fowler introduced the

industry's first 16-core processor, the SPARC T3, and SPARC T3

systems, which deliver optimized system performance for

mission-critical applications. He unveiled eight world-record

benchmark results, running the new SPARC T3 server family.

Fowler also unveiled the next-generation Sun ZFS Storage Appliance

product line that provides unified storage solutions for deploying

Oracle Database and data protection for Oracle Applications.

"We are focused on providing co-engineered systems - Oracle hardware

and software engineered to work together - to continually drive better

performance, availability, security and management, which translates

into business value for our customers," said Fowler.

Oracle Outlines Next Major Release of Oracle Solaris

Oracle Outlines Next Major Release of Oracle Solaris

Besides increasing its investment in the Oracle Solaris operating

system, Oracle is preparing for Oracle Solaris 11 in 2011 by releasing

Solaris 11 Express in 2010 to provide customers with access to the

latest Solaris 11 technology.

Oracle Solaris 11 will contain more than 2,700 projects with more than

400 inventions. Oracle Solaris 11 is expected to reduce planned

downtime by being faster and easier to deploy, update and manage, and:

- Nearly eliminate patching and update errors with new

dependency-aware packaging tools;

- Build a custom stack of Solaris and Oracle Software in a physical or

virtual image to enforce enterprise quality and policy standards;

- Reduce maintenance windows by eliminating the need for up to 50

percent of system restarts;

- Recover systems in tens of seconds versus tens of minutes with Fast

Reboot;

- Receive proactive and preemptive support that reduces service

outages from known issues via My Oracle Support telemetry integration

with the Oracle Solaris fault management architecture.

Oracle Solaris 11 is being engineered with new capabilities for

building, deploying and maintaining Cloud systems. Oracle Solaris 11

will be optimized for the scale and performance requirements of

immediate and future Cloud-based deployments, and will scale to tens

of thousands of hardware threads, hundreds of terabytes of system

memory, and hundreds of Gigabits of I/O.

The first Oracle Solaris 11 Express release, expected by the end of

calendar year 2010, will have an optional Oracle support agreement.

This release is expected to be the path forward for developers,

end-users and partners using previous generations of Solaris and

OpenSolaris releases.

Over 1,000 SPARC and x86 systems from other hardware providers have

been tested and certified by Oracle. Solaris 11 also will be powering

the newly announced Oracle Exadata X2-2 and X2-8 Database Machines, as

well as the Oracle Exalogic Elastic Cloud machine.

Oracle Outlines Plans for Java Platform

Oracle Outlines Plans for Java Platform

During the opening keynote of JavaOne 2010, Thomas Kurian, executive

vice president, Oracle Product Development outlined plans for the

future of the Java platform and showcased product demonstrations

illustrating the latest Java technology innovations. Kurian's

presentation covered four key areas of Java technology:

- Java Standard Edition (Java SE) - optimizing it for new application

models and hardware; including extended support for running new

scripting languages, increased developer productivity and lower

operational costs. Kurian discussed the roadmap for JDK 7 and JDK 8,

which will be based on OpenJDK, and highlighted some of the key

OpenJDK projects.

- Java on the Client - Oracle is enhancing the programming model with

JavaFX, to deliver advanced graphics, high-fidelity media and new HTML

5, JavaScript and CSS Web capabilities, along with native Java

platform support.

- Java Enterprise Edition (Java EE) - Java EE will become more modular

and programming more efficient with improvements such as dependency

injection and reduced configuration requirements. Product

demonstration highlighted how the Java EE 6 Web Profile reduces the

size of the Java runtime for light-weight web applications, reducing

overhead and improving performance.

- Java on Devices - Oracle will modernize the Java mobile platform by

delivering Java with Web support to consumer devices. Oracle is also

including new language features, small-footprint CPU-efficient

capabilities for cards, phones and TVs, and consistent emulation

across hardware platforms.

While 1.1 billion desktops run Java, 3 billion mobile phones run Java,

and 1.4 billion Java Cards are manufactured each year. This is a very

big market that impacts computing at every level.

"Oracle believes that the Java community expects results. With our

increased investment in the Java platform, a sharp focus on features

that deliver value to the community, and a relentless focus on

performance, the Java language and platform have a bright future,"

said Kurian. "In addition, Oracle remains committed to OpenJDK as the

the best open source Java implementation and we will continue to

improve OpenJDK and welcome external contributors."

However, in its presentations, Oracle said very little about the

well-established Java Community Process (JCP) that has directed the

evolution of Java. Although many developers were concerned about this

apparent diminishment of the JCP, most seemed pleased with the strong

on-going commitment for Java by Oracle and its support of OpenJDK.

Android Poised to Overtake Blackberry, iPhone Market Share

Android Poised to Overtake Blackberry, iPhone Market Share

The rapid growth of mobile devices running Google's Android operating

system will continue at the expense of the other leading smartphone

platforms, BlackBerry, iPhone, and even Windows Mobile, according to

market share data compiled by the comScore marketing service.

For the quarter ending in July, comScore found that Android-based

devices improved their share of the overall smartphone market, growing

to 17 percent from 12 percent. comScore researchers found that

Microsoft lost 2.2 percent of total smartphone market share while RIM

tumbled 1.8 percent and Apple, despite launching the vaunted iPhone 4

in June, shed 1.3 percent.

RIM was the leading mobile smartphone platform in the U.S. with 39.3

percent share of U.S. smartphone subscribers, followed by Apple with

23.8 percent share. Google saw significant growth during the period,

rising 5.0 percentage points to capture 17.0 percent of smartphone

subscribers. Microsoft accounted for 11.8 percent of Smartphone

subscribers, while Palm rounded out the top five with 4.9 percent

The July report found Samsung to be the top handset manufacturer

overall with 23.1 percent market share, while RIM led among smartphone

platforms with 39.9 percent market share.

For more information, see:

http://www.comscore.com/Press_Events/Press_Releases/2010/9/comScore_Reports_July_2010_U.S._Mobile_Subscriber_Market_Share.

DeviceVM Adds MeeGo to Splashtop Instant-on Product

DeviceVM Adds MeeGo to Splashtop Instant-on Product

DeviceVM, a provider of instant-on computing software, previewed the

next-generation of its Splashtop instant-on platform at the Intel

Developer's Forum in San Francisco. The flagship Splashtop product has

already shipped on millions of notebooks and netbooks worldwide from

leading PC OEMs including Acer, ASUS, Dell, HP, Lenovo, LG, Sony and

others. The company will offer a MeeGo-compliant version of the

popular companion OS to all existing OEM customers, while enabling

current users of Splashtop-powered systems to take advantage of a

seamless upgrade in the first half of 2011.

First introduced in 2007, the flagship Splashtop product is a

Linux-based instant-on platform that allows users to get online,

access e-mail, and chat with friends seconds after turning on their

PCs. The MeeGo project combines Intel's Moblin and Nokia's Maemo

projects into one Linux-based, open source software platform for the

next generation of computing devices.

By embracing MeeGo as the foundation for Splashtop, application

developers have the possibility to distribute their software to

millions of potential users leading to greater adoption of the MeeGo

platform. DeviceVM will also consider pre-bundling popular

applications along with distribution of the MeeGo-based Splashtop. In

moving to a MeeGo-based platform, users will now be able to download,

install and run hundreds of apps currently available from the Intel

AppUp Center.

"Since the launch of Splashtop in late 2007, we have received

thousands of requests from application developers to release an SDK,"

said Mark Lee, CEO and co-founder of DeviceVM. "By embracing MeeGo and

moving Splashtop to be fully compliant with the specifications

shepherded by the Linux Foundation, we will effectively open up

Splashtop to allow developers to deliver high-value applications to

audiences across a range of computing devices."

Demonstrations of the new Meego-based Splashtop product were seen

during the IDF expo.

DeviceVM is an active Linux proponent, and earlier this year announced

the election of CEO and co-founder Mark Lee to the Linux Foundation

Board of Directors.

The MeeGo-based Splashtop is already being made available to leading

PC OEMs currently shipping Splashtop on a range of device types.

Consumer and commercial end-users will be able to upgrade to the new

Splashtop in the first half of 2011.. For more information, visit

http://www.splashtop.com.

NetApp and Oracle Agree to Dismiss IP Lawsuits

NetApp and Oracle Agree to Dismiss IP Lawsuits

NetApp and Oracle have agreed to dismiss their pending mutual patent

litigation, which began in 2007 between Sun Microsystems and NetApp.

Oracle and NetApp want to have the lawsuits dismissed without

prejudice. The terms of the agreement are currently confidential.

Sun released the code to the ZFS or Zettabyte file system used in

Solaris to its developer community in 2005, but claimed to have

developed it in-house years earlier. NetApp sued later, claiming

that many of the features were similar to the WAFL (Write Anywhere

File Layout) file system technology used by NetApp. There were also

further IP patent suits regarding the Sunscreen technology that NetApp

acquired in 2008.

No theft of code was alleged by either side. The conflict was around

commercial use of similar ideas and design.

"For more than a decade, Oracle and NetApp have shared a common vision

focused on providing solutions that reduce IT cost and complexity for

thousands of customers worldwide," said Tom Georgens, president and

CEO of NetApp. "Moving forward, we will continue to collaborate with

Oracle to deliver solutions that help our mutual customers gain

greater flexibility and efficiency in their IT infrastructures."

Broadcom releases open-source driver for its wireless chipsets

Broadcom releases open-source driver for its wireless chipsets

Broadcom has announced the initial release of a fully-open Linux

driver for it's latest generation of 11n chipsets. The driver, while

still a work in progress, is released as full source and uses the

native mac80211 stack. It supports multiple current chips (BCM4313,

BCM43224, BCM43225) as well as providing a framework for supporting

additional chips in the future, including mac80211-aware embedded

chips.

This is a major shift in policy by a dominant networking vendor.

In a blog entry on Linux.com, Linux Foundation Executive Director Jim

Zemlin wrote:

"We are extremely happy to see this change for multiple reasons. One:

it's obviously good to have more technology available to use; we want

technology to "just work" with Linux and since Broadcom is a major

technology supplier their absence from the mainline kernel was

significant. Two: we have been working with our Technical Advisory

Board on this issue for the last few years to educate vendors on

Linux' model and why it's in their interest to open source their

drivers."

The README and TODO files included with the sources provide more

details about the current feature set, known issues, and plans for

improving the driver.

The driver is currently available in staging-next git tree, available

at:

git://git.kernel.org/pub/scm/linux/kernel/git/gregkh/staging-next-2.6.git

in the drivers/staging/brcm80211 directory.

Conferences and Events

- 9th USENIX Symposium on Operating Systems Design and

Implementation (OSDI '10)

-

October 4-6 2010, Vancouver BC, Canada

The 9th USENIX Symposium on Operating Systems Design and

Implementation (OSDI '10) will take place October 4-6, 2010, in

Vancouver, BC, Canada.

Join us for OSDI '10, the premier forum for discussing the design,

implementation, and implications of systems software. This year's

program has been expanded to include 32 high-quality papers in areas

including cloud storage, production networks, concurrency bugs,

deterministic parallelism, as well as a poster session. Don't miss the

opportunity to network with researchers and professionals from

academic and industrial backgrounds to discuss innovative, exciting

work in the systems area.

http://www.usenix.org/osdi10/lgb

- JUDCon 2010

-

October 7-8, Berlin, Germany

http://www.jboss.org/events/JUDCon.html.

- Silicon Valley Code Camp 2010

-

October 9-10, Foothill College. Los Altos, CA

http://www.siliconvalley-codecamp.com/.

- 17th Annual Tcl/Tk Conference (Tcl'2010)

-

October 11 - 15, 2010, Chicago/Oakbrook Terrace, Illinois, USA

We are pleased to announce the 17'th Annual Tcl/Tk Conference (Tcl'2010).

Learn from the experts and share your knowledge. The annual Tcl/Tk

conference is the best opportunity to talk with experts and peers,

cross-examine the Tcl/Tk core team, learn about what's coming and how

to use what's here.

The Tcl Conference runs for a solid week with 2 days of tutorials

taught by experts and 3 days of refereed papers discussing the latest

features of Tcl/Tk and how to use them.

The hospitality suite and local bars and restaurants provides plenty of

places to discuss details, make new friends and perhaps even find a new

job or the expert you've been needing to hire.

17th Annual Tcl/Tk Conference (Tcl'2010)

- Foundation End User Summit 2010

-

October 12-13, Hyatt Regency, Jersey City, New Jersey

http://events.linuxfoundation.org/component/registrationpro/?func=details&did=40.

- Strange Loop 2010 Developer Conference

-

October 14-15th, Pageant Hotel, St. Louis, MO.

http://strangeloop2010.com/Interop.

- Enterprise Cloud Summit

-

October 18-19, New York, NY

http://www.interop.com/newyork/conference/cloud-computing-summit.php.

- Interop NY Conference

-

October 18-22, New York, NY

http://www.interop.com/newyork/PayPal.

- Innovate 2010 Conference

-

October 26-27, Moscone West, San Francisco, CA

https://www.paypal-xinnovate.com/index.html.

- CSI Annual 2010

-

October 26-29, Washington, D.C.

http://csiannual.com/.

- Linux Kernel Summit

-

November 1-2, 2010. Hyatt Regency Cambridge, Cambridge, MA

http://events.linuxfoundation.org/events/linux-kernel-summit.

- ApacheCon North America 2010

-

1-5 November 2010, Westin Peachtree, Atlanta GA

The theme of the ASF's official user conference, trainings, and expo is

"Servers, The Cloud, and Innovation," featuring an array of educational

sessions on open source technology, business, and community topics at the

beginner, intermediate, and advanced levels.

Experts will share professionally directed advice, tactics, and lessons learned

to help users, enthusiasts, software architects, administrators, executives,

and community managers successfully develop, deploy, and leverage existing and

emerging Open Source technologies critical to their businesses.

ApacheCon North America 2010

- QCon San Francisco 2010

-

November 1-5, Westin Hotel, San Francisco CA

http://qconsf.com/.

- ZendCon 2010

-

November 1-4, Convention Center, Santa Clara, CA

http://www.zendcon.com/.

- 9th International Semantic Web Conference (ISWC 2010)

-

November 7-11, Convention Center, Shanghai, China

http://iswc2010.semanticweb.org/.

- LISA '10 - Large Installation System Administration Conference

-

November 7-12, San Jose, CA

http://usenix.com/events/.

- ARM Technology Conference

-

November 9-11, Convention Center, Santa Clara, CA

http://www.arm.com/about/events/12129.php.

- Sencha Web App Conference 2010

-

November 14-17, Fairmont Hotel, San Francisco CA

http://www.sencha.com/conference/.

Distro News

WeTab tablet first with MeeGo open operating system

WeTab tablet first with MeeGo open operating system

In September, WeTab GmbH announced its tablet computer, the WeTab,

developed in cooperation with Intel, just preceeding the IFA

international trade fair for consumer electronics in Berlin and IDF in

San Francisco. The WeTab, which went on to the German market in

September, is the first tablet worldwide based on MeeGo.

WeTab OS, the WeTab operating system, is based on the free Linux

distribution MeeGo and integrates runtime environments for various

other technologies. In addition to native Linux apps, many other

applications can run on the WeTab, including Android apps, Adobe Air

applications and MeeGo apps. Several apps that may be interesting for

the user have been compiled and are available on the WeTab Market,

from where they can be loaded directly onto the WeTab. This means that

developers can program in the languages they are familiar with and

users can choose from an abundance of very different applications.

The Web-browser plays a special role here and is based on the free

HTML rendering engine WebKit, enabling fast surfing and including

suport for HTML5, Adobe Flash and Java.

"Working intensively with Intel, we have developed the WeTab OS with

MeeGo to meet the requirements of a tablet user in the best way

possible. The tablet runs extremely fast and, in addition to native

apps, also provides direct access to countless Web-based apps", says

Stephan Odörfer, Managing Director of 4tiitoo AG, which is are also

involved in this joint venture with WeTab GmbH.

Wolfgang Petersen, Director of Intel Software and Services Group at

Intel Deutschland GmbH, says: "The WeTab is the first tablet based on

MeeGo and the Intel Atom processor. MeeGo is designed for a broad

range of devices. Implementing MeeGo on the WeTab shows just how the

operating system can be adapted for use on a tablet."

WeTab GmbH is a joint venture between 4tiitoo AG and Neofonie GmbH.

You can find more information at http://www.wetab.mobi, http://www.intel.com and

http://www.meego.de.

Linpus Lite for MeeGo netbook edition adds Touch Support

Linpus Lite for MeeGo netbook edition adds Touch Support

Linpus announced in September further enhancements to its Meego-based

version of its Linpus Lite, which is optimized for the Intel Atom

processor. Linpus Lite is their consumer device operating system

designed for a better mobile Internet experience.

Linpus originally brought one of the first MeeGo-based operating

systems to market in time for Computex. They have since upgraded it

with a number of improvements. First, they have included better

categorization of the social networking and recent object panel in

Myzone. Linpus has created a tab for each of the different sites and

for recent objects, making it easier to find your messages.

Second, they have also added touch support and dual user interfaces.

You have the choice of two interfaces: MeeGo and Linpus' Simple Mode.

You can switch easily between these two modes by one tap on an icon in

Myzone or in Simple Mode.

Linpus' version has also added a number of other enhancements:

- Extremely fast boot - now under 10 seconds

- More social network support in Myzone - Flickr and MySpace

- Online support - Linpus commercial grade LiveUpdate function to

deliver device-specific patches, upgrades and new applications to your

system

- Media Player - added support for audio and video streaming

- Power management - idle mode and auto-suspend net power savings of

15 to 20%

- Network Manager - more 3G modems and device-to-device file transfer,

VPN, PPPoE and WPA2-enterprise support;

- Linpus Windows Data Applications - for dual-booting with Windows;

- Peripheral support - extensive support especially for graphics,

including the Intel full series graphics and most of NVIDIA and ATI;

- Input method - multi-language input method and international

keyboard support through iBus;

- File Manager - a Windows-like experience means that the partitions

of USB drives are now alphabetically labeled.

Linpus (http://www.linpus.com) has worked on open source solutions across

numerous platforms and products, garnering a reputation for

engineering excellence as well as highly intuitive user interfaces.

The MeeGo project combines Intel's Moblin and Nokia's Maemo projects

into one Linux-based, open source software platform for the next

generation of computing devices.

Software and Product News

Oracle Enhances New Solaris Release

Oracle Enhances New Solaris Release

Building on its leadership in the enterprise operating system market,

Oracle today announced Oracle Solaris 10 9/10, Oracle Solaris Cluster

3.3 and Oracle Solaris Studio12.2. 10 of the top 10

Telecommunications Companies, Utilities and Banks use Oracle Solaris.

Oracle Solaris is now developed, tested and supported as an integrated

component of Oracle's "applications-to-disk" technology stack, which

includes continuous major platform testing, in addition to the Oracle

Certification Environment, representing over 50,000 test use cases for

every Oracle Solaris patch and platform released.

Oracle Solaris 10 9/10 provides networking and performance

enhancements, virtualization capabilities, updates to Oracle Solaris

ZFS and advancements to leverage systems based on the latest SPARC and

x86 processors.

The Oracle Solaris 10 9/10 update includes new features, fixes and

hardware support in an easy-to-install manner, preserving full

compatibility with over 11,000 third-party products and customer

applications.

Oracle Solaris is designed to take advantage of large memory and

multi-core/processor/thread systems and enable industry-leading

performance, security and scalability for both existing and new

systems.

Oracle Solaris Cluster 3.3 builds on Oracle Solaris to offer the most

extensive, enterprise high availability and disaster recovery

solutions.

Enables virtual application clusters via Oracle Solaris Containers in

Oracle Solaris Cluster Geographic Edition and integrates with Oracle

WebLogic Server, Oracle's Siebel CRM, MySQL Cluster and Oracle

Business Intelligence Enterprise Edition 11g for consolidation in

virtualized environments.

Provides the highest level of security with Oracle Solaris Trusted

Extensions for mission-critical applications and services.

Supports InfiniBand on public networks and as storage connectivity and

is tightly integrated and thoroughly tested with Oracle's Sun Server

and Storage Systems.

Oracle Solaris Studio 12.2 provides an advanced suite of tools

designed to work together for the development of single,

multithreaded, and distributed applications. With its integrated

development environment (IDE), including a code-aware editor,

workflow, and project functionality, Oracle Solaris Studio helps

increase developer productivity.

Oracle Solaris 10 9/10 features include:

Networking and database optimizations for Oracle Real Application

Clusters (Oracle RAC).

Oracle Solaris Containers now provide enhanced "P2V" (Physical to

Virtual) capabilities to allow customers to seamlessly move from

existing Oracle Solaris 10 physical systems to virtual containers

quickly and easily.

Increased reliability for virtualized Solaris instances when deployed

using Oracle VM for SPARC, also known as Logical Domains.

Oracle Solaris ZFS online device management, which allows customers to

make changes to filesystem configurations, without taking data

offline.

New Oracle Solaris ZFS tools to aid in recovering from problems

related to unplanned system downtime. Operating system, database and other Oracle Solaris patches are now

verified and coordinated to provide the highest levels of quality,

confidence and administrative streamlining.

New Release of Oracle Secure Global Desktop

New Release of Oracle Secure Global Desktop

Further enhancing its comprehensive portfolio of desktop

virtualization solutions, Oracle released Oracle Secure Global Desktop

4.6 in September. Secure Global Desktop is an access solution that

delivers server-hosted applications and desktops to nearly any client

device, with higher security, decreased operational costs, and

increased mobility.

With a highly secure architecture, Oracle Secure Global Desktop helps

keep sensitive data in the datacenter behind the corporate firewall,

and not on end user systems or in the vulnerable demilitarized zone

(DMZ), and can only be accessed by authenticated users with

appropriate privileges.

Oracle Secure Global Desktop enables an additional layer of security

for accessing sensitive enterprise applications, beyond using a web

browser alone, by providing a highly secure Java-based Web client that

does not retain cookies or utilize Web page cache files that could be

exploited.

The new release lowers administration overhead by delivering secure

access to server-hosted applications and desktops from a wide variety

of popular client devices. Applications and desktops that run on

Windows, Oracle Solaris, Oracle Enterprise Linux, and other UNIX and

Linux versions are supported.

Oracle Secure Global Desktop 4.6 is part of "Oracle Virtualization," a

comprehensive desktop-to-datacenter virtualization portfolio, enabling

customers to virtualize and manage their full hardware and software

stack from applications to disk.

Oracle Secure Global Desktop 4.6 enhances the security and centralized

management of applications in the datacenter by delivering:

- Greater browser flexibility: The Secure Global Desktop client has

the flexibility to be used with nearly any Java-enabled browser to

access server-hosted browser instances where the server-hosted

application requires a different browser, plug-ins, or settings;

- Enhanced availability: The Array Resilience feature automatically

re-establishes connections to the server array after a primary server

or network failure to provide higher levels of availability;

- Enhanced application launch control for users: Dynamic Launch

reduces administration overhead by giving end users greater control

over launching applications;

- Easier integration with third party infrastructure: Integration

with third party virtual desktop infrastructure (VDI) connection

brokers, in addition to the existing integration with Oracle Virtual

Desktop Infrastructure;

- Dynamic Drive Mapping allows users to "hot-plug" hard disk drives

and USB drives on their PCs and utilize them in Oracle Secure Global

Desktop sessions;

- Configurable directory services and password management:

Administrators can configure individual settings for multiple

directory services, such as Oracle Internet Directory, Microsoft

Active Directory and other LDAP servers.

"The new capabilities delivered in Oracle Secure Global Desktop 4.6

underscore our focus on making applications easier to deploy, manage

and support in virtualized datacenter environments," said Wim

Coekaerts, senior vice president, Linux and Virtualization

Engineering, Oracle. "Oracle Secure Global Desktop 4.6 is the latest

of many new desktop virtualization products and capabilities recently

announced including new releases of Oracle VM VirtualBox, Sun Ray

Software and Oracle Virtual Desktop Infrastructure. This demonstrates

Oracle's continued commitment to providing a comprehensive desktop to

datacenter portfolio."

For more information, visit

http://www.oracle.com/us/technologies/virtualization/index.htm.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Henry's Techno-Musings: User Interfaces

By Henry Grebler

"I've bought us a new potato peeler. Doesn't it look fantastic!"

My wife's enthusiasm was boundless. Meanwhile I struggled with the

nuances of her declaration.

Why us? I wondered uncharitably. I was perfectly happy with the

three other potato peelers we already had. Why did we need a new

one? And why would I care what it looked like?

A few days later, I complained, "It doesn't do a good job."

"You're such a curmudgeon. It's beautiful. That's all that matters."

This is our endless debate. For her, style is all. I, on the other

hand, believe that, without function, form is irrelevant.

Sadly (for me), my wife is in the majority. And nowhere is this

demonstrated better than on websites.

I see many jobs for web designers, but I can't get my head around what

people mean. If all these websites are designed, how come they are so

dreadful at what they do?

Perhaps I'm begging the question. Maybe the purpose of each website

is to be a monument to its designer, an item for her folio, a notch on

his belt.

Perhaps, in the vein of "your call is important to us" (newsflash: it

isn't), these websites do indeed reflect the desires of their owners.

These desires start with a complete disdain for their users, whom they

treat with contempt. They seem to want their websites to be vehicles

to push other products.

In the Beginning

Take my bank, Citibank. When I first started using their website for

banking (over 3 years ago), I was extremely impressed. It seemed that

they had found a balance between security and usability. (Of course,

I'm talking about my experience in Australia. I have no knowledge of

how Citibank's websites work anywhere else in the world. I'm guessing

it's the same throughout Australia, but I can't even guarantee that.)

What impressed me the most was their out-of-band confirmation. Before

I transfer money to any party, I must first set that party up as an

account in my profile. I use normal browser procedures to create the

account, but before the account can be activated (ie used), I have to

ratify it. In the past, I could do that in one of 2 ways: ring

Citibank (a not particularly convenient mechanism - but it is only

once per account); or respond to an email.

In the meantime, they have replaced both of these with another

mechanism: they SMS a code which I must manually enter. I think most

banks do that now.

Using SMS rather than email is likely to be more secure. In theory, if

your PC is compromised, the hacker has access to your bank account and

your email. To rip you off now, the hacker would also have to be in

possession of your phone.

That's all basically good news. Now for the not-so-good news.

In the first incarnation of Internet banking, I had to provide my ATM

card number and PIN (pretty much the same as when I went to an ATM).

That seemed to me to be secure enough. If it was adequate protection

for me at the ATM, why was it less than adequate for Internet banking?

Security

I am not an authority on security, but neither am I a complete

stranger.

The theory is that security is about something you have and

something you know. (Technically, there's a lot more to it than

that. There's also something you are. But that doesn't apply

here, so let's move on.) So, when I go to the ATM, what I have

is my bank's card. And what I know is my PIN.

One way that fraud is perpetrated on such a system is that the baddies

capture (usually photograph or film) the details of your card

(basically its number) and use the captured data to create a clone

card. Capturing someone's PIN is often not difficult. There are video

cameras everywhere these days; and most people are almost reckless in

their failure to even try to conceal their activity when they enter

their PIN. Just look around the next time you are in the queue at a

store's checkout.

You should take the view that if your eyes can see what you are keying

when you enter your PIN, then it is susceptible to capture. Ideally,

you should cover your keying hand as you key.

When you pay your bill at the restaurant, the something you have is

your credit card. There really isn't anything that you know. Arguably,

you know how to produce your signature; but then so does any

respectable forger. Once she has obtained your card, she has all the

time in the world to practice forgery. Even better, if a baddy gets

hold of a copy of your card's imprint, he can produce a clone and sign

the clone in his handwriting (with your name).

I guess the weakness in Citibank's first incarnation, is that it

converts something you have (the card) into something you

know (the card's number). When it comes to things you know, two

are not better than one. (Perhaps marginally better.)

There are techniques that achieve a de facto something you

have. "RSA SecurID is a mechanism developed by RSA Security for

performing two-factor authentication for a user to a network

resource." (http://en.wikipedia.org/wiki/SecurID) In essence, it's a

gizmo that displays a number (at least 6 digits). The number changes

every minute. Every gizmo has its own unique sequence of numbers. So,

in theory, unless you are holding the gizmo, you cannot know which

number is correct at any time. Put another way, knowledge of the

correct number is de facto proof that you have that special something

you have.

These techniques are probably expensive. Perhaps the bank thought that

its customers are too stupid to be able to use such a gizmo.

Changes

After some time, Citibank changed the access mechanism. I was invited

(read ordered):

You will be guided to create your own User ID, Password and

three "Security Questions". You will be asked to choose from a

range of questions. Each time you sign on you will be asked

one question.

My guess is that most users access their accounts from a PC running

Microsoft, um, products (I cannot bring myself to write software in

the same sentence as Microsoft). And I guess there was a concern

that many of these machines could be (or had been) compromised. I

generally don't use Microsoft platforms to access the Internet; I run

Linux on my desktop (as any sensible person would). And I NEVER use

Microsoft platforms to access anything to do with dollars.

So I wonder why I have to suffer. Why can't I choose the mechanism

with which I access Citibank's facilities?

Ostensibly, Citibank's brave new world of banking was better than the

previous world. Here's how it worked (and still works at time of

writing).

Disclaimer

By now, the reader must have realised that I have no personal

inside knowledge of Citibank or any of its personnel. This

entire piece is speculation on my part, together with my actual

personal experience. As they say, YMMV. So I'm going to drop

the "my guesses" and "I supposes"; it's all getting too

clunky.

I go to the login screen (affectionately called a "sign on" so as not

spook the cattle). I enter my User ID. I click on the field where I

would normally expect to enter my Password. Up pops a virtual

keyboard. This is supposed to defeat keyboard sniffers. Even if

a sniffer has captured a session during which I entred my User ID and

Password, the only bit that is usable is the User ID (and maybe part

of the Password). The virtual keyboard consists of 3 parts. The

letters of the alphabet are pretty standard; there's a numeric pad to

the right; but there are no numbers on the top row of the keyboard,

only the characters produced when these keys are shifted:

!@#$%^&*()_+

The special characters and digits are not in fixed positions; each

time the virtual keyboard pops up, the order of these keys changes. So

even if a keyboard sniffer detects where you clicked, it does

not establish what you clicked.

The perpetrators of this approach had better be really really sure

that this last assertion is true. Because the virtual keyboard with

the changing keycaps comes at the expense of reducing my password

strength. They have decreased the size of the symbol set. There are 94

ASCII printable characters available to a random password generator.

Citibank's keyboard is case insensitive; there are only 46 characters

to choose from.

Further, I'm not certain it defeats all hacks. If your PC is

compromised, perhaps it is also possible that your browser has been

hijacked. If the baddies present you with their virtual keyboard,

would you notice?

There are other hacks that are theoretically possible.

My suspicion is that the virtual keyboard came from the same school as

many of the security "enhancements" inflicted on people in the US

after 9/11. These "enhancements" are more about creating the

illusion of security than being effective.

It's horrendous to use. For me, when my eyesight was bad, the

characters were almost unreadable. On a normal keyboard, I don't

have to see the characters clearly; it's enough that I know where

they are. But on the virtual keyboard they are never in the same place

twice. I changed my password to use characters that I could more

readily distinguish.

[ ...thus decreasing the password strength even

further (it's not that hard to guess which characters are more

distinguishable with poor eyesight.) This reinforces Henry's point: the Law

of Unintended Consequences is alive, well, and hyperactive, particularly

in the area of UI/security interactions. -- Ben ]

Because my eyesight was so poor, I was in the habit of using my

browser's feature for increasing text size. That should solve the

problem with the virtual keyboard, I hear you say. Missed it by

that

much. When I increased text size in the browser window, each character

in the virtual keyboard was no longer aligned with the box with which it

was associated. In some cases it became even harder to read, even

though it was bigger.

My personal biggest gripe with this mechanism is that it takes me much

longer to enter my password. Instead of a simple swipe-paste, I must

click each character.

My other bank (Commonwealth Bank) has a simple Client Number and

Password. As far as I'm concerned, that's perfectly acceptable and

vastly preferable. Commonwealth Bank also uses SMS for ratification.

Makeover

That brings us to yesterday (March 2010). The folk (web designers?) at

Citibank have given their website a makeover. It has a completely

different look. What's really galling is that the functionality is not

better than it was. However, it is vastly different; so now I have to

learn how to use the new user interface.

Imagine you've bought yourself a shiny new car. (Obviously it wouldn't

happen like this.) You drive it for six months. And then one day you

jump behind the wheel - er, wait! where is the steering wheel? Oh,

they've moved it to the other side of the car. I have to get in the

passenger side to drive. I wonder which one of these pedals is the

brake? Ok, I'm starting to get used to it. Now, indicator? Hmm, where

would they have put the indicator?

I think you get my drift.

And it's not any better than the previous user interface! - which

wasn't great and could have done with some improvement.

But we're back to the potato peeler. If it doesn't peel potatoes, who

cares what it looks like? In my opinion (obviously not worth much),

Citibank's new look is really terrible.

But here we've come full circle also. The one noticeable difference is

that there are many more references to and come-ons for Citibank's other

products. Once again, the customer is treated with contempt - unless

he's about to buy something.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/grebler.jpg)

Henry has spent his days working with computers, mostly for computer

manufacturers or software developers. His early computer experience

includes relics such as punch cards, paper tape and mag tape. It is

his darkest secret that he has been paid to do the sorts of things he

would have paid money to be allowed to do. Just don't tell any of his

employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 179 of Linux Gazette, October 2010

Away Mission - PayPal Innovate

By Howard Dyckoff

PayPal's Innovate developer conference comes the San Francisco on October

26-27. Here's a chance to learn how the web collects its money.

This year, they move from the arguably lower-rent San Francisco Concourse,

an augmented warehouse with skylights and carpeting, to the tech conference

standard of the Moscone Center. The first event in 2009 was was a sell-out

and was bursting at the seams. The step up is partly necessitated by the

fact the rooms were not big enough for most of the 2009 sessions.

This year, some of the same formula is present: a 2-day format, ultra-low

price (under $200 if you early-bird it), and major guest speakers like Tim

O'Reilly, founder and CEO of O'Reilly Media, and Marc Andreessen,

co-founder of Netscape Communications.

This year has an extra deal: a conference pass and 2 nights at the Parc 55

Hotel for only $399. And you can save $50 off that if you register by

October 3rd with code INV810.

The food and caffeine was plentiful in 2009, even though it was

mostly sandwiches, chips, and pizza - but really good pizza. They did

not take away the coffee/tea/soda between sessions and that is most

appreciated. At the Moscone Center, catering staff take away food and

drink after the allotted time has passed. Let's see if the coffee urns

stay out this year.

But why PayPal? Because of their PayPal X community which developed when

they opened up their APIs and also because PayPal is empowering the

global micro-payments industry and is experimenting with mobile phone

micro-payments to support aid and development projects in the 3rd world -

bringing hi-tech into lo-tech places. The event is in part about "crafting

the future of money, literally changing the way the world pays." That's a

very ambitious goal.

More specifically, PayPal X is an open global payments platform which

includes developer tools and resources. The developer community around

PayPal X is trying to shape the way the world uses - and thinks about - money.

Those tools can be reviewed and tested at http://x.com. They include payment

APIs, code samples, in-depth documentation, training modules, and much

more.

According to an article in the Economist magazine in 2009, the use of

mobile money via cell phone in the developing world reduces banking and

transportation costs and leads to family wealth increasing by 5-30%.

Transactions are faster, safer, and have better transaction logging. Mobile

payments are becoming the default banking system of those without banks and

credit, accelerating the movement away from cash.

There will be over 50 technical sessions for an expected 2000-2500

attendees. And at these sessions you can learn strategies for monetizing

businesses or see how others are using the PayPal APIs. Last year there

also were chalk talk sessions but they were mostly under-attended.

This link take you to the Innovate 2009 Keynotes and Breakout Sessions:

https://www.x.com/docs/DOC-1584

To get a better sense of the ad-hoc and dynamic nature of the sessions,

check out these 3 session links:

PayPal 101: Payments for Developers: https://www.x.com/videos/1036

The Intersection of Mind and Money: https://www.x.com/videos/1034

Banking the Unbanked: https://www.x.com/videos/1077

There were lots of giveaways: free PayPal Dev accounts, source code

giveaways (the shopping cart PayPal developed for Facebook), T-shirts, etc.

But the most outstanding perk for PayPal Innovate attendees was the 10 inch

ASUS 'eee-pc' netbook given to all who were at the keynote on the first

day. They did run out and gave receipts to the late claimants to get an

eee shipped to them - the luggage tag in the conference bag had a time code

which determined if you were an early bird or a late one. The netbook came

preloaded with PayPal X materials and had a PayPal sticker on the cover. I

suspect that the perks this year will be smaller as Moscone Convention

Center rates must be 2x-3x of the 2009 site. In fact, you might say the

2009 PayPal conference was free and $200-300 fee went into the perks...

no guarantees for 2010, however.

To register or find out more about the Innovate conference, visit:

https://www.paypal-xinnovate.com/index.html

Talkback: Discuss this article with The Answer Gang

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Copyright © 2010, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 179 of Linux Gazette, October 2010

A Nightmare on Tape Drive

By Henry Grebler

And in the fourth month of my new job, I encountered numerous problems

all related to backup.

Backup! My eyes glaze over. I suppose some people can get enthusiastic

about backup, but not me. Oh, sure, I understand the point of backup.

And I try very hard in my own endeavours to keep lots of backup. (What

would happen to my writing if I lost it all! Oh, the horror.)

Currently, I seem to spend about a third of my time on backup. There

are many aspects to this, and several reasons. Nonetheless, there are

many activities that are not a particularly good match for my

skill-set: operating a Dymo labelmaker; searching for tapes; opening

new tape boxes; removing shrink-wrapping; loading and unloading

magazines; searching for tape cases; loading tape cases; removing

tapes from their covers; inserting tapes into their covers; delivering

tape cases to, and retrieving them from, a nearby site.

I yearn to throw the whole lot out and start again. But, of course,

that is unthinkable.

We have 3 jukeboxes which feed 5 tape drives. About a month ago, the

PX502 started to misbehave. I started scouring documentation,

searching around in the logs and trying various commands. Somewhere I

came across this helpful message:

The drive is not ready - it requires an initialization command

Oh, really? Well, if you are so smart, send the appropriate

initialization command. Or tell me what the initialization command

looks like and ask me to issue it.

Don't you just hate that? It's on a par with those stupid messages

that compilers sometimes produce, something like:

Fatal error: extraneous comma

!! I mean, I wouldn't mind,

Warning: extraneous comma. Ignored.

But "Fatal error"?! Give me a break. That's just punitive.

It seemed to me that the aberrant behaviour was hardware-related. I

chased around to see if the unit was under maintenance. There was some

difficulty in locating the relevant paperwork, but I was given a phone

number and assured it would be OK. It wasn't really OK, but David came

out nonetheless and poked around.

It was one of those visits like when you take your car to the

mechanic - or your kids to their friends. The car and the kids are

suddenly on their best behaviour. The mechanic cannot hear any of

those ominous noises that caused so much consternation. And the other

parents report what sweet children you have.

As you drive away in frustration, the car starts its strange noises

and the kids are attempting to kill each other in the back seat.

Two days later I was back on the phone to David. He assured me that

our support was not with him but with some other crowd, but since he

had started down this path, he would continue and work out some

cross-charging with the other crowd. He told me to run some program

and send him the output.

Apparently, he sent the output on to the US and was informed that one

of the drives needed replacement. They shipped a replacement drive to

David, who came out again a few days later.

And that should have been the end of it.

But no. This is the story of a nightmare; don't look for happy

endings. The happiest part of a nightmare is when you wake up.

The software which coordinates all the backup tasks is Sun's

StorageTek EBS which is really Legato NetWorker under the covers.

After the drive swapout, the StorageTek software showed only a single

drive on the PX502.

I struggled with this for a while, believing it ought to be fairly

straightforward to solve this problem. And this is where I demonstrate

why I earn the big bucks.

When you encounter a problem like this you have the analogue of the

manufacturer's make/buy dilemma. Do you try to fix it yourself? Or do

you call in support? Before you can think about calling for support

you have to answer a few questions.

First, have you investigated the problem? If your opening gambit is,

"It doesn't work," you may find it hard to get useful help. Support

may suggest politely that you try reading the manual.

Second, is the problem hardware or software? In many cases, my answer

is that if I knew that I wouldn't be calling for support.

Nevertheless, you need to be able to engage support, get them

interested enough to want to help you. Otherwise, they'll just give

you a lot of dopey menial tasks.

Finally, do you even have support?

First, I tried the Microsoft approach: if things don't work, try