...making Linux just a little more fun!

July 2010 (#176):

- Mailbag

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Pixie Chronicles: Part 4 Kickstart, by Henry Grebler

- Tacco and the Painters (A Fable for the Nineties), by Henry Grebler

- Knoppix Boot From PXE Server - a Simplified Version for Broadcom based NICs, by Krishnaprasad K., Shivaprasad Katta, and Sumitha Bennet

- Procmail/GMail-based spam filtering, by Ben Okopnik

- Linux: The Mom & Pop's Operating System, by Anderson Silva

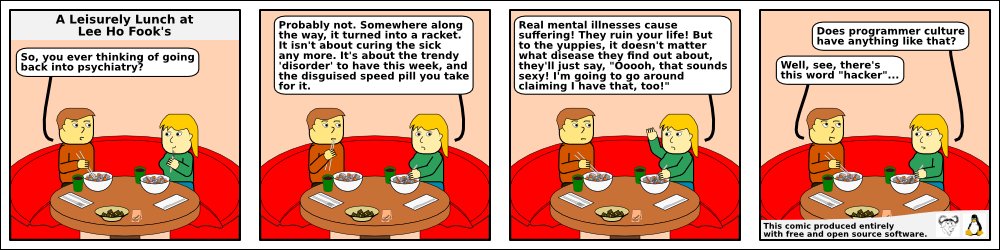

- HelpDex, by Shane Collinge

- Doomed to Obscurity, by Pete Trbovich

- The Linux Launderette

Mailbag

This month's answers created by:

[ Ben Okopnik, S. Parthasarathy, Henry Grebler, Kapil Hari Paranjape, René Pfeiffer, Mulyadi Santosa, Neil Youngman ]

...and you, our readers!

Our Mailbag

FIFO usage

Dr. Parthasarathy S [drpartha at gmail.com]

Tue, 22 Jun 2010 09:09:46 +0530

I have come across this thing called a FIFO, or named pipe. I can see how it works, but I can't find a place where I can put it to good use (in a shell script). Can someone give me a good application context where a shell script would need to use a FIFO? I need a good and convincing example to tell my students.

Thanks TAG,

partha

--

---------------------------------------------------------------------------------------------

Dr. S. Parthasarathy | mailto:drpartha at gmail.com

Algologic Research & Solutions |

78 Sancharpuri Colony |

Bowenpally P.O | Phone: + 91 - 40 - 2775 1650

Secunderabad 500 011 - INDIA |

WWW-URL: http://algolog.tripod.com/nupartha.htm

---------------------------------------------------------------------------------------------

[ Thread continues here (13 messages/23.98kB) ]
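The thread's own answers aren't reproduced here. As a minimal illustration of what a FIFO provides (this is not an example from the thread), here is a C sketch of the mechanism behind the shell's mkfifo command. A FIFO is a name in the filesystem, so two processes that share nothing but that path can pass data through it. Each side's open() blocks until the other side shows up. The scratch path /tmp/fifo_demo_<pid> and the function name fifo_roundtrip are hypothetical choices made for this sketch.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <signal.h>
#include <stdio.h>
#include <string.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Send `msg` from a child process (the writer) to its parent (the reader)
 * through a named pipe, copying what arrives into `buf` (bufsize > 0).
 * Returns the number of bytes received, or -1 on error.
 * The /tmp/fifo_demo_<pid> path is a hypothetical scratch name. */
int fifo_roundtrip(const char *msg, char *buf, size_t bufsize)
{
    char path[64];
    if (bufsize == 0)
        return -1;
    snprintf(path, sizeof path, "/tmp/fifo_demo_%ld", (long)getpid());
    unlink(path);                       /* clear out any stale leftover */
    if (mkfifo(path, 0600) != 0)        /* the FIFO is now a filesystem name */
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        unlink(path);
        return -1;
    }
    if (pid == 0) {                     /* child: the writer */
        int wfd = open(path, O_WRONLY); /* blocks until a reader opens it */
        if (wfd < 0)
            _exit(1);
        ssize_t w = write(wfd, msg, strlen(msg));
        close(wfd);                     /* last writer closing = EOF for reader */
        _exit(w < 0 ? 1 : 0);
    }

    /* parent: the reader */
    int rfd = open(path, O_RDONLY);     /* blocks until the writer opens it */
    size_t total = 0;
    if (rfd >= 0) {
        ssize_t n;
        while (total + 1 < bufsize &&
               (n = read(rfd, buf + total, bufsize - 1 - total)) > 0)
            total += (size_t)n;
        close(rfd);
    } else {
        kill(pid, SIGTERM);             /* don't leave the writer blocked */
    }
    buf[total] = '\0';
    waitpid(pid, NULL, 0);
    unlink(path);                       /* a FIFO is not removed automatically */
    return rfd >= 0 ? (int)total : -1;
}
```

In shell terms, the same arrangement is a mkfifo followed by one command writing to the name in the background while another command reads from it. The data passes between the two processes without ever landing in a regular file on disk.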

2-cent tip: A safer 'rm'

Henry Grebler [henrygrebler at optusnet.com.au]

Sun, 20 Jun 2010 15:45:14 +1000

Hi,

[I wrote what follows nearly a month ago. Since I started working

full-time-plus, I have not been able to respond as promptly as I would

prefer. For many, I suspect, the subject has gone cold. Nevertheless,

...]

As often happens, I have not phrased myself well. [sigh]

I apologise for trying to respond quickly, when clearly a fuller and

more considered response was indicated.

Let me try again.

As I understand it, this is the original statement of the problem:

If you've ever tried to delete Emacs backup files with

rm *~

(i.e. remove anything ending with ~), but you

accidentally hit Enter before the ~ and did "rm *", ...

Silas then goes on to suggest a solution.
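The whole accident comes down to one missing character in a glob pattern. A small C sketch using the POSIX fnmatch() function, which applies shell-style wildcard rules, makes the difference concrete. The helper names glob_matches and count_matches are hypothetical. The sketch only approximates what the shell does when it expands the pattern before rm ever runs.

```c
#define _POSIX_C_SOURCE 200809L
#include <fnmatch.h>

/* Return 1 if `name` matches the shell-style `pattern`, else 0.
 * FNM_PERIOD makes a leading '.' require an explicit match, as the
 * shell's own globbing does by default, so "*" skips dotfiles. */
int glob_matches(const char *pattern, const char *name)
{
    return fnmatch(pattern, name, FNM_PERIOD) == 0;
}

/* Count how many of the `n` names the pattern would hand to rm. */
int count_matches(const char *pattern, const char *const *names, int n)
{
    int hits = 0;
    for (int i = 0; i < n; i++)
        hits += glob_matches(pattern, names[i]);
    return hits;
}
```

Take a directory holding notes.txt, notes.txt~, report.tex, report.tex~ and .emacs~. The pattern *~ selects the two non-hidden backups, while * selects all four non-hidden files, including the originals.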

Perhaps I took the problem statement too literally. When I wrote

cleanup.sh, it was aimed exclusively at emacs backup files. When I

talked about it, I was thinking exclusively of emacs backup files. I

am not aware of any other place where tildes are used.

If it's a file I've been editing with emacs, I've usually created it.

So I get to choose its name.

The message I am trying to convey to TAG's broad readership is this.

If you are happy with Silas's solution, that's fine. If you are happy

with any of the other solutions provided, that's fine too. I myself

have felt the need to deal with this problem. I offer my approach to

dealing with emacs backup files for your consideration.

Part of my approach is the cleanup script; this is the technical

outcome, the tangible I can offer to others.

More important is a holistic approach to the problem as originally

stated. I took it to mean that certain behaviours are more risky than

others. This is again in the eyes of the beholder. Some people always

alias 'rm' to 'rm -i'. If they do this, they will always be prompted

before deleting any file. If they specify multiple files, there will

be a prompt for each file individually.

Such an approach would have saved Silas.

Again, if such an approach appeals to you, by all means adopt it.

It seems to me ill-advised to adopt a safe behaviour in one

circumstance and a risky behaviour in another. The nett effect will

not be as safe as your safest behaviour, but rather as risky as your

riskiest behaviour. (A chain is no stronger than its weakest link.)

So, first of all, according to my philosophy, don't go creating files

with dangerous characters in them. It's really not important to me to

call a file 'a b' when I can just as easily call it 'a_b'. If I

download a file with spaces in the filename AND I plan to edit it with

emacs, it's worth renaming the file, replacing spaces with underscores

(or any other innocuous character).
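This renaming habit is easy to mechanize. The following C sketch rewrites a filename in place so that anything outside a conservative character set becomes an underscore. The function name sanitize_name and the choice of "safe" characters are assumptions made for illustration. This is not Henry's cleanup.sh.

```c
#include <ctype.h>
#include <string.h>

/* Rewrite `name` in place, replacing every character outside a
 * conservative set (letters and digits in the default "C" locale,
 * plus '.', '_' and '-') with '_'. The "safe" set is an assumption. */
void sanitize_name(char *name)
{
    for (char *p = name; *p != '\0'; p++) {
        unsigned char c = (unsigned char)*p;
        if (!isalnum(c) && strchr("._-", c) == NULL)
            *p = '_';
    }
}
```

With this function, 'a b' becomes 'a_b'. Actually renaming the file would then be a matter of passing the old and new names to the standard rename() function.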

And I am strongly recommending this behaviour of mine to others.

If you live largely in a Linux world, you should rarely encounter

filenames containing dangerous characters. If you are creating files,

you can choose to avoid dangerous characters. Or not. It's up to you.

[ ... ]

[ Thread continues here (2 messages/9.65kB) ]

Face detection in Perl

Jimmy O'Regan [joregan at gmail.com]

Sun, 27 Jun 2010 18:46:50 +0100

Since I got commit access to Tesseract, I've been getting a little

more interested in image recognition in general, and I was pleased to

find a Java-based 'face annotation' system on SourceForge:

http://faint.sourceforge.net

The problem is, it doesn't support face detection on Linux, but it

does have a relatively straightforward way of annotating the image

using XMP tags. Perl to the rescue - there's a module called

Image::ObjectDetect on CPAN... it's a pity the example in the POD is

incorrect.

Here's a correct example that generates a simple HTML map (and nothing else):

#!/usr/bin/perl
use warnings;
use strict;
use Image::ObjectDetect;

my $cascade = '/usr/share/opencv/haarcascades/haarcascade_frontalface_alt2.xml';
my $file = shift or die "Usage: $0 <image-file>\n";
my @faces = Image::ObjectDetect::detect_objects($cascade, $file);
my $count = 1;

print "<map name='mymap'>\n";
for my $face (@faces) {
    # HTML rect areas take corner coordinates: left, top, right, bottom
    print "  <area shape='rect' alt='map$count' href='http://www.google.com' coords='";
    print $face->{x}, ", ";
    print $face->{y}, ", ";
    print $face->{x} + $face->{width}, ", ";
    print $face->{y} + $face->{height}, "'/>\n";
    $count++;    # give each area its own alt text
}
print "</map>\n";
# usemap takes a fragment reference, so the map name needs a leading '#'
print "<img src='$file' usemap='#mymap'/>\n";

(To install Image::ObjectDetect, you first need OpenCV. On Debian, that's

apt-get install libcv-dev libcvaux-dev libhighgui-dev
cpan Image::ObjectDetect

and you're done.)

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

[ Thread continues here (4 messages/6.48kB) ]

Testing new anti-spam system

Ben Okopnik [ben at linuxgazette.net]

Sun, 6 Jun 2010 13:31:12 -0400

From: John Hedges <[email protected]>

To: TAG <[email protected]>

Subject: RE: Testing new anti-spam system

Hi Ben

I've read with interest the thread on C/R antispam technique.

The Gmail solution sounds promising except in one use case: where you open an account through a website. Unfortunately, this often results in a confirmation email - sometimes even with a username and password - exactly the kind of information you would not want sniffed. One solution, as suggested by Martin Krafft (http://madduck.net/blog/2010.02.09:sign-me-up-to-social-networking/), is to use a unique receiver address for these signups, such as myname-googlesignup at mydomain.co.uk. You can whitelist that address in anticipation of a response, with the added advantage that you can blacklist it in the future if it gets abused.
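Below is a minimal C sketch of the idea. It assumes the "name-tag@domain" form from the example address above, with '-' as the separator (some mail setups use '+' instead). It also assumes that the whitelist and blacklist are simple lists of tags. The helpers extract_tag and tag_allowed are hypothetical. They are not part of Martin Krafft's setup or of any particular mail filter.

```c
#include <stddef.h>
#include <string.h>

/* Pull the tag out of an address like "myname-googlesignup@mydomain.co.uk":
 * the text after the first '-' in the local part, up to the '@'.
 * Returns 1 and stores the tag in `tag` on success, 0 otherwise. */
int extract_tag(const char *addr, char *tag, size_t tagsize)
{
    const char *at = strchr(addr, '@');
    if (at == NULL)
        return 0;
    const char *dash = memchr(addr, '-', (size_t)(at - addr));
    if (dash == NULL || dash + 1 == at)
        return 0;                       /* no tag, or an empty one */
    size_t len = (size_t)(at - (dash + 1));
    if (len + 1 > tagsize)
        return 0;                       /* tag doesn't fit in the buffer */
    memcpy(tag, dash + 1, len);
    tag[len] = '\0';
    return 1;
}

/* NULL-terminated lists; the blacklist wins over the whitelist. */
int tag_allowed(const char *tag, const char *const *whitelist,
                const char *const *blacklist)
{
    for (; *blacklist != NULL; blacklist++)
        if (strcmp(tag, *blacklist) == 0)
            return 0;
    for (; *whitelist != NULL; whitelist++)
        if (strcmp(tag, *whitelist) == 0)
            return 1;
    return 0;                           /* unknown tags aren't pre-approved */
}
```

When a signup address starts attracting spam, moving its tag from the whitelist to the blacklist is enough to cut it off, and no other address is affected.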

Please share with the list if you see fit.

I've enjoyed LG for many years, thanks.

John Hedges

Error when execute cgate_migrate_ldap.pl

Ben Okopnik [ben at linuxgazette.net]

Fri, 4 Jun 2010 08:39:06 -0400

On Fri, Jun 04, 2010 at 12:22:51PM +0800, bayu ramadhan wrote:

> dear linuxgazette,

>

> i've read about migrating mail server to postfix/cyrus/openldap, , ,

>

> i've tried u'r tutorial, , ,

Who's "u'r"? I'm pretty sure it was Rene Pfeiffer who wrote that

article, not "u'r".

HINT: please use standard English, and preferably standard punctuation

as well. As you already know, your English isn't of the best; please

don't make it any more difficult by adding more levels of obscurity to

it.

> but i've some error when execute the perl script,,

>

> i've attach the screenshoot of the error and the script, , ,

Next time, please just copy and paste the error. It's plain text, so

there's no need for screenshots.

The error - which I've had to transcribe from your screenshot (an

unnecessary waste of time) - was

Communications Error at ./cgate_migrate_ldap.pl line 175, <DATA> line 225.

Taking a look at line 175 of the script points to a likely cause of the problem:

173 --> # Bind to servers

174 --> $mesg = $ldap_source->bind($binddn_source, password => 'ldapbppt');

175 --> $mesg->code && die $mesg->error;

That is, you're trying to bind to a server using the same password that

Rene had used in his article. Unless you're using that password, the

connection is going to fail.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (2 messages/3.37kB) ]

mbox selective deletion

Ben Okopnik [ben at okopnik.com]

Sun, 27 Jun 2010 14:20:44 -0400

Hi, Evaggelos -

On Sun, Jun 27, 2010 at 08:44:01PM +0300, Evaggelos Balaskas wrote:

> Hi,

>

> First of all let me introduce my self:

>

> My name is: Evaggelos Balaskas, or ebal for short, and i dont know perl!

Welcome! That's a good Alcoholics-Anonymous-style introduction.

> I wanted to add many subjects to my search/clear function,

> so i used a list.

Not a bad idea so far...

> while ( my $msg = $mb->next_message ) {
>     my $flag = 0;
>     my $s = $msg->header->{subject};
>
>     if ( !$s ) {
>         $s = "empty_subject";
>     }

If you're trying to set a default value for a variable, Perl has a nifty

way to do that by using "reflexive assignment":

$s ||= "empty_subject";

This essentially says "if $s is true - i.e., it is defined and is neither an empty string nor "0" - then leave it alone; otherwise, assign this value."

>     foreach (@subjects) {
>         if ( $s =~ $_ ) {

Although that will work, it's not the clearest way to write it. The binding operator (=~) is normally paired with the match operator (/foo/ or m/foo/). When you omit the latter, Perl quietly treats whatever expression is on the right as a pattern to be compiled at run time. That's legal, but it's easy to misread. Note that you can't simply drop the $_ here and write /$s/ either: $_ is the default target of a match, not the default pattern, so that would test each list entry against $s as a pattern, which is the reverse of what you want. Spelled out explicitly, the test is

if ( $s =~ /$_/ ) {

and that reads exactly the way it works.

However, there's a larger problem here: you don't need to build a state

machine to determine whether your messages contain one of the above

subjects - you can just use 'grep', or (my preferred method) a hash

lookup. I also noticed that you don't use the default value in "$s"

anywhere after defining it - and if you're just throwing it away, you

don't need to define it in the first place.

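# Using grep
my @subjects = ("aaaaaa", "bbbbbb", "ccccc", "ddddd");
while ( my $msg = $mb->next_message ) {
    # Method calls don't interpolate inside a regex, so fetch the subject first
    my $s = $msg->header->{subject} || "";
    print "$msg\n" unless grep { $s =~ /$_/ } @subjects;
}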

Alternate method, faster lookup with less grinding:

# Using a hash lookup
my %subjects = map { $_ => 1 } ("aaaa", "bbbb", "cccc", "dddd");
while ( my $msg = $mb->next_message ) {
    print "$msg\n" unless defined $subjects{$msg->header->{subject}};
}

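This latter version will also deal gracefully with undefined subject lines. Keep in mind, though, that it only catches subjects that are exactly equal to one of the keys, while the grep version catches a pattern anywhere in the subject.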
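The grep and hash versions don't test quite the same thing, and a C sketch makes the difference visible. The grep version looks for each pattern anywhere in the subject. The hash version only succeeds when the whole subject equals a key. In the sketch, strstr() stands in for Perl's regex match, so regex metacharacters get no special meaning. Standard C has no built-in hash table, so bsearch() over a sorted array stands in for the hash. Both substitutions are assumptions of this sketch, and the function names are hypothetical.

```c
#include <stdlib.h>
#include <string.h>

/* grep-style: true if any of the n patterns occurs anywhere in subject.
 * strstr() is a plain substring search standing in for a regex match. */
int matches_any_substring(const char *subject,
                          const char *const *patterns, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (strstr(subject, patterns[i]) != NULL)
            return 1;
    return 0;
}

/* Comparison callback for bsearch(): both arguments point at char pointers. */
static int compare_strings(const void *a, const void *b)
{
    return strcmp(*(const char *const *)a, *(const char *const *)b);
}

/* hash-style: true only if the whole subject equals one of the n keys.
 * bsearch() over a strcmp-sorted array stands in for Perl's hash lookup. */
int matches_exactly(const char *subject,
                    const char *const *sorted_keys, size_t n)
{
    return bsearch(&subject, sorted_keys, n, sizeof *sorted_keys,
                   compare_strings) != NULL;
}
```

A subject such as "Re: aaaa offer" is caught by the substring test but not by the exact lookup. If partial matches matter to you, the grep version is the one to keep.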

> PS: Plz forgive me for my bad english - not my natural language.

[smile] I'll be sure to "forgive" you - as soon as my Greek is as good

as your English (which, incidentally, is excellent as far as I can see.)

Again, welcome!

--

OKOPNIK CONSULTING

Custom Computing Solutions For Your Business

Expert-led Training | Dynamic, vital websites | Custom programming

443-250-7895 http://okopnik.com

Sierpiński triangle

Jimmy O'Regan [joregan at gmail.com]

Fri, 18 Jun 2010 14:48:14 +0100

A friend just sent me a Sierpiński triangle generator in 14 lines of C++ (http://codepad.org/tD7g8shT); my response is 11 lines of C:

#include <stdio.h>

int main() {
    int a, b;
    for (a = 0; a < 64; ++a) {
        for (b = 0; b < 64; ++b)
            printf("%c ", ((a + b) == (a ^ b)) ? '#' : ' ');
        printf("\n");
    }
    return 0;
}

...but I'm wondering if Ben has a Perl one-liner?

(BTW, CodePad is awesome!)

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

[ Thread continues here (15 messages/15.96kB) ]
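Why does (a + b) == (a ^ b) draw a Sierpiński triangle? Addition is XOR plus carries: a + b equals (a ^ b) + 2 * (a & b), so the two sides agree exactly when a and b share no set bits. By Kummer's theorem, that "no carries" condition also holds exactly when the binomial coefficient C(a + b, a) is odd, which is Pascal's triangle taken mod 2. The C sketch below, whose helper names are hypothetical, checks the equivalence and builds one row using the cheaper a & b test.

```c
#include <stdio.h>

/* For small non-negative values, (a + b) == (a ^ b) holds exactly when
 * adding a and b produces no carries, i.e. when (a & b) == 0. */
int sierpinski_cell(unsigned a, unsigned b)
{
    return (a & b) == 0;
}

/* Fill `row` (at least n + 1 bytes) with row `a` of an n-column picture:
 * '#' where the cell is set, ' ' elsewhere, then a terminating NUL. */
void sierpinski_row(unsigned a, unsigned n, char *row)
{
    for (unsigned b = 0; b < n; b++)
        row[b] = sierpinski_cell(a, b) ? '#' : ' ';
    row[n] = '\0';
}
```

Jimmy's version prints a space after each cell, which squares up the picture in a terminal. The row helper here leaves that space out.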

Knowing from what pc a certain file came

Deividson Okopnik [deivid.okop at gmail.com]

Mon, 21 Jun 2010 11:49:03 -0300

Hello TAG,

I have an Ubuntu Linux machine here that's got a Samba shared folder, writable by anyone.

Is there any way I can find out which IP a certain file came from? One of the windoze machines on my network is spreading a virus, and I can't figure out which machine it is. It creates an autorun.inf and a .exe in my Ubuntu shared folder; that's why I want to figure out where it came from.

Thanks,

Deividson

[ Thread continues here (4 messages/5.60kB) ]

Published in Issue 176 of Linux Gazette, July 2010

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to [email protected]. Deividson can also be reached via twitter.

News in General

Novell wins Final Linux Legal Battle

After years of litigation, all of SCO's claims have been denied, and SCO will now go into bankruptcy hearings in July; those hearings had been delayed until the final judgment.

As noted on the Groklaw web site, Judge Ted Stewart ruled for Novell and against SCO. Furthermore, SCO's claims for breach of the implied covenant of fair dealings were also denied in the June judgment.

Here is the major part of Novell's statement from June 11:

"Yesterday, United States District Court Judge Ted Stewart issued a

Final Judgment regarding the long standing dispute between SCO Group

and Novell. As part of the decision, the Court reaffirmed the earlier

jury verdict that Novell maintained ownership of important UNIX

copyrights, which SCO had asserted to own in its attack on the Linux

computer operating system. The Court also issued a lengthy Findings of

Fact and Conclusions of Law wherein it determined that SCO was not

entitled to an order requiring Novell to transfer the UNIX copyrights

because "Novell had purposely retained those copyrights." In addition,

the Court concluded that SCO was obligated to recognize Novell's

waiver of SCO's claims against IBM and other companies, many of whom

utilize Linux."

One Laptop per Child and Marvell To Redefine Tablet Computing

In an agreement that could provide advanced, affordable tablet

computers to classrooms throughout the world, One Laptop per Child

(OLPC), a global organization helping to provide every child in the

world access to a modern education, and Marvell, a leader in

integrated silicon solutions, will jointly develop a family of

next-generation OLPC XO tablet computers based on the Marvell Moby

reference design. This new partnership will provide designs and

technologies to enable a range of new educational tablets, delivered

by OLPC and other education industry leaders, aimed at schools in both

the U.S. and developing markets. Marvell is also announcing today it

has launched Mobylize, a campaign to improve technology adoption in

America's classrooms.

The new family of XO tablets will incorporate elements and new

capabilities based on feedback from the nearly 2 million children and

families around the world who use the current XO laptop. The XO

tablet will require approximately one watt of power to operate

compared to about 5 watts necessary for the current XO laptop. The XO

tablet will also feature a multi-lingual soft keyboard with touch

feedback, and will also feature an application to directly access more

than 2 million free books available across the Internet.

The first tablets in the line will be based closely on the Moby, not

the XO-3 design, and will focus more on children in the developed world.

These will be on display at CES 2011 in January, and available next

year for under $100. The original XO-3 design is still planned for

2012 and will benefit from the experience of both the XO-1.75 and the

Moby efforts.

"The Moby tablet platform - and our partnership with OLPC - represents

our joint passion and commitment to give students the power to learn,

create, connect and collaborate in entirely new ways," said Weili Dai,

Marvell's Co-founder and Vice President and General Manager of the

Consumer and Computing Business Unit. "Marvell's cutting edge

technology - including live content, high quality video (1080p full-HD

encode and decode), high performance 3D graphics, Flash 10 Internet

and two-way teleconferencing - will fundamentally improve the way

students learn by giving them more efficient, relevant - even fun -

tools to use. Education is the most pressing social and economic

issue facing America. I believe the Marvell Moby tablet can ignite a

life-long passion for learning in all students everywhere."

Powered by a high-performance, highly scalable, and low-power Marvell

ARMADA 610 application processor, the Moby tablet features gigahertz

processor speed, 1080p full-HD encode and decode, intelligent power

management, power-efficient Marvell 11n Wi-Fi/Bluetooth/FM/GPS

connectivity, high performance 3D graphics and support for multiple

platforms including full Adobe Flash, Android, Windows Mobile and

Ubuntu. The Moby platform also features a built-in camera for live

video conferencing, multiple simultaneous viewing screens and

Marvell's 11n Mobile Hotspot which allows Wi-Fi access that supports

up to eight concurrent users connected to the Internet via a cellular

broadband connection. The ultra low power mobile tablet has a very

long battery life.

Moby is currently being piloted in at-risk schools in Washington, DC,

and Marvell is investing in a Mobylize campaign to improve tech

adoption within US classrooms. This should help OLPC in the developed

world.

For more information, visit http://www.mobylize.org.

One Laptop Per Child To Provide Laptops To High Schools

In June, One Laptop per Child (OLPC) was awarded a bid by Plan Ceibal

to provide 90,000 updated XO laptops for high school students in

Uruguay. This is the first time the OLPC XO laptops have been

specifically designed for high school-aged students and represents a

major expansion in its global learning program.

The XO high school laptop (XO-H) is built on the XO-1.5 platform and

based on a VIA processor. It will provide 2X the speed of the XO 1.0,

4X DRAM memory, and 4X Flash memory. The XO-H is designed with a larger

keyboard that is better suited to the larger hands and fingers of older

students. It will feature the learning-focused Sugar user interface on

top of a dual-boot Linux operating system, with Gnome Desktop

Environment that offers office productivity tools.

The XO-H will be delivered with age-appropriate learning programs

adapted to the scholastic needs of secondary schools. A new color

variation for the laptop's case (light and dark blue) will be an

option for the high school model. The XO, specially designed for

rugged environments, is well-suited for remote classrooms and daily

transportation between home and school. The XO uses about a third of

the electricity of other laptops and is built as a sealed, dust-free

system.

The government of Uruguay through Plan Ceibal has completely saturated

primary schools with 380,000 XOs and will now begin to expand the

highly successful One Laptop per Child learning program to its high

schools. There are 230,000 high school students in Uruguay.

"Until now, the 1.5 million students worldwide using XO laptops had no

comparable computer to 'grow up' to," said OLPC Association CEO

Rodrigo Arboleda. "The XO high school edition laptop demonstrates how

the XO and its software can easily adapt to the needs of its users."

One Laptop per Child (OLPC at http://www.laptop.org) is a non-profit

organization created by Nicholas Negroponte and others from the MIT

Media Lab to design, manufacture and distribute laptop computers that

are inexpensive enough to provide every child in the world access to

knowledge and modern forms of education.

Swype Beta for Android OS

Swype is offering a narrowcast Beta on its updated Android keyboard

application. This application interprets word choices in real time

from a dictionary database as a user slides a finger between letters

in a continuous motion.

The Swype software is very tightly written with a total memory

footprint of under 1 MB.

The patented technology enables users to input words faster and more easily

than other data input methods - over 50 words per minute. The

application is designed to work across a variety of devices such as

phones, tablets, game consoles, kiosks, televisions, virtual screens

and more.

A key advantage to Swype is that there is no need to be very accurate,

enabling very rapid text entry. Swype has been designed to run in

real time on relatively low-powered portable devices.

The beta software has built-in dictionaries for English, Spanish, and

Italian. The beta software supports phones with HVGA (480h x 320w),

WVGA (800h x 480w) and WVGA854 (854h x 480w) screen sizes (screen size

is detected by the software).

Here are some details on the beta:

* It will be open for a limited time;

* Initially, only English, Spanish, and Italian - more languages to

come;

* Some key features of Swype require OEM integration;

* Limited End User Support - mostly forum.

If your phone came pre-installed with Swype, DO NOT download this beta

(it won't work).

The beta is open to all phones using Android which do not already have

Swype pre-installed. For more information on Swype and the current

beta test, go to: http://beta.swype.com/.

OpenOffice.org 3.2.1 released

The OpenOffice.org Community recently released OpenOffice.org 3.2.1,

the newest version of the world's free and open-source office

productivity suite.

OpenOffice.org 3.2.1 is a so-called micro release that comes with

bugfixes and improvements, with no new features being introduced. This

release also fixes major security issues, so users are encouraged to

upgrade to the new version as soon as possible.

This version is the first to be released with the project's new main

sponsor, Oracle, and comes with a refreshed logo and splash screen.

Following OpenOffice.org's usual release cycle, the next feature

release of OpenOffice.org is version 3.3, expected in Autumn 2010.

The OOo Community celebrates its 10th anniversary this year at the

annual OOoCon in Budapest, Hungary, from August 31 to September 3.

OpenOffice.org 3.2.1 is available in many languages for all major

platforms at: http://download.openoffice.org.

Linux Foundation Announces LinuxCon Brazil

Linus Torvalds will speak at the first-ever LinuxCon Brazil, where the

country's developer, IT operations, and business communities will come

together. LinuxCon Brazil will take place August 31 - September 1,

2010 in São Paulo, Brazil.

Brazil has long been recognized as one of the fastest growing

countries for Linux adoption. The Brazilian government was one of the

first to subsidize Linux-based PCs for its citizens with PC Conectado,

a tax-free computer initiative launched in 2003. Brazil's active and

knowledgeable community of Linux users, developers and enterprise

executives brings an important perspective to the development process

and to the future of Linux.

"Brazil leads many other countries in its adoption of Linux and is a

growing base of development. The time is right to take the industry's

premier Linux conference to Brazil," said Jim Zemlin, executive

director at The Linux Foundation.

Confirmed speakers for LinuxCon Brazil include Linux creator Linus

Torvalds and lead Linux maintainer Andrew Morton, who will together

deliver a keynote about the future of Linux.

Conferences and Events

- CiscoLive! 2010
  June 27-July 1, Mandalay Bay, Las Vegas, NV
  http://www.ciscolive.com/attendees/activities/

- SANS Digital Forensics and Incident Response Summit
  July 8-9, 2010, Washington, DC
  http://www.sans.org/forensics-incident-response-summit-2010/agenda.php

- GUADEC 2010
  July 24-30, 2010, The Hague, Netherlands

- Black Hat USA
  July 24-27, Caesars Palace, Las Vegas, NV
  http://www.blackhat.com/html/bh-us-10/bh-us-10-home.html

- DebConf 10
  August 1-7, New York, NY
  http://debconf10.debconf.org/

- First Splunk Worldwide Users' Conference
  August 9-11, 2010, San Francisco, CA
  http://www.splunk.com/goto/conference

- LinuxCon 2010
  August 10-12, 2010, Renaissance Waterfront, Boston, MA
  http://events.linuxfoundation.org/events/linuxcon

- USENIX Security '10
  August 11-13, Washington, DC
  http://usenix.com/events/sec10

- VMworld 2010
  August 30-September 2, San Francisco, CA
  http://www.vmworld.com/index.jspa

- LinuxCon Brazil
  August 31-September 1, 2010, São Paulo, Brazil
  http://events.linuxfoundation.org/events/linuxcon-brazil

- OOoCon 2010
  August 31-September 3, Budapest, Hungary
  http://www.ooocon.org/index.php/ooocon/2010

- Ohio LinuxFest 2010
  September 10-12, Ohio
  http://www.ohiolinux.org/

- Oracle OpenWorld 2010
  September 19-23, San Francisco, CA
  http://www.oracle.com/us/openworld/

- Brocade Conference 2010
  September 20-22, Mandalay Bay, Las Vegas, NV
  http://www.brocade.com/conference2010

- StarWest 2010
  September 26-October 1, San Diego, CA
  http://www.sqe.com/starwest/

- LinuxCon Japan 2010
  September 27-29, Roppongi Academy, Tokyo, Japan
  http://events.linuxfoundation.org/events/linuxcon-japan/

Distro News

Novell Releases SUSE Linux Enterprise 11 Service Pack 1

Novell released SUSE Linux Enterprise 11 Service Pack 1 (SP1) in May,

which delivers broad virtualization capabilities, high availability

clustering, and more flexible maintenance and support options.

SUSE Linux Enterprise 11 SP1 is optimized for physical, virtual, and

cloud infrastructures and offers numerous advancements, including:

Broad virtualization support, including the latest Xen 4.0 hypervisor

with significantly improved virtual input/output performance, support

for KVM, an emerging open source virtualization hypervisor, and Linux

integration components in Hyper-V - an industry first.

Best open source high-availability solution, with clustering advances

such as support for metro area clusters, simple node recovery with

ReaR, an open source disaster recovery framework, and new administrative

tools including a cluster simulator and web-based GUI.

First enterprise Linux distribution with an updated 2.6.32 kernel,

which leverages the RAS features in Intel Xeon processor 7500 and 5600

series.

New technology on the desktop, including improved audio and Bluetooth

support, as well as the latest versions of Firefox, OpenOffice.org and

Evolution, which includes MAPI enhancements for improved

interoperability with Microsoft Exchange.

With SP1, Novell is implementing more flexible support options that

will allow customers to remain on older package releases during the

product's life cycle, and will significantly lower the hurdle to

deploy upcoming service packs. While current releases deliver the most

value with proactive maintenance updates and patches, for customers

that place a premium on stability, this program delivers superior

control and flexibility by allowing them to decide when and how to

upgrade.

Long Term Service Pack Support will continue to be available for

customers that want full technical support and fixes backported to

earlier releases.

IBM and other component, system, and software vendors like AMD,

Broadcom, Brocade, Dell, Emulex, Fujitsu, HP, Intel, LSI, Microsoft,

nVidia, QLogic and SGI leverage Partner Linux Driver Program tools to

support their third-party technology with SUSE Linux Enterprise.

Novell's partners provide more than 5,000 certified applications today

for SUSE Linux Enterprise, twice that of the next closest enterprise

Linux distribution provider.

Software and Product News

Opera 10.6 Beta offers more HTML 5 features

A new beta version of the popular Opera web browser is available for

testing, with added support for new HTML 5 features. Opera 10.6 will include

support for HTML 5 video and the WebM codec that Google released

as open source last month. It also includes support for the AppCache

feature of HTML 5 that allows web-based applications to run even when

a computer is not connected to the Internet.

Opera 10.6 is also faster than Opera 10.5 on standard web tests and is

informally reported by testers to edge out the current versions of

Firefox and Chrome. Additionally, there are enhancements to the menus

and tab previews.

There is also support for Geolocation applications. Opera allows you

to share your location with apps like Google Maps and can find your

location on mobile devices with a GPS.

Download the 10.6 beta for Linux and FreeBSD here:

http://www.opera.com/browser/next/.

Apache Announces Cassandra Release 0.6 distributed data store

The Apache Software Foundation (ASF) - developers, stewards, and

incubators of 138 Open Source projects - has announced Apache

Cassandra version 0.6, the Project's latest release since its

graduation from the ASF Incubator in February 2010.

Apache Cassandra is an advanced, second-generation "NoSQL" distributed

data store that has a shared-nothing architecture. The Cassandra

decentralized model provides massive scalability, and is highly

available with no single point of failure even under the worst

scenarios.

Originally developed at Facebook and submitted to the ASF Incubator in

2009, the Project has added more than a half-dozen new committers, and

is deployed by dozens of high-profile users such as Cisco WebEx,

Cloudkick, Digg, Facebook, Rackspace, Reddit, and Twitter, among

others.

"The services we provide to customers are only as good as the systems

they are built on," said Eric Evans, Apache Cassandra committer and

Systems Architect at The Rackspace Cloud. "With Cassandra, we get the

fault-tolerance and availability our customers demand, and the

scalability we need to make things work."

Cassandra 0.6 features include:

* Support for Apache Hadoop: this allows running analytic queries with

the leading map/reduce framework against data in Cassandra;

* Integrated row cache: this eliminates the need for a separate

caching layer, thereby simplifying architectures;

* Increased speed: this builds on Cassandra's ability to process

thousands of writes per second, allowing applications to cope with

increasing write loads.

"Apache Cassandra 0.6 is 30% faster across the board, building on our

already-impressive speed," said Jonathan Ellis, Apache Cassandra

Project Management Committee Chair. "It achieves scale-out without

making the kind of design compromises that result in operations teams

getting paged at 2 AM."

Twitter switched to Apache Cassandra because it can run on large

server clusters and is capable of taking in very large amounts of data

at a time. Storage Team Technical Lead Ryan King explained, "At

Twitter, we're deploying Cassandra to tackle scalability, flexibility

and operability issues in a way that's more highly available and cost

effective than our current systems."

Released under the Apache Software License v2.0, Apache Cassandra 0.6

can be downloaded at http://cassandra.apache.org/.

Primal Launches Semantic Web Tools


Paving the way to a Web 3.0 Internet, Primal announced its Primal Thought Networking platform and a beta version of its newest service, Pages, at the 2010 Semantic Technology Conference in June.

Delivered on the Software as a Service (SaaS) model, the Primal

Thought Networking platform, using semantic synthesis, helps content

producers to expand their digital footprint and improve engagement

with their consumers, at lower cost. For consumers, the platform

delivers a more personalized experience that is based on content

relevant to their thoughts and ideas. Rather than sifting through page

after page of content, the Thought Networking platform's software

assistants automate the filtering processes, delivering more relevant

content.

In addition to the launch of the Thought Networking platform, Primal

also introduced the beta version of its newest offering, Pages. With

Pages, users can now quickly generate an entire Web presence based on a

topic relevant to their interests, which then may be explored,

customized and shared with friends or the general public. The Pages

beta website generator utilizes Semantic Web technology that goes

beyond the simple facilitation of content discovery, pulling

information from sites like Wikipedia, Yahoo!, and Flickr, delivering

relevant and useful content in a centralized view in real-time.

"Semantic synthesis is the ability to create in real time a semantic

graph of what I need. With our growing portfolio of IP and patents,

we have spent the past several years developing an intuitive

technology that annotates a person's thoughts and ideas," said

Primal's Founder and Co-President Peter Sweeney, speaking live from

the Semantic Technology Conference. "On the content manufacturing plane,

we provide content developers with a completely new market of content

but will also enable an entirely untapped group of people who would

not otherwise have the technical aptitude, but have big ideas to

share, to quickly and easily become content developers."

Using Primal's semantic synthesis technology, teachers will be able to

seamlessly build a website of course materials for their students;

hobbyists can create a one-stop, definitive source of information for

their pastime; politicians can build hyper-local, targeted sites

featuring local activities and news in support of their constituents.

Join the Primal community at http://www.primal.com or on Facebook at

http://www.facebook.com/primalfbook.

TRENDnet offers First 450Mbps Wireless N Gigabit Router


TRENDnet announced in June a first-to-market 450Mbps Wireless N

gigabit router, its model TEW-691GR. Designed for high performance and

quality of service, this router uses three external antennas to

broadcast on the 2.4GHz spectrum.

Three spatial streams across the three antennas produce a record 450Mbps theoretical

wireless throughput. Multiple Input Multiple Output (MIMO) antenna

technology boosts wireless coverage, signal strength, and throughput

speed. One gigabit Wide Area Network port and four gigabit Local Area

Network ports also offer very fast wired throughput performance.

Wi-Fi Protected Setup (WPS) connects other WPS-capable wireless adapters at the touch of a button. Instead of entering complicated encryption codes, simply press the WPS button on the TEW-691GR, then press the WPS button on a compatible wireless adapter, confirm you would like to connect, and the devices automatically exchange information and connect. The router is compatible with IEEE 802.11n and backward compatible with IEEE 802.11g/b devices.

Advanced wireless encryption and a secure firewall offer protection

for digital networks. Router setup is fast and intuitive. WMM Quality

of Service (QoS) technology prioritizes gaming, Internet calls, and

video streams.

"TRENDnet's ability to launch this ground breaking 450Mbps product

ahead of other brands says a lot about our recent growth," stated Pei

Huang, President and CEO of TRENDnet. "We are ecstatic to set a new

performance threshold in the consumer wireless revolution."

The MSRP for the TEW-691GR is U.S. $199.00.

TRENDnet Launches Two High Speed USB 3.0 Adapters


TRENDnet in June announced the availability of adapter cards that add USB 3.0 ports to both desktops and laptops. The adapters add two SuperSpeed USB 3.0 ports that can transfer content from USB 3.0 storage devices, flash drives, and camcorders at up to 5Gbps, roughly 10 times faster than USB 2.0 (480Mbps).

TRENDnet's 2-Port USB 3.0 ExpressCard Adapter, model TU3-H2EC, plugs

into any available ExpressCard port on a laptop computer to provide

two USB 3.0 ports. A power adapter provides full power to all

connected USB 3.0 devices. Backward compatibility allows users to

connect USB 2.0 devices to the USB 3.0 ports.

The 2-Port USB 3.0 PCI Express Adapter, model TU3-H2PIE, connects to

an available PCI Express slot on a tower computer. A 4-pin power port

connects to an internal power supply in order to provide full power to

all connected USB 3.0 devices. Backward compatibility allows users to

connect USB 2.0 devices to the USB 3.0 ports.

"USB 3.0 is like HD television - once you experience it, you won't go

back," stated Zak Wood, Director of Global Marketing for TRENDnet.

"The tremendous speed advantage of USB 3.0 (5Gbps) versus USB 2.0

(480Mbps) is driving strong consumer adoption. TRENDnet's user

friendly adapters upgrade existing computers to high speed 5Gbps USB

3.0."

TRENDnet's 2-Port USB 3.0 ExpressCard Adapter, model TU3-H2EC, will be

available from online and retail partners shortly. The MSRP for the

TU3-H2EC is U.S. $59.

TRENDnet's 2-Port USB 3.0 PCI Express Adapter, model TU3-H2PIE, will

be available from online and retail partners shortly. The MSRP for the

TU3-H2PIE is U.S. $49.


![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long-term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of next month's NewsBytes

Events.

Pixie Chronicles: Part 4 Kickstart

By Henry Grebler

Keywords: NFS

The Story So Far

In Part 1 I outlined my plans: to build a server using a network install. However, I got sidetracked by problems. In Part 2 I made some progress and dealt with one of the problems. In Part 3 I detailed the first part of the network install -- from start to PXE boot. This part details the rest of the procedure.

If you've been following me so far, you have a target machine which

uses the PXE process to boot into the PXE boot Linux kernel.

Importantly, you've tried it out and confirmed that this much of the

exercise behaves as desired.

The PXE boot process comes to the point where you see the prompt:

boot:

We have not yet set up enough to proceed to the next stage, for which

I used NFS.

Planning

First decide on an install method. My exercise was with Fedora

10. The possible install media are:

* CD or DVD drive
* Hard Drive
* Other Device (presumably USB)
* HTTP Server
* FTP Server
* NFS Server

Only the last 3 are network installs, which is all we are interested

in here. I chose NFS Server.

Further, I happened to have a machine which was PXE-capable. So my

plan was to turn on the machine and (as near as possible) have it

install all the software without my intervention.

If your machine is not PXE-capable in hardware, it may still be

possible to perform an unassisted install - but you will need to create

a CD or DVD to achieve the PXE part. It might be possible to create a

PXE floppy.

You will of course need a server to serve PXE data and other info over

the network. I chose my desktop as the PXE server.

Components

* PXE client (see previous article)
* PXE server (see previous article)
* tftp
* dhcp
* pxelinux
* kickstart
* NFS (see below)

NFS

Since the network method I chose was NFS, I had to set up NFS. I

judged that it would be easier to set up NFS than to set up HTTP; I

was probably a wee bit foolish not going for FTP -- at the time I had

security reservations, but I think for an internal private network

like mine, there is no difference in security between FTP and NFS.

Arguably TFTP would have been even better because it was already in place, having been needed at an earlier step. But it was not available as an option.

mkdir -p /Big/FedoraCore10/NFSroot

ln -s /Big/FedoraCore10/NFSroot /NFS

Create /etc/exports:

# exports

/NFS 192.168.0.0/24(ro,no_subtree_check,root_squash)

/NFS/CD_images 192.168.0.0/24(ro,no_subtree_check,root_squash)

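All I've done here is give any machine on my local subnet (192.168.0.0/24) read-only access (ro) to these two directories on the server (my desktop -- where I'm typing this). The root_squash option maps requests from a client's root user to the anonymous user, so a client cannot act as root on the exported files.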
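The client spec 192.168.0.0/24 admits any address whose first 24 bits match 192.168.0. When NFS misbehaves, it can help to confirm that a given machine really falls inside an export's range. Below is a minimal bash sketch of that test. The helper names ip_to_int and ip_in_cidr are hypothetical and are not part of any NFS tool.

```shell
#!/usr/bin/env bash
# Minimal sketch: is an IPv4 address inside a CIDR range such as the
# 192.168.0.0/24 client spec used in /etc/exports?
# ip_to_int and ip_in_cidr are hypothetical helper names.

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    local -a octet=($1)
    echo $(( (octet[0] << 24) | (octet[1] << 16) | (octet[2] << 8) | octet[3] ))
}

# ip_in_cidr ADDR NET/PREFIX: exit status 0 if ADDR lies within NET/PREFIX.
ip_in_cidr() {
    local addr=$1 net=${2%/*} prefix=${2#*/}
    # Keep only the top PREFIX bits; bash arithmetic is 64-bit on typical
    # systems, hence the final "& 0xFFFFFFFF".
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$addr") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `ip_in_cidr 192.168.0.3 192.168.0.0/24` succeeds for the server's own address, and `ip_in_cidr 192.168.99.25 192.168.0.0/24` fails. If you pass an interface address together with its prefix, as in `ip_in_cidr 192.168.99.1 192.168.99.25/24`, the same helper checks that a gateway in the kickstart file's network lines sits on the same /24 as its interface.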

NB exports do not "inherit". If you export "/NFS" that won't

allow clients to mount "/NFS/CD_images".
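The author found that clients could only mount directories listed in their own right. Given that, a quick check of the exports file before running exportfs -a can save a failed install. Below is a minimal sketch. export_listed is a hypothetical helper that succeeds only when the path is the first field of a non-comment line. It does not parse client specs, options, or wildcards.

```shell
#!/usr/bin/env bash
# Minimal sketch: succeed only if PATH appears verbatim as the export
# point (first field) of a non-comment line in an exports file.
# export_listed is a hypothetical helper; client specs and options
# on the line are ignored.
export_listed() {
    local file=$1 path=$2
    # found stays unset (exit 1) unless some line's first field equals PATH.
    awk -v p="$path" '$1 !~ /^#/ && $1 == p { found = 1 } END { exit !found }' "$file"
}
```

For instance, `export_listed /etc/exports /NFS/CD_images || echo "not exported"` flags a missing entry before the target machine ever tries to mount it.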

mkdir /var/lib/nfs/v4recovery

/etc/rc.d/init.d/nfs start

exportfs -a

exportfs -v

I had all sorts of problems with NFS. I found it useful to test locally, on the server itself, using:

mkdir /mnt/nfs

mount 192.168.0.3:/NFS /mnt/nfs

This should behave in a way akin to what the client PC will see when it tries to NFS-mount directories from the server. And if you want to snoop the network traffic during such a test, you'll need the following (a local mount travels over the loopback interface, hence -i lo):

tshark -w /tmp/nfs.tshark -i lo

The layout of the NFS directory:

ls -lA /NFS

lrwxrwxrwx 1 root staff 25 Nov 26 22:12 /NFS -> /Big/FedoraCore10/NFSroot

ls -lA /NFS/.

total 44

drwxrwxr-x 3 root staff 4096 Nov 28 17:59 CD_images

lrwxrwxrwx 1 root staff 13 Nov 27 10:31 b2 -> ks.b2.cfg.sck

-rw-rw-r-- 1 henryg henryg 3407 Dec 7 18:28 ks.b2.cfg.sck

The NFS directory also contains many other files left over from numerous

false starts. (Hey, I'm human.)

ls -lA /NFS/CD_images

total 3713468

-rw-rw-r-- 2 henryg henryg 720508928 Nov 26 07:43 Fedora-10-i386-disc1.iso

-rw-rw-r-- 2 henryg henryg 706545664 Nov 26 08:03 Fedora-10-i386-disc2.iso

-rw-rw-r-- 2 henryg henryg 708554752 Nov 26 08:38 Fedora-10-i386-disc3.iso

-rw-rw-r-- 2 henryg henryg 724043776 Nov 26 09:17 Fedora-10-i386-disc4.iso

-rw-rw-r-- 2 henryg henryg 720308224 Nov 26 09:18 Fedora-10-i386-disc5.iso

-rw-rw-r-- 2 henryg henryg 83990528 Nov 26 10:15 Fedora-10-i386-disc6.iso

-rw-rw-r-- 2 henryg henryg 134868992 Nov 26 10:17 Fedora-10-i386-netinst.iso

It is not necessary to mount the CD images; it seems that anaconda (the program which performs the actual install) knows how to do that. Sharp-eyed readers will have spotted that the ISO images in the /NFS/CD_images directory are hard links. That's because I first downloaded the images to /Big/downloads, thinking I would need to mount them; later, when I was setting up the NFS directory, I finally discovered that I needed the images as plain (unmounted) files. Rather than copy the files and waste a heap of disk space, or move the files and risk making something else fail, I chose to hard-link them and get "two for the price of one".
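The 2 in the second column of the ls -lA listing is each file's hard-link count. The minimal sketch below shows that both names refer to one inode. It uses throwaway temporary directories as stand-ins for /Big/downloads and /NFS/CD_images, and it assumes GNU stat, whose -c format option is GNU-specific.

```shell
#!/usr/bin/env bash
# Minimal sketch: a hard link is a second directory entry for the same
# inode, so the "copy" costs no extra disk space. Temporary directories
# stand in for /Big/downloads and /NFS/CD_images (illustrative paths only).
set -e
top=$(mktemp -d)
mkdir "$top/downloads" "$top/CD_images"
printf 'pretend this is an ISO image\n' > "$top/downloads/disc1.iso"

# Plain ln (no -s) makes a hard link; both names must be on the same filesystem.
ln "$top/downloads/disc1.iso" "$top/CD_images/disc1.iso"

# GNU stat: %i = inode number, %h = hard-link count, %n = file name.
stat -c '%i %h %n' "$top/downloads/disc1.iso" "$top/CD_images/disc1.iso"

inode_a=$(stat -c %i "$top/downloads/disc1.iso")
inode_b=$(stat -c %i "$top/CD_images/disc1.iso")
link_count=$(stat -c %h "$top/CD_images/disc1.iso")

# Removing one name leaves the data reachable through the other.
rm "$top/downloads/disc1.iso"
cat "$top/CD_images/disc1.iso"
rm -rf "$top"
```

The disk space is only freed when the last link to the inode is removed. Deleting the copy in /Big/downloads would therefore not disturb the NFS tree.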

The kickstart file

I used system-config-kickstart to generate a first kickstart file. I

edited it to get more of the things I wanted and then decided to try

it out. After several go-arounds I got to the point which got me into

trouble (as described in Part 1).

Here is the final kickstart file:

# ks.b2.cfg.sck - created by HMG from system-config-kickstart for b2

## - - debugging - - - - - - - - - -

## :: uncomment the following to debug a Kickstart config file

## interactive

#platform=x86, AMD64, or Intel EM64T

# System authorization information

auth --useshadow --enablemd5

# System bootloader configuration

bootloader --location=mbr

# I guess the "sda" will prevent trashing my USB stick

clearpart --all --initlabel --drives=sda

part /boot --asprimary --ondisk=sda --fstype ext3 --size=200

part swap --asprimary --ondisk=sda --fstype swap --size=512

part / --asprimary --ondisk=sda --fstype ext3 --size=1 --bytes-per-inode=4096 --grow

# Use graphical install

graphical

# Firewall configuration

firewall --enabled --http --ssh --smtp

# Run the Setup Agent on first boot

firstboot --disable

# System keyboard

keyboard us

# System language

lang en_AU

# Use NFS installation media

nfs --server=192.168.0.3 --dir=/NFS/CD_images

# Network information

network --bootproto=static --device=eth0 --gateway=192.168.99.1 --ip=192.168.99.25 --nameserver=127.0.0.1 --netmask=255.255.255.0 --onboot=on

network --bootproto=static --device=eth1 --gateway=192.168.25.1 --ip=192.168.25.25 --nameserver=198.142.0.51,203.2.75.132 --netmask=255.255.255.0 --onboot=on --hostname b2

# Reboot after installation

reboot

#Root password

rootpw --iscrypted $1$D.xoGzjz$kMojNQR7KFddumcLlQPEs0

# SELinux configuration

selinux --enforcing

# System timezone

timezone --isUtc Australia/Melbourne

# Install OS instead of upgrade

install

# X Window System configuration information

xconfig --defaultdesktop=GNOME --depth=8 --resolution=640x480

# Clear the Master Boot Record

#zerombr

%packages

@development-tools

@development-libs

@base

@base-x

@gnome-desktop

@web-server

@dns-server

@text-internet

@mail-server

@network-server

@server-cfg

@editors

emacs

gdm

lynx

-mutt

-slrn

%end
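The rootpw line carries an MD5-crypt hash, identified by the $1$ prefix, which matches the auth --enablemd5 option. Below is a minimal sketch for producing a hash of the same form for your own kickstart file. It assumes the OpenSSL command-line tool is installed, which the original does not mention, and the password is a placeholder.

```shell
#!/usr/bin/env bash
# Minimal sketch: generate an MD5-crypt ("$1$salt$hash") string for
# "rootpw --iscrypted". Assumes the openssl CLI is installed.
# The password is a placeholder. Passing it on the command line exposes
# it via ps; running "openssl passwd -1" with no password argument
# makes it prompt instead.
password='change-me'

# -1 selects the MD5-based crypt algorithm; openssl picks a random salt.
hash=$(openssl passwd -1 "$password")
echo "rootpw --iscrypted $hash"

# The salt sits between the second and third '$'; rehashing with the
# same salt must reproduce the same string.
salt=${hash#\$1\$}
salt=${salt%%\$*}
check=$(openssl passwd -1 -salt "$salt" "$password")
```

To use it, paste the printed line into the kickstart file in place of the existing rootpw line.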

Putting it all together

That's it. Here's a walk-through of what happens for an install.

1. The user connects the network cable to the target machine and powers it up.
2. PXE gains control and asks the network for an IP address and any other information the server has for this machine (at this stage identified by its MAC address).
3. The server sends the requested information.
4. PXE configures the NIC with the received IP address.
5. PXE uses TFTP to download the pxelinux boot loader, which announces itself with the prompt "boot: ".
6. The user enters b2. The user is no longer needed.
7. Still using TFTP, the target machine downloads the Linux kernel for label b2. In accordance with that label, it then uses NFS to download the kickstart file.
8. The kickstart file specifies that the install should also use NFS. The installer uses the parameters in the kickstart file to govern the installation.
9. When the install is complete, the target machine (now a shiny new server) reboots.
10. PXE gains control as before and steps 2 to 5 are repeated. However, at the "boot: " prompt, either the user simply presses Enter or, more likely, because the user is not there, the boot loader times out. Either way, the default label lhd is taken: the target machine boots off the recently installed hard drive.
11. Some time later, the user reboots and disables PXE boot, which is no longer needed.

References:

- http://docs.fedoraproject.org/install-guide/f10/en_US/sn-automating-installation.html
- http://fedoranews.org/dowen/nfsinstall/
- http://www.instalinux.com/howto.php
- http://ostoolbox.blogspot.com/2006/01/review-automated-network-install-of.html (open source toolbox, January 31, 2006: "Review: automated network install of suse, debian and fedora with LinuxCOE")
- http://nfs.sourceforge.net/ (Linux NFS Overview, FAQ and HOWTO Documents)
- http://docs.fedoraproject.org/mirror/en/sn-server-config.html
- http://docs.fedoraproject.org/mirror/en/sn-planning-and-setup.html


![[BIO]](../gx/authors/grebler.jpg)

Henry has spent his days working with computers, mostly for computer

manufacturers or software developers. His early computer experience

includes relics such as punch cards, paper tape and mag tape. It is

his darkest secret that he has been paid to do the sorts of things he

would have paid money to be allowed to do. Just don't tell any of his

employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 176 of Linux Gazette, July 2010

Tacco and the Painters (A Fable for the Nineties)

By Henry Grebler

Henry and I were having a conversation via e-mail, and happened upon the

subject of difficult clients. As in many things, he and I see eye to eye on

this issue - but he managed to do me one better: while I was still fuming

just a bit, and thinking about how to make things go better the next time,

Henry sent me this story that captured my (and clearly his) experience

perfectly, and ticked off all the checkboxes I was trying to fill. In fact,

I was bemused and stunned by how well it did so... but that is

traditionally the case with parables. They're timeless, and their lessons

endure.

This tale may not have much to do with Linux, or Open Source in general;

however, part of LG's function, as I see it, is to educate and entertain -

or better yet, to educate while entertaining. For those of you who, like

myself, work as consultants, or plan to go on your own and become

consultants, I recommend this story heartily and without reservation: read

it, understand it, follow it. Someday, it may save your sanity; for the

moment, I hope that it at least tickles your funny bone.

-- Ben Okopnik, Editor-in-Chief

"Here is our cottage," said Tacco. "We'd like it painted nicely."

"Of course," said Ozzy. "We always try to do a good job. Our workers

are very diligent. I'm sure you will be satisfied."

Tacco showed Ozzy all around the cottage. Ozzy asked the occasional

question and made some notes.

"We usually provide an estimate of how long the job will take and

charge time and materials," said Ozzy.

Tacco looked horrified. "No! We cannot agree to that!" he exclaimed.

"We will only proceed with you if you give us a fixed-price quote."

Ozzy looked uncomfortable. "We might be able to provide a fixed-price

quote. But we would need to have everything specified fairly precisely. We

wouldn't be able to permit ad hoc renovations once we start the

work."

"That's all right," said Tacco.

"And I'll bring in a couple of my estimators so that you and they can

agree exactly what the job is," continued Ozzy.

"When can you start? We want this job finished very soon because

people will be wanting to live in this cottage in the very near

future. We expect to have finished refurbishing in a couple of weeks."

They discussed dates for a while and finally agreed that the painting

would be finished in three months, at the end of May. They made an

appointment for Ozzy's estimators to go over the cottage with Tacco.

A few days later, Ozzy asked Tacco to go over the cottage in some

detail and tell the estimators and Ozzy the details of the painting

job: number of coats of paint, colour scheme and so on. The estimators

took measurements of the room sizes and layout.

Back in the office, Ozzy pored over the figures to come up with a

quote for Tacco. He had his secretary type it up and then he checked

all the details. He even had his estimators check his calculations. At

last, he mailed off the quote to Tacco.

A few days later, Tacco rang Ozzy to tell him the good news. Ozzy had

won the business. Tacco was eager to get the work under way. Ozzy's

team of painters would start the following week.

When the painters arrived, Tacco was there to meet them. He led them

to a building.

"This isn't the cottage that we looked at last time," said David, one

of the painters (who was also one of the estimators).

"No," agreed Tacco. "But it's very similar to the one you saw. I'm sure

the differences are not important."

David was a bit doubtful, but he was reluctant to appear

uncooperative. So he bit his tongue and led his work-mate, Charlie,

into the cottage.

"It doesn't look like the renovators have finished this cottage," said

Charlie.

"No, they haven't," agreed Tacco. "But they'll be finished by the time

you are ready to start painting."

"But I'm ready to start right now," wailed Charlie. "David and I were

going to start preparing the walls today."

"Well, can't you prepare the walls in those 2 rooms over there and

come back and prepare the walls in here another day?" asked Tacco.

"I can do that," agreed Charlie. "But I was counting on doing all the

preparation in one day."

Tacco gave him a look that suggested that Charlie was being most

unreasonable.

Charlie and David prepared the walls in the two rooms specified and

returned to the office. It was too late to start another job, so they

sat around playing darts.

A few days later, Charlie received a phone call from Tacco saying that

the renovators had finished all their modifications and so the rest of

the cottage was ready.

"When will you be here?" Tacco demanded.

Charlie was in the middle of some work for another customer. But Tacco

was so insistent and Charlie tried so hard to please his customers

that he agreed to go out that same afternoon.

When Charlie got to the cottage, Tacco's twin brother Racco was there, obviously very busy performing renovations on the cottage.

"How come you're here?" asked Racco abruptly. "I said I'd ring you

when the renovations were finished."

"B-but Tacco rang me this morning," pleaded Charlie.

"Anyway, it shouldn't bother you if the renovations aren't entirely

finished. There must be other things you can go on with because we

finished 4 other rooms yesterday. When you're ready to start work on

the new rooms, we will have finished them."

"But the cottage we first saw had only 4 rooms altogether. It sounds

like there are now 6 or 7 rooms. This is no longer a cottage, it's a

house."

"Stop complaining," demanded Racco. "The rooms we added on aren't very

big and they are basically exactly the same as the ones we showed

you."

"I'm not supposed to do any more work until all the renovations are

finished," said Charlie.

"Oh well. Since you're here I'll get Jocco to finish these

renovations. It'll only take about 5 minutes. Can you wait?"

Charlie felt trapped. 5 minutes didn't seem like such a long time. It

would be churlish of him to walk out now.

"All right. I'll wait," he replied.

Charlie tried to go on with some other work that needed to be done. He

noticed that some of the walls that he had prepared last time had had

some further renovations carried out on them. I guess I had better

redo the preparations on these walls, he thought.

Just then Racco came back. "Look, is it all right if I just take some

measurements in here?" he asked, and without waiting for a reply began

to measure the walls.

Charlie was nonplussed. Now there was nothing he could go on with. I

guess I'll get myself a coffee, he decided.

As he was coming back with the coffee, he overheard one of Racco's workers

saying, "Those workers of Ozzy's are bludgers. All they ever

do is sit around and drink coffee."

She betrayed no embarrassment at being overheard. Charlie was almost

in tears and turned away so as to hide his distress. He made a

production of drinking his coffee to calm himself.

An hour later, Racco came back and informed Charlie that the

renovations were now complete. He gave Charlie a few scribbled pages.

"These are the room numbers and the labels on the corresponding

keys," he explained to Charlie. "All the rooms are, of course, locked.

Check that each key works in the corresponding lock. Let me know if

there are any problems and I will have our Service Department provide

a new key."

Charlie looked at the list in dismay. There were 136 keys!

He put the list aside and decided he would deal with that another day.

For now, he would try to finish the preparation on 2 of the original

rooms.

After half an hour, he had nearly finished the first room. Charlie

found it most satisfactory to divide a room into sections. Each

section consisted of an area of wall 2 metres wide, from floor to

ceiling. He was just starting the last section when he heard a

crashing noise and found that the plaster sheet had fallen to the

ground and smashed to pieces.

He went to see Racco. Racco was very annoyed. "You must have hit it

with a sledge hammer - our plasterers guarantee that our walls can

withstand normal wear and tear."

They went to inspect the damage; all the while Racco muttered about

incompetent fools. "I'm going to have to get someone to rebuild this

wall," he complained.

"Look how strong these walls are," he announced, banging on another

section with his fist. "They're all built to the same standard," he

continued, banging on another wall. This time he banged a little

harder. "We've been guaranteed that they can withstand even the

heaviest pounding by the strongest fist. Of course, they're not

intended to withstand a sledge hammer."

Proudly, he banged on each panel in turn. Suddenly, there was a loud

crack, and the panel disintegrated. Racco swore loudly.

"Those bloody cretins in Building Maintenance," he yelled, inspecting

the underlying frame. "I told them to check the frames and replace any

rotten ones. It's not our fault. We only attach the plaster. If the

frames are rotten, the nails don't hold and the plaster falls off. It

happens all the time.

"Shouldn't bother you though. You can continue working in one of the

other rooms. They're all the same so it shouldn't matter which room

you work on next."

Charlie tried hard to regroup. Things seemed to have gotten completely

out of hand. When had this exercise first left the rails, he wondered.

Which was the exact moment when things had started to go wrong? It was

an impossible question.

He left the building, thoroughly dejected.

The next day, Charlie and David went back to the house. "I'll start on

some of the new rooms," said David. "You see if you can't finish the 2

original rooms."

Charlie started mixing paints. This is better, he thought. He wasn't

too keen on preparation, although he understood the need for it. He

felt there was little to show for an awful lot of hard work. But

painting was dead easy, and the customers were always cheered when

they saw how nice a room looked once the painting had been finished.

They never seemed to understand the connection between fastidious

preparation and a stunning final result. But Charlie understood.

As he worked, he started to hum and his spirits soared. Then David

came into the room where Charlie was painting. He looked pale.

"What's up?" asked Charlie.

"I've just tried the doors on half-a-dozen rooms," replied David. "None

of the keys fit!"

"What do you mean?" asked Charlie. "You must have to jiggle them a

bit. Keys get stuck sometimes."

"No," cried David. "The keys DON'T FIT - I can't even get them in the

keyhole! They're the wrong sort of key for the lock!"

Charlie went back with David to check. Sadly David was right. They

went to see Racco.

"I'm not responsible for keys - that's Security Services. We have no

control over keys and locks." Racco paused for a moment. "Look, the

renovations are finished. We'll get Security Services to cut new keys.

They shouldn't take long. You'll have the new keys and you can try

them out," he concluded with some satisfaction.

"Gimme a break," cried Charlie. "Are you expecting me to try 136

keys?"

"Well, I'm going to arrange to get them cut!" shouted Racco. "You

don't expect me to test them as well, do you? Look, by the time you

need to start work, all the keys will be done. Just tell me which room

you want to start work on and I'll get that key immediately. I can't

be fairer than that, can I?"

On their return to the office, Charlie complained to Ozzy, who rang

Racco. The response was staggeringly quick: there would be a

Meeting. Not just a meeting or a Meeting, but in fact A MEETING.

There was movement at the station, for the word had passed around...

...So all the cracks had gathered to the fray.

All the tried and noted riders from the stations near and far

Had mustered at the homestead overnight,

For the bushmen love hard riding where the wild bush horses are,

And the stock-horse snuffs the battle with delight.

'The Man from Snowy River', A. B. Paterson

Everyone from Tacco's organisation was at THE MEETING. David and

Juliette were the only two representatives from Ozzy's office.

David tried to explain the situation.

Racco jumped to his feet. "That's bullshit!" he shouted. "It is not

our fault. Those guys from Ozzy's office don't know their job. They do

everything wrong. They couldn't even cut the keys for the locks. We

had to do that!"

Flacco was Tacco's boss. When she heard this outburst from Racco, her

eyebrows shot up. She seemed to be in a conciliatory mood.

"I suggest that Ozzy's people give us an Impact Statement," she

proposed. Turning to Juliette, she continued, "Produce a list

detailing things like the time the plaster fell off the walls, and how

much time was lost. We'll look at it."

Back at Ozzy's office, the staff went into a huddle.

Finally, Borodin spoke.

"We are not going to produce a detailed Impact Statement. Here is the

impact. We were asked to start this job in February. It was understood

that for us to do our painting, the renovations had to be finished. It

is now June. The renovations are still not complete. The impact is the

total time from February to June.

"Further, the impact continues until the renovations are complete. That

means 'completely complete'. Not complete in these 5 rooms and 80% in

the remaining 10. We want to go in on one day, start at one end and

finish the preparation all the way through to the other. Without bits

of plaster hitting the floor. Without discovering that the keys don't

work on Friday. Without discovering that the 7th of July is a Buddhist

holiday.

"We reject entirely the question, 'But isn't there something you can

go on with?' The issue is not whether some part of the building can be

accessed. The only issue is whether the building is completely

accessible. If it isn't, if renovations are still being performed,

people cannot start occupying the building, let alone us painting it."

He folded down a second finger.

"We were asked to give a fixed-price quote on painting a four-room

cottage. We were shown one cottage; we are being asked to paint an

entirely different cottage. And it isn't a cottage any more. It's been

renovated into a 16-room bloody mansion! And the renovations have

still not been completed."

Borodin brought down a third finger.

"Further, there does not appear to have been any design involved in

producing the mansion. The reality is that we have been the guinea

pigs: when we have pointed out a problem, like the fact that there

were 4 bathrooms upstairs but no toilets, they have gone away,

scratched their heads and come up with a 'solution'. Which is why

there are so many extra rooms now. The building has grown, but it was

never planned.

"So we have no guarantee that it will ever be finished. Because I

don't think anyone knows what the building is meant to do. Since there

appears to be no definition of 'finished', how will anyone recognise the

endpoint if it should ever miraculously appear?"

Borodin reached for his fourth finger.

"There is a serious problem to do with size. Tacco's crew are hoping

to accommodate 120 people in that mansion. But we have checked with

council. They will not permit more than 46 people on that block. We

have pointed that out to Macco. He says he knew there was a limit. In

fact, his understanding was that there was a by-law limiting

occupancy to 32 people.

"What is Tacco's response? Is there any point in us painting enough

rooms for 120 people when only 46 can be accommodated? We could argue

that that is Tacco's problem. But, ..." Borodin left the thought

hanging.

He opened his hand and took hold of his thumb.

"We have told Tacco that we expect to be able to finish the painting

in eight days. Originally, we quoted 39 days. Tacco might say to us,

'How can you claim an impact of the entire time when you originally

quoted 39 days and now you claim you are only 8 days from finishing?'

"The answer is simple. We are claiming an impact of the entire time

because we cannot claim an impact of more than the entire time. If we

could, we would. The real impact is in fact 90 days (or thereabouts):

from February to June. That is the time we have wasted due to Tacco's

incompetence. It may show that we were remiss in our estimation: we

allowed 31 days for Tacco's delays; there were actually 3 times as

many.

"We have actually worked (or tried to) during that time. We have

expended more hours than we budgeted. We have been prevented from

painting other houses. It has cost us even more than it appears on

paper. It is one thing to wear travel time of one hour if one actually

performs 6 hours of useful work at the site. How does one account for

the travel time if one goes out, discovers that one can't work

usefully and comes back an hour later? What about the case where one

goes out, performs 6 hours of hard work doing preparation on a wall,

only to discover the next time one arrives at the site that the wall has

collapsed in the meantime, has been rebuilt, and has to be prepared

again?"

He paused and looked around the room.

"None of what I have said up till now helps to fill the biscuit tin.

"Today is the first day of the rest of the project. We are basically at

the start of the project. There is a difference: usually, at the start

of a project, we don't know how well we can work with the customer. We

tend to assume that the customer will be moderately co-operative and

moderately competent.

"We have now had 3 months' experience with the customer. We know him to

be below par in competence and co-operation. We know that he

attributes absolutely no firmness to deadlines. Worse, he announces

deadlines and, in the same breath, assures us that these will NOT be

met.

"Whatever estimates we made 3 months ago, we now know them to be

naively optimistic. Back then, we expected to be finished in 39 mad-,

er, I mean, man-days. Consequently, we can expect this job to take

much longer than another 39 man-days.

"My guess is that it would be better value for us to cut our losses.

We were barely making money when we quoted 39 man-days. We sure in

hell are gonna lose money if the job takes another 39 plus, say 30%,

... what's that, um, ... 52 man-days.

"Can we expect the job to go better from now on? Let me tell you,

guys: I can't see it; I just don't think that it's likely.

"However, I think there is one slim possibility (even though I can't

see it happening). If, somehow, it can be arranged that someone

important from Tacco's place is in a position where his rooster is on

the solid piece of wood, there might be a chance.

"Then, there might be some resources allocated to this project. So

that, when we come into a room and discover that a wall has fallen

down, we can find someone to tell; and that person has been given the

job of keeping us going as his highest priority; and he has the

ability to either fix the wall himself or he has the resources at his

disposal to get the wall fixed.

"So that, when we get a little lost because there are so many rooms,

we can ask someone to help us navigate around the castle.

"So that there is a full-time person whose responsibility is to learn

how we prepare and paint rooms and who can help us to prepare and

paint rooms. Not because we can't do the job without him - on the

contrary, we can do the job much more quickly without him! But, the

existence of such a person serves a number of goals. First, it shows a

commitment on Tacco's part. Secondly, it causes Tacco to feel some of

our pain when things get bogged down (because his person is also out

of action until the project is finished). Finally, one of Tacco's

requirements was that he wanted his staff to be able to perform

touch-up painting after we had finished.

"At the moment, we are the only ones whose roosters are vulnerable."

He stopped. Was there anything else?

"It's a huge shame, really. We started this project with a number of

technical concerns (could our painting machine handle some of the

tricky shapes in Tacco's cottage?). We passed all those tests with

flying colours. We adapted our painting machine and it is now bigger

and better than it used to be. It turns out that we haven't been able

to paint the cottage-house-mansion-castle because it isn't finished.

Technical problems were overcome with breathtaking alacrity.

"So, the last issue is: how will we look if we walk away from this

job? I can't answer categorically. I do know that we have walked away

from other customers who were unreasonable and it doesn't appear to

have hurt us. Generally, it has had a therapeutic effect on our

sanity! Because customers like that mess with your mind. They create

the suspicion that we have not given our all. They attempt to drag us

into the same pit of mediocrity and incompetence in which they lurk.

"My vote would be to call it quits.

"Can we take anything away from this debacle? I think so. I think

there is a lesson here for us for next time.

"Moral: Everyone must stand to lose a rooster."


![[BIO]](../gx/authors/grebler.jpg)

Henry has spent his days working with computers, mostly for computer

manufacturers or software developers. His early computer experience

includes relics such as punch cards, paper tape and mag tape. It is

his darkest secret that he has been paid to do the sorts of things he

would have paid money to be allowed to do. Just don't tell any of his

employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the Open Publication License unless otherwise noted in the body of the article. Linux Gazette is not produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 176 of Linux Gazette, July 2010

Knoppix Boot From PXE Server - a Simplified Version for Broadcom based NICs

By Krishnaprasad K., Shivaprasad Katta, and Sumitha Bennet

Why this article?

The intent of this article is to capture, in detail, every step of

booting the Knoppix Live CD from a PXE server. Booting Knoppix from PXE is

generally a straightforward task, but here we would like to pinpoint the areas

where we faced difficulties, in the hope that our notes will be

useful to others who run into the same issues. We were attempting to

automate system maintenance tasks such as system BIOS updates and

firmware updates for system peripherals (storage controllers, NICs,

etc.). We planned to boot Knoppix from PXE and then customize it to execute

the .BIN upgrade packages (for upgrading system component firmware). We

came across the Knoppix Live CD and its HOWTOs, but we still hit

roadblocks which had to be sorted out before we could boot Knoppix