...making Linux just a little more fun!

February 2010 (#171):

- Mailbag

- Talkback

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Taming Technology: The Case of the Vanishing Problem, by Henry Grebler

- Random signatures with Mutt, by Kumar Appaiah

- The Next Generation of Linux Games - Word War VI, by Dafydd Crosby

- The Gentle Art of Firefox Tuning (and Taming), by Rick Moen

- Words, Words, Words, by Rick Moen

- Bidirectionally Testing Network Connections, by René Pfeiffer

- Sharing a keyboard and mouse with Synergy (Second Edition), by Anderson Silva and Steve 'Ashcrow' Milner

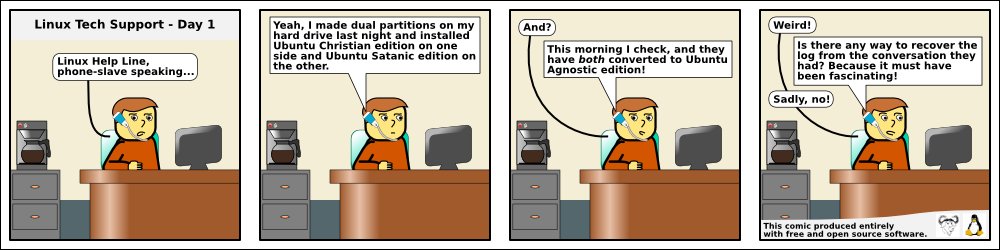

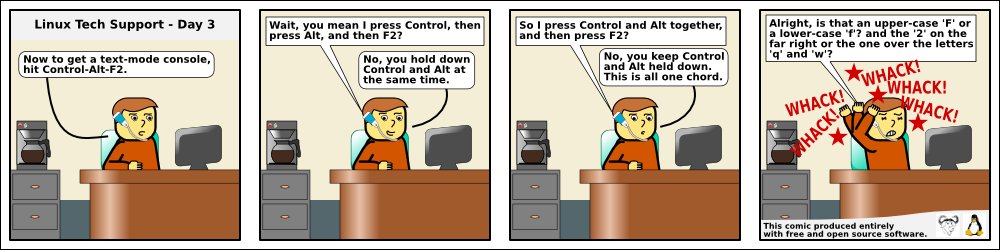

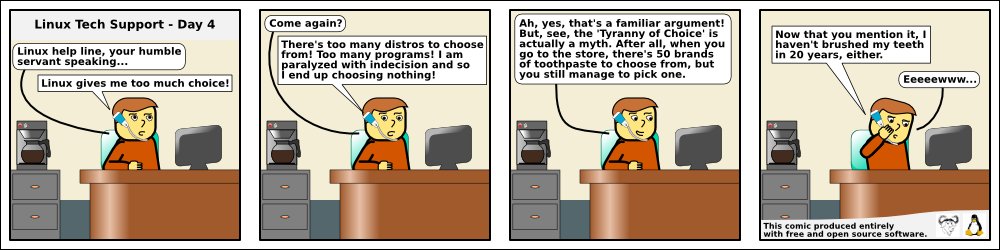

- HelpDex, by Shane Collinge

- XKCD, by Randall Munroe

- Doomed to Obscurity, by Pete Trbovich

- Reader Feedback, by Kat Tanaka Okopnik and Ben Okopnik

Mailbag

This month's answers created by:

[ Amit Kumar Saha, Ben Okopnik, Neil Youngman, Suramya Tomar, Thomas Adam ]

...and you, our readers!

Our Mailbag

apertium-en-ca 0.8.99

Jimmy O'Regan [joregan at gmail.com]

Fri, 15 Jan 2010 19:57:01 +0000

This is a pre-release of apertium-en-ca (because there are some bugs you can't find until after you've released...)

New things:

- Vocabulary sync with apertium-en-es 0.7.0

- English dialect support (British and American)

- Initial support for Valencian forms

- Start of Termcat import. Thanks to Termcat for allowing this, and to Prompsit Language Engineering for facilitating it.

At the moment, only a few items have been imported. They have mostly been limited to terms which include ambiguous nouns (temps, estació, etc.)

Caveats:

- The vocabulary sync is not complete: specifically, I skipped some proverbs, and had to omit the verb 'blog'.

- The Valencian support is not as complete as in apertium-es-ca (on the other hand, the English dialect support is better than in en-es).

This release also includes several multiwords harvested from Francis Tyers' initial investigations into lexical selection (and others suggested by it).

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

apertium-ht-en 0.1.0

Jimmy O'Regan [joregan at gmail.com]

Sun, 24 Jan 2010 23:15:58 +0000

Announcing apertium-ht-en 0.1.0

In response to a request from volunteers at Crisis Commons (http://crisiscommons.org), we have put some effort into creating a Haitian Creole to English module for Apertium. We do not usually make releases at such an early stage of development, but because of the circumstances, we think it's better to make available something that at least partly works, despite its numerous flaws, than to hold off until we can meet our usual standards.

The present release is for Haitian Creole to English only; much of the vocabulary was derived from a spell checking dictionary using cognate induction methods, and is most likely incorrect. CMU have graciously provided Open Source bilingual data, but have deliberately used a GPL-incompatible licence, which we cannot use. We hope that they will see fit to change this.

Many thanks to Kevin Scannell for providing us with spell checking data; to Francis Tyers and Kevin Unhammer for their contributions; and to the Apertium community for their support and indirect help.

I would like to dedicate this release to the memory of my grandmother, Anne O'Regan, who passed away on Monday the 18th, just after I began working on this.

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

[ Thread continues here (3 messages/4.68kB) ]

Creating appealing schemas and charts

Peter Hüwe [PeterHuewe at gmx.de]

Thu, 14 Jan 2010 15:35:55 +0100

Dear TAG,

Unfortunately, I have to create a whole bunch of schemas, charts, diagrams, flow charts, state machines (FSMs) and so on for my diploma thesis, and I was wondering if you happen to know some good tools to create such graphics.

Of course I know about Dia, Xfig, (OpenOffice Draw), GIMP, and Inkscape, but either the diagrams look really, really old-fashioned (Dia, Xfig) or are rather cumbersome to create (GIMP, Inkscape, Draw), especially if you have to modify them afterwards.

So I'm looking for a handful of neat tools to create some appealing schemas. Unfortunately I can't provide a good example, but things like colors, borders, and rounded edges are definitely a good start.

I have to admit that it is arguable whether some eye-candy is really necessary in a scientific paper; however, adding a bit of it really helps the reader to get the gist of the topic faster.

Thanks,

Peter

[ Thread continues here (5 messages/6.36kB) ]

Find what is creating a given directory?

Suramya Tomar [security at suramya.com]

Thu, 28 Jan 2010 15:34:19 +0530

Hey,

I know it sounds kind of weird, but I want to know if it is possible to identify what process/program is creating this particular directory on my system.

Basically, in my home folder a directory called "Downloads" keeps getting created at random times. The directory doesn't have any content inside it and is just an empty folder.

I thought that it was probably being created by one of the applications I was running at the time, but when I tried to narrow down the application by using each one separately and waiting for the directory to be created, I wasn't able to replicate the issue.

I also tried searching on Google for this, but it seems like no one else is having this issue, or maybe my searches are too generic.

I am running Debian Testing (Squeeze), and the applications I normally have running are:

* Firefox (3.6)

* Thunderbird (3.0.1)

* Dolphin (Default KDE 4.3.4 version)

* Konsole (3-4 instances)

* EditPlus using wine

* Amarok (1.4.10)

* ksensors

* Tomboy Notes

* xchat

* gnome-system-monitor

BTW, I noticed the same behavior when I was using Ubuntu last year (9.10).

Any ideas on how to figure this out? Have any of you noticed something similar on your system?

- Suramya

--

-------------------------------------------------

Name : Suramya Tomar

Homepage URL: http://www.suramya.com

-------------------------------------------------

************************************************************

[ Thread continues here (7 messages/8.29kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 171 of Linux Gazette, February 2010

Talkback

Talkback:170/launderette.html

[ In reference to "/launderette.html" in LG#170 ]

Jimmy O'Regan [joregan at gmail.com]

Tue, 29 Dec 2009 03:29:36 +0000

2009/12/26 Ben Okopnik <[email protected]>:

> On Sat, Dec 26, 2009 at 12:08:04PM +0000, Jimmy O'Regan wrote:

>> http://www.bobhobbs.com/files/kr_lovecraft.html

>>

>> "Recursion may provide no salvation of storage, nor of human souls;

>> somewhere, a stack of the values being processed must be maintained.

>> But recursive code is more compact, perhaps more easily understood–

>> and more evil and hideous than the darkest nightmares the human brain

>> can endure. "

>

> [blink] Are you saying there's anything wrong with ':(){ :|:&};:'?

> Or, for that matter, with 'a=a;while :;do a=$a$a;done'? Why, I use them all

> the time!

>

> ...Then, after rebooting the poor hosed machine, I talk about

> implementing user quotas and so on. Good illustrative tools for my

> security class, those.

Just started reading the new pTerry, and this mail and the following quote somehow resonated for me...

'I thought I wasn't paid to think, master.'

'Don't you try to be smart.'

'Can I try to be smart enough to get you down safely, master?'

But then, every Discworld book is full of such nuggets...

...

More important right now was what kind of truth he was going to have to impart to his colleagues, and he decided not on the whole truth, but instead on nothing but the truth, which dispensed with the need for honesty.

''ow do I know I can trust you?' said the urchin.

'I don't know,' said Ridcully. 'The subtle workings of the brain are a mystery to me, too. But I'm glad that is your belief.'

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

Talkback:170/lan.html

[ In reference to "The Village of Lan: A Networking Fairy Tale" in LG#170 ]

Rick Moen [rick at linuxmafia.com]

Mon, 4 Jan 2010 18:01:08 -0800

Forwarding with Peter's permission.

----- Forwarded message from Peter Hüwe <[email protected]> -----

From: Peter Hüwe <[email protected]>

To: [email protected]

Date: Tue, 5 Jan 2010 02:09:58 +0100

Subject: Thanks for your DNS articles in LG

Hi Rick,

I just stumbled upon your DNS articles in Linux Gazette, and although I first thought this topic was not that interesting for me, it turned out to be really, really interesting!

I found out that the DNS server I used to use (my university's) was horribly slow and seemed not to use any caching mechanism: first request 200-300ms, second request 200-300ms :/

Then I checked out other DNS servers like Google's and OpenDNS, which were quite fast (1st: 130ms, 2nd: 30ms).

But as I don't like using Google for everything (no Googlemail account here), and I really dislike the "wrong" answer of OpenDNS for nonexistent domains (as it breaks Google search by typing into the Firefox URL bar), I eventually installed Unbound, and I'm really, really happy with it.

Installation was really easy, and the results are tremendous (especially given the fact that I surf to only a handful of pages most of the time): a speedup from 300ms to 0ms for the second hit. That's really nice.

The only minor drawback I see is that, as I have to run it locally on my box (yes, I'm one of your oddballs), it loses its cache after a reboot. Do you happen to know if there is something I could do about that?

Anyway, thank you for your great articles and for improving my Internet experience even further.

Kind regards,

Peter

----- End forwarded message -----

[ Thread continues here (3 messages/7.07kB) ]


2-Cent Tips

Two-cent Tip: efficient use of "-exec" in find

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Sun, 31 Jan 2010 16:39:55 +0700

Most CLI aficionados use this kind of construct when executing a command over "find" results:

$ find ~ -type f -exec du -k {} \;

Nothing is wrong with that, except that "du" is called over and over, each time with a single argument (the full path of one file). Fortunately, there is a way to cut down the number of executions:

$ find ~ -type f -exec du -k {} +

Replacing ";" with "+" makes "find" work the way xargs does: each run of du -k is handed many file names at once, so far fewer processes are started.
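To see the difference for yourself, you can have find launch a tiny inline shell that only reports how many file names it received. This is a minimal sketch: the scratch directory and the three empty files are made up for the demonstration, and it assumes a find that supports the POSIX "-exec ... {} +" form (GNU findutils does). The extra "sh" after the quoted script fills $0, so "$#" counts only the file names.

```shell
#!/bin/sh
# Demo: how many file names does each command launched by find receive?
# The scratch directory and file names are hypothetical test data.
demo_dir=$(mktemp -d)
touch "$demo_dir/a" "$demo_dir/b" "$demo_dir/c"

# With '\;' find starts the command once per file: each run sees 1 argument.
per_file=$(find "$demo_dir" -type f -exec sh -c 'echo "$#"' sh {} \;)

# With '+' find packs as many paths as fit onto one command line,
# so for three small files a single run sees all 3 names.
batched=$(find "$demo_dir" -type f -exec sh -c 'echo "$#"' sh {} +)

echo "one run per file (';'):"
echo "$per_file"
echo "batched runs ('+'):"
echo "$batched"

rm -rf "$demo_dir"
```

On a large tree the "+" form still splits the list whenever it would exceed the system's command-line length limit, so you may see several batched runs rather than exactly one.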

PS: Thanks to tuxradar.com for the knowledge!

--

regards,

Mulyadi Santosa

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (2 messages/1.80kB) ]

Two-cent Tip: creating scaled up/down image with ImageMagick

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Wed, 30 Dec 2009 11:42:36 +0700

Want a quick way to scale your image files up or down? Suppose you want to create a proportionally scaled-down version of abc.png that is 50 pixels wide. Execute:

$ convert -resize 50x abc.png abc-scaled.png
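To apply the same resize to a whole directory of PNGs, a small loop is enough. This is a sketch under assumptions: the helper names scaled_name and resize_all_png are made up for illustration, the "-scaled" suffix simply follows the example above, and it assumes ImageMagick's convert is installed. In ImageMagick's geometry syntax, "50x" fixes the width and lets the height follow the aspect ratio; "x50" would fix the height instead.

```shell
#!/bin/sh
# Hypothetical helper: abc.png -> abc-scaled.png
scaled_name() {
    printf '%s-scaled.%s\n' "${1%.*}" "${1##*.}"
}

# Hypothetical helper: resize every PNG in the current directory to
# 50 pixels wide, keeping the aspect ratio ("50x" leaves height unset).
resize_all_png() {
    for f in *.png; do
        [ -e "$f" ] || continue                      # no PNGs: glob stayed literal
        case "$f" in *-scaled.png) continue ;; esac  # skip our own output files
        convert "$f" -resize 50x "$(scaled_name "$f")"
    done
    return 0
}
```

Run resize_all_png from inside the directory that holds the images; the "-scaled" check keeps a second run from shrinking the thumbnails again.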

--

regards,

Mulyadi Santosa

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com


News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff


Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in plain text; other formats may be rejected without reading. [You have been warned!] A one- or two-paragraph summary plus a URL has a much higher chance of being published than an entire press release. Submit items to [email protected]. Deividson can also be reached via Twitter.

News in General

Linux.com Launches New Jobs Board


The Linux Foundation has created a new Linux Jobs Board at Linux.com: http://jobs.linux.com/. The new Linux.com Jobs Board can provide employers and job seekers with an online forum to find the best and brightest Linux talent or the ideal job opportunity.

The JobThread Network, an online recruitment platform, reports that the demand for Linux-related jobs has grown 80 percent since 2005, showing that Linux represents the fastest-growing job category in the IT industry.

"Linux's increasing use across industries is building high demand for Linux jobs despite national unemployment stats," said Jim Zemlin, executive director at the Linux Foundation. "Linux.com reaches millions of Linux professionals from all over the world. By providing a Jobs Board feature on the popular community site, we can bring together employers, recruiters, and job seekers to lay the intellectual foundation for tomorrow's IT industry."

Employers interested in posting a job opening have two options: they can post their openings on Linux.com and reach millions of Linux-focused professionals, or they can use the JobThread Network on Linux.com to reach an extended audience that includes 50 niche publishing sites with a combined 9.8 million visitors every month. For more information and to submit a job posting, please visit: http://jobs.linux.com/post/.

Job seekers can include their LinkedIn details on their Linux.com profiles, including their resumes. They can also subscribe to the Linux.com Jobs Board RSS feed, receive alerts by e-mail, and follow opportunities about Linux-related jobs on Twitter at www.twitter.com/linuxdotcom.

Linux.com hosts a collaboration forum for Linux users and developers to connect with each other. Information on how Linux is being used, such as in embedded systems, can also be found on the site. To join the Linux.com community, which currently has more than 11,000 registered members, go to: http://www.linux.com/community/register/.

Google moves to ext4


Search and software giant Google is now in the process of upgrading its storage infrastructure from the ext2 filesystem to ext4.

In a recent e-mail post, Google engineer Michael Rubin explained that "...the metadata arrangement on a stale file system was leading to what we call 'read inflation'. This is where we end up doing many seeks to read one block of data."

Rubin also noted that "...For our workloads, we saw ext4 and xfs as 'close enough' in performance in the areas we cared about. The fact that we had a much smoother upgrade path with ext4 clinched the deal. The only upgrade option we have is online. ext4 is already moving the bottleneck away from the storage stack...."

In a related move, Ted Ts'o has joined Google, after concluding his term as CTO of the Linux Foundation. Ts'o participated in creating the ext4 filesystem.

Firefox Grows 40% in 2009, 3.5 now most Popular


Usage data gathered by StatCounter.com at the end of December showed that Mozilla's Firefox 3.5 had finally surpassed both IE 7 and IE 8 to become the world's most popular Web-browsing software. Holiday sales brought IE 8 within a hair of overtaking Firefox 3.5, but Firefox was still ahead in mid-January. See the graph for all browsers here: http://gs.statcounter.com/#browser_version-ww-weekly-200827-201002/.

However, combined usage of IE 7 and IE 8 was almost 42%, vs. 30.5% for Firefox 3.0 and 3.5 combined.

According to the Metrics group at Mozilla, the worldwide growth rate for Firefox adoption was 40% for 2009. The number of active daily users was up an additional 22.8 million for 2009, higher than the 16.4 million users added in 2008.

This increase occurred even though the release of Firefox 3.6 was delayed until late January. This next version of the open source browser from the Mozilla Foundation will feature improvements in stability, security, and customization, and will also offer features for location-aware browsing.

A release candidate for 3.6 was available in mid-January. The next milestone release, version 4.0, has been pushed back until the end of 2010. It will feature separate processes for each tab, like Google Chrome, and a major UI update.

According to StatCounter, this was Firefox's market share as of Dec '09:

- 29% Africa

- 26% Asia

- 41% Europe

- 31% North America

- 32% South America

http://blog.mozilla.com/metrics/2010/01/08/40-firefox-growth-in-2009/.

European Commission Approves Oracle's Acquisition of Sun


On January 21, Oracle Corporation received regulatory approval from the European Commission for its acquisition of Sun Microsystems. Oracle expects approval from China and Russia to follow, and expects its Sun transaction to close quickly.

The European Commission had been under pressure from some of the founders of MySQL to separate it from the Sun acquisition on competitive grounds. However, Mårten Mickos, the former CEO of MySQL, and others had argued that the famed open source database was already on a dual commercial and open source development model, and that the large community around it could resist any efforts by Oracle to stunt its development.

The Commission found that concerns about MySQL were not as serious as first voiced. "The investigation showed that although MySQL and Oracle compete in certain parts of the database market, they are not close competitors in others, such as the high-end segment," the Commission said in its announcement. It also concluded that PostgreSQL could become an open source alternative to MySQL, if that should prove necessary, and was already competing with Oracle's database. The Commission also said that it was now "...satisfied that competition and innovation will be preserved on all the markets concerned."

Oracle hosted a live event for customers, partners, press, and analysts on January 27, 2010, at its headquarters in Redwood Shores, California.

Oracle CEO Larry Ellison, along with executives from Oracle and Sun, outlined the strategy for the combined companies, product roadmaps, and the benefits from having all components (hardware, operating system, database, middleware, and applications) engineered to work together. The event was broadcast globally, and an archive should be available at http://www.oracle.com/sun/.

Conferences and Events

- MacWorld 2010


February 9 - 13, San Francisco, CA - Priority Code - MW4743

http://www.macworldexpo.com/.

- Open Source Cloud Computing Forum


February 10, hosted online by Red Hat

http://www.redhat.com/cloudcomputingforum/.

- Southern California Linux Expo (SCALE 8x)

February 19 - 21, LAX Airport Westin, Los Angeles, CA

The Southern California Linux Expo (SCALE) returns to the Westin LAX Hotel for the third year. Now celebrating its eighth year, SCALE owes its success, in part, to its unique format of a main weekend conference with Friday specialty tracks.

This year's main weekend conference consists of five tracks: beginner, developer, and three general interest tracks. Topics span all interest levels, from a review of desktop operating systems to in-depth programming and scripting with open source languages and a discussion of the current state of open source. In addition, there will be over 80 booths hosted by commercial and non-commercial open source enthusiasts.

The Friday specialty tracks are Women In Open Source ("WIOS") and Open Source Software In Education ("OSSIE").

http://socallinuxexpo.org/.

- USENIX Conference on File and Storage Technologies (FAST '10)

February 23-26, San Jose, CA

Join us in San Jose, CA, February 23-26, 2010, for USENIX FAST '10. At FAST '10, explore new directions in the design, implementation, evaluation, and deployment of storage systems. Learn from leaders in the storage industry, beginning Tuesday, February 23, with groundbreaking file and storage tutorials by industry leaders such as Brent Welch, Marc Unangst, Michael Condict, and more. This year's innovative 3-day technical program includes 21 technical papers, as well as two keynote addresses, Work-in-Progress Reports (WiPs), and a Poster Session. Don't miss this unique opportunity to meet with premier storage system researchers and industry practitioners from around the globe.

Register by February 8 and save! Additional discounts are available!

http://www.usenix.org/fast10/lg

- ESDC 2010 - Enterprise Software Development Conference


March 1 - 3, San Mateo Marriott, San Mateo, CA

http://www.go-esdc.com/.

- RSA 2010 Security Conference


March 1 - 5, San Francisco, CA

http://www.rsaconference.com/events/.

- BIZcon Europe 2010

March 4-5, The Westin Dragonara Resort, St. Julian's, Malta, Europe

Are you sold on using Open Source but find management holds you back because they don’t understand it? We come up against this all the time, so we’ve organized a conference for senior execs to help explain the benefits of Open Source, to be held in the first week of March.

We have speakers from vendors and SIs as well as the community to help get the message across. If nothing else, it could be a welcome escape from the cold: we’re hosting it in sunny Malta :)

Go to http://www.ricston.com/bizcon-europe-2010-harnessing-open-source/ for more info, and help us show management how Open Source can be a great asset to them as well as us. And thanks for helping us out!

- Enterprise Data World Conference


March 14 - 18, Hilton Hotel, San Francisco, CA

http://edw2010.wilshireconferences.com/index.cfm.

- Cloud Connect 2010


March 15 - 18, Santa Clara, CA

http://www.cloudconnectevent.com/.

- Texas Linux Fest


April 10, Austin, TX

http://www.texaslinuxfest.org/.

- MySQL Conference & Expo 2010


April 12 - 15, Convention Center, Santa Clara, CA

http://en.oreilly.com/mysql2010/.

- 4th Annual Linux Foundation Collaboration Summit


co-located with the Linux Forum Embedded Linux Conference

April 14 - 16, Hotel Kabuki, San Francisco, CA (by invitation).

- eComm - The Emerging Communications Conference


April 19 - 21, Airport Marriott, San Francisco, CA

http://america.ecomm.ec/2010/.

- STAREAST 2010 - Software Testing Analysis & Review


April 25 - 30, 2010, Orlando, FL

http://www.sqe.com/STAREAST/.

- Usenix LEET '10, IPTPS '10


April 27 - 28, San Jose, CA

http://usenix.com/events/.

- USENIX Symposium on Networked Systems Design & Implementation (USENIX NSDI '10)

April 28-30, San Jose, CA

Join us in San Jose, CA, April 28-30, 2010, for USENIX NSDI '10. At NSDI '10, meet with leading researchers to explore the design principles of large-scale networked and distributed systems. This year's 3-day technical program includes 29 technical papers with topics including cloud services, Web browsers and servers, datacenter and wireless networks, malware, and more. NSDI '10 will also feature a poster session showcasing early research in progress. Don't miss this unique opportunity to meet with premier researchers in the computer networking, distributed systems, and operating systems communities.

Register by April 5 and save! Additional discounts are available!

http://www.usenix.org/nsdi10/lg

- Citrix Synergy-SF


May 12 - 14, San Francisco, CA

http://www.citrix.com/lang/English/events.asp.

- Semantic Technology Conference


June 21 - 25, Hilton Union Square, San Francisco, CA

http://www.semantic-conference.com/.

- O'Reilly Velocity Conference


June 22 - 24, Santa Clara, CA

http://en.oreilly.com/velocity/.

- LinuxCon 2010


August 10 - 12, Renaissance Waterfront, Boston, MA

http://events.linuxfoundation.org/events/linuxcon/.

- USENIX Security '10


August 11 - 13, Washington, DC

http://usenix.com/events/sec10/.

- 2nd Japan Linux Symposium


September 27 - 29, Roppongi Academy Hills, Tokyo, Japan

http://events.linuxfoundation.org/events/japan-linux-symposium/.

- Linux Kernel Summit


November 1 - 2, Hyatt Regency Cambridge, Cambridge, MA

http://events.linuxfoundation.org/events/linux-kernel-summit/.

Distro News

SimplyMEPIS 8.0.15 Update and 8.5 beta4 Released


MEPIS LLC has released SimplyMEPIS 8.0.15, an update to the community edition of MEPIS 8.0. The ISO files for 32- and 64-bit processors are SimplyMEPIS-CD_8.0.15-rel_32.iso and SimplyMEPIS-CD_8.0.15-rel_64.iso.

SimplyMEPIS 8.0 uses a Debian Lenny Stable foundation, enhanced with a long-term support kernel, key package updates, and the MEPIS Assistant applications to create an up-to-date, ready-to-use system providing a KDE 3.5 desktop.

This release includes recent Debian Lenny security updates as well as MEPIS updates that are compatible between MEPIS versions 8.0 and 8.5. The MEPIS updates include kernel 2.6.27.43, openoffice.org 3.1.1-12, firefox 3.5.6-1, flashplugin-nonfree 2.8, and bind9 9.6.1P2-1.

Warren Woodford announced that SimplyMEPIS 8.4.96, the fourth beta of MEPIS 8.5, is available from MEPIS and public mirrors. The MEPIS 8.5 beta includes a Debian 2.6.32 kernel.

Warren said, "Even though the past two weeks were a holiday time, we have been busy. In this beta, I did a lot of work on the look and feel of the KDE4 desktop."

The ISO files for 32- and 64-bit processors are SimplyMEPIS-CD_8.4.96-b4_32.iso and SimplyMEPIS-CD_8.4.96-b4_64.iso respectively. Deltas, requested by the community, are also available.

ISO images of MEPIS community releases are published to the 'released' sub-directory at the MEPIS Subscriber's Site, and at MEPIS public mirrors. Find downloads at http://www.mepis.org/.

Software and Product News

Sun Microsystems Unveils Open Source Cloud Security Tools


As part of its strategy to help customers build public and private clouds that are open and interoperable, Sun Microsystems, Inc. is offering open source cloud security capabilities and support for the latest Security Guidance from the Cloud Security Alliance. For more information on Sun's Cloud Security initiatives, visit http://www.sun.com/cloud/security/.

Leveraging the security capabilities of Sun's Solaris operating system, including Solaris ZFS and Solaris Containers, the security tools help to secure data in transit, at rest, and in use in the cloud, and work with cloud offerings from leading vendors including Amazon and Eucalyptus.

Along with introducing new security tools in December, Sun also announced support for the Cloud Security Alliance's "Guidance for Critical Areas of Focus in Cloud Computing - Version 2.1." Sun privacy and security experts have been instrumental in the industry-wide effort to develop the security guidance, and have been active participants in the Cloud Security Alliance since its inception. The new framework provides more concise and actionable guidance for secure adoption of cloud computing, and encompasses knowledge gained from real world deployments. For more information, visit http://www.cloudsecurityalliance.org/guidance/.

Sun also published a new white paper, "Building Customer Trust in Cloud Computing with Transparent Security," that provides an overview of the ways in which intelligent disclosure of security design, practices, and procedures can help improve customer confidence while protecting critical security features and data, improving overall governance. To download the paper, visit http://www.sun.com/offers/details/sun_transparency.xml.

Sun announced the availability of several open source Cloud Security tools, including:

- OpenSolaris VPC Gateway: The OpenSolaris VPC Gateway software enables customers to quickly create a redundant, secure communications channel to an Amazon Virtual Private Cloud without the need for proprietary networking equipment. To download the OpenSolaris VPC Gateway tool, visit http://kenai.com/projects/osolvpc/pages/Home/;

- Immutable Service Containers (ISC): Incorporating many of the security features of the OpenSolaris operating system, including Solaris ZFS, Solaris Containers, and Solaris IP Filter and Auditing, the ISC architecture leverages service compartmentalization and improved integration techniques to create virtual machines with significantly improved security protection and monitoring capabilities. To download the ISC software or pre-built images, visit http://kenai.com/projects/isc/pages/OpenSolaris/;

- Security Enhanced Virtual Machine Images (VMIs): Using many of the techniques developed for the Immutable Service Container project, Sun created several security-enhanced VMIs for the Amazon Elastic Compute Cloud (EC2). These virtual machines leverage industry-accepted recommended practices including non-executable stacks, encrypted swap, and auditing enabled by default. Beyond simple OpenSolaris images, Sun has also published integrated software stacks such as Solaris AMP and Drupal built on these security-enhanced images. To download the VMIs, visit http://www.sun.com/cloud/security/;

- Cloud Safety Box: Simplifies managing encrypted content in the Cloud. Using a simple Amazon S3-like interface, the Cloud Safety Box automates the compression, encryption, and splitting of content being stored in the cloud on any supported operating system, including Solaris, OpenSolaris, Linux, and Mac OS X. To download the Cloud Safety Box, visit http://kenai.com/projects/s3-crypto/pages/Home/.

For more information on Sun's cloud technologies and Sun Open Cloud APIs, visit http://www.sun.com/cloud/.

Canonical Announces Bazaar Commercial Services


Companies and open source projects interested in using Canonical's popular version control system now have access to a suite of commercial services (Consultancy & Conversion, Training, and Support) allowing them to migrate, deploy, and manage Bazaar.

Used by Ubuntu and other open source and commercial projects, Bazaar (bzr) is a version control system (VCS) that supports distributed development environments. Many existing version control systems use a centralised model, a particular challenge to globally distributed 'chase the sun' development teams. Although bzr itself is open source, there is no requirement for commercial projects to make their code publicly available.

Sun Microsystems migrated the code for its MySQL database software to Bazaar a year ago, using bzr conversion and support services. "We wanted to use a tool which suited our distributed contributor model for MySQL, but we needed the reassurance of access to the Bazaar development team through our transition and use of the VCS," said Jeffrey Pugh, vice president of MySQL Engineering, Sun Microsystems. "We have found bzr works really well for our model, and that the bzr support team and developers have been responsive to our requirements."

"The increasingly distributed nature of software development has allowed Bazaar to grow in popularity," said Martin Pool, project manager at Canonical. "Our Consultancy & Conversion, Training, and Support services give new and existing customers peace of mind when setting up such a vital piece of infrastructure."

Canonical is now offering comprehensive training in Bazaar to get staff up-to-speed quickly and transfer best practice knowledge. Both the content and delivery are tailored to meet the needs of individual teams and development environments, so developers can get the most out of the training sessions.

Visit the project site here: http://bazaar.canonical.com/en/, or Canonical's Bazaar page here: http://www.canonical.com/projects/bazaar/.

Canonical offers support program for Lotus Symphony

At IBM Lotusphere in January, Canonical announced a dedicated support

program for Lotus Symphony, the no-charge office productivity

component of the IBM Client for Smart Work (ICSW) on Ubuntu. This

support is made available to customers by Canonical through the IBM

and Canonical partner network.

The IBM Client for Smart Work helps organisations save up to 50

percent per seat on software costs versus a Microsoft-based desktop,

in addition to avoiding requisite hardware upgrades. The package

allows companies to use their existing PCs, lower-cost netbooks, and

thin clients.

The open standards-based core solution comprises Ubuntu 8.04 LTS

Desktop Edition and Lotus Symphony, which includes word processing,

spreadsheets, and presentations, fully supported by Canonical at US $5.50

per user per month, based on a 1000-seat deployment.

Optional solution components include desktop virtualisation and

recovery options using VERDE from Virtual Bridges, and a variety of

Lotus collaboration capabilities with choice of delivery model: on

site, on the cloud, or using an appliance.

"The economic case for Ubuntu and the IBM Client for Smart Work is

unarguable," says Shiv Kumar, EVP of Sales at ZSL, one of the first

IBM and Canonical partners to make this solution available. "The

addition of support from Canonical at super-competitive pricing means

companies have the reassurance of world class support through the

entire stack."

The IBM Client for Smart Work for Ubuntu core solution is available

(unsupported) at no charge from http://www.ubuntu.com/partners/icsw.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long-term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Taming Technology: The Case of the Vanishing Problem

By Henry Grebler

Author's Note

I am trying to compile a body of work on an overall theme that I call

Taming Technology. (I have wrestled with several different names, but

that is my title du jour.) The theme deals with troubleshooting,

problem solving, problem avoidance, and analysis of technology failures.

Case studies are an important part of this ambitious project.

"Installing Fedora Core 10" is an example. It deals with my attempts

to perform a network kickstart install of Fedora Core 10.

Part 1, Lessons from Mistakes, outlines my plans, briefly compares CD

installation with network installation, and proceeds to early success and

unexpected debacle.

In Part 2, Bootable Linux, I discuss some of the uses of the Knoppix Live

Linux CD, before explaining how I used Knoppix to gather information

needed for a network installation. I also use Knoppix to diagnose the

problems encountered in Part 1. This leads to the discovery that I

have created even more problems. Finally, I present an excellent

solution to the major problem of Part 1.

In Parts 3 and 4, I finally get back on track and present detailed

instructions for installing Fedora Core 10 over a network, using PXE

boot, kickstart, and NFS. Part 3 details PXE boot, including

troubleshooting; Part 4 takes us the rest of the way.

I think it's important for people to realise that they are not alone

when they make mistakes, that even so-called experts are fallible.

Isaac Asimov wrote a series of robot stories. (I don't imagine for one

moment that I'm Isaac Asimov.) To me, the most captivating facet of

these stories was the unanticipated consequences of interactions

between the Three Laws of Robotics. I like to think that I write about the

unanticipated consequences of our fallible minds: we want X, we think

we ask for X, but find we've got Y. Why?

[ Highly amusing coincidence: a possible answer to Henry's question is

contained in the "XY

Problem" - except for the terms X and Y being reversed. -- Ben ]

In my real life, I embark on a project, something goes wrong, and I

often discover that the problems multiply. After several detours,

I finally get a happy ending.

-- Henry Grebler

The Cowboy materialised at the side of my desk. I wondered uneasily

how long he had been standing there.

"When you've got some time," he began, "can I get you to look at a

problem?"

"Tell me about it," I replied.

He looked around, and pulled up a chair. This was going to be

interesting. His opening gambit is usually, "Quick question," almost

invariably followed by a very long and complicated discussion that

gives the lie both to the idea that the question will be short and the

implication that it won't take long to answer.

To me, a quick question is something like, "Is today Monday?" The

Cowboy's "quick" questions are about the equivalent of, "What's the

meaning of life?" or "Explain the causes of terrorism."

If he had decided to sit down, how momentous was this problem?

By way of preamble, he conceded that The Russian had been trying to

install Oracle on the machine in question. He wasn't sure if there was

a connection, but now The Russian couldn't run VNC. It turned out that,

when he tried to run a vncserver, he got a message like:

no free display number on suseq

Sure enough, when I tried to run a vncserver on suseq I got the

same message. I used ps to tell me how many vnc sessions were actually

running on this machine (ps auxw | grep vnc): only a small number (3 or 4).

When I tried picking a relatively high display number, I was told it

was in use:

vncserver :13

A VNC server is already running as :13

Similarly, for a really, really high number:

vncserver :90013

A VNC server is already running as :90013

This perhaps was enough information to diagnose the problem (it is, in

hindsight), but seemed too startling to be believable.

To get a better understanding of why vncserver was behaving so

strangely, I decided to trace it[1]. I used a bash function called 'truss'[2], so the

session looked a bit like this:

truss vncserver

New 'X' desktop is suseq:6

Starting applications specified in /home/henryg/.vnc/xstartup

Log file is /home/henryg/.vnc/suseq:6.log

This was even more unbelievable - if I traced vncserver, it seemed to

work! (It doesn't really matter whether it actually worked, or just

seemed to work; tracing the application changed its behaviour.) This

may be a workaround, but it explained nothing and raised even more

questions than were on the table going in.

At this point, I suggested to The Cowboy that it did not look like the

problem would be solved any time soon, and he might as well leave it

with me. I also thought a few minutes away from my desk wouldn't hurt.

After making myself a coffee, I went over to Jeremy and told him about

the strange behaviour under tracing. I wasn't really looking for help;

I just wanted to share what a weird day I was having.

I went back to my desk to do something that would succeed. There are

times during the task of problem-solving when you find yourself

picking losers. Whatever you try backfires. Several losses in a row

tend to send your radar out of kilter. At such times, it's a good idea

to get back onto solid ground, to do anything that is guaranteed to

succeed. Then, with batteries recharged after a couple of wins, your

frame of mind for tackling the problem is immensely improved.

A few minutes later, Jeremy came to see me. He had an idea. At first, I

could not make any sense of it. When I understood, I thought it was

brilliant - and certainly worth a try.

He suggested that I trace the bash command-line process from which I

was invoking vncserver.

So, in the xterm window where I had tried to run vncserver, I did

echo $$

5629

The number is the process id (pid) of the bash process.

In another xterm window, I started a trace:

truss -p 5629

Now, back in the first window, I invoked vncserver again:

vncserver

no free display number on suseq

So, Jeremy's idea had worked! (Trussing bash rather than vncserver had

allowed me to capture a trace of vncserver failing.)

I killed the truss and examined the output. What it showed was that

the vncserver process had tried many sockets and failed with "Address

already in use", e.g.:

bind(3, {sa_family=AF_INET, sin_port=htons(6099),

sin_addr=inet_addr("0.0.0.0")}, 16) = -1 EADDRINUSE (Address

already in use)

The example shows an attempt to bind to port 6099. This was near the bottom

of the truss output. Looking backwards, I saw that it had tried ports 6098,

6097, ... prior to port 6099. Clearly vncserver had cycled through a fairly

large number of ports, and had failed on all of them.
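The trace is consistent with a simple search over display numbers: for display n, try to bind port 6000+n (the X server port), giving up after n = 99, i.e. port 6099. The Python sketch below models that search. It assumes, from the trace rather than from vncserver's source, that display n also needs the VNC port 5900+n to be free; the real script may make further checks (for example, for X lock files) that this leaves out. The port test is passed in as a function so the logic can be tried without tying up real ports.

```python
import socket

def port_is_free(port, host="0.0.0.0"):
    """Return True if a TCP socket can be bound to (host, port) right now."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.bind((host, port))
    except OSError:  # EADDRINUSE ("Address already in use") and friends
        return False
    return True

def find_free_display(is_free=port_is_free, first=1, last=99):
    """Assumed model of the search: display n needs X port 6000+n and
    VNC port 5900+n free. Returns n, or None if every display is taken."""
    for n in range(first, last + 1):
        if is_free(6000 + n) and is_free(5900 + n):
            return n
    return None  # the case vncserver reports as "no free display number"

if __name__ == "__main__":
    n = find_free_display()
    print("no free display number" if n is None else f"free display :{n}")
```

With every port from 6001 to 6099 in use, as on suseq, find_free_display() returns None no matter how many VNC sessions are actually running.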

Why were all these ports busy? I tried to see which ports were in use:

netstat -na

Big mistake! (I should have known.) I was flooded with output. How

much output? Good question:

netstat -na | wc

After a very long time (10 or 15 seconds - enough time to issue the

same command on another machine), it came back with not much less than

50000! That's astronomical! On the other machine with the problem,

there were more than 40000 lines - still huge. A typical machine

runs about 500 sockets.
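On Linux, a similar count is available more cheaply than by formatting all of netstat's output: the kernel lists TCP sockets in /proc/net/tcp and /proc/net/tcp6, one per line, with the local address and port in hex and the connection state as a two-digit hex code. This sketch tallies sockets by state and by local port, which would also show which ports are being hogged. It covers TCP only; netstat -na also lists UDP and Unix-domain sockets plus header lines, so its line count won't match exactly.

```python
from collections import Counter

# Two-digit hex state codes as they appear in /proc/net/tcp.
TCP_STATES = {
    "01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
    "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
    "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
    "0A": "LISTEN", "0B": "CLOSING",
}

def socket_summary(proc_text):
    """Count sockets by state and by local port in /proc/net/tcp-style text."""
    by_state, by_port = Counter(), Counter()
    for line in proc_text.splitlines()[1:]:            # skip the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        local_addr, state = fields[1], fields[3]
        port = int(local_addr.rsplit(":", 1)[1], 16)   # "0100007F:17D5" -> 6101
        by_state[TCP_STATES.get(state, state)] += 1
        by_port[port] += 1
    return by_state, by_port

if __name__ == "__main__":
    states, ports = Counter(), Counter()
    for path in ("/proc/net/tcp", "/proc/net/tcp6"):
        try:
            with open(path) as f:
                s, p = socket_summary(f.read())
        except OSError:                                 # e.g. not on Linux
            continue
        states.update(s)
        ports.update(p)
    print("TCP sockets:", sum(states.values()))
    print("by state:", states.most_common())
    print("busiest local ports:", ports.most_common(5))
```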

After that, the rest was easy. The suspicion was that Oracle was

hogging all the ports. That's probably not how it's meant to behave,

but I don't have a lot of experience with Oracle.

I suggested to The Russian that he try to shut down Oracle and see if

that brought the number of sockets in use down to a reasonable number;

if not, he should reboot.

In either case, he should then start a trivial monitor in an xterm:

while true

do

netstat -na | wc

sleep 1

done

and then do whatever he was doing to get Oracle going. As soon as the

answer from the monitor rose sharply, he would know that whatever he

had been doing at that time was probably responsible. If he was doing

something wrong, he might then have an indication where to look. If

not, he had something to report to the Oracle people.
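The monitor loop above can also be sketched in Python with the "rose sharply" judgment made explicit: sharp_rises() flags any sample that exceeds the previous one by more than a threshold. The threshold of 1000 sockets is an arbitrary assumption, as is counting lines of netstat -na output (which needs netstat installed); the detection logic is kept separate so it can be checked against recorded numbers.

```python
import subprocess
import time

def count_netstat_lines():
    """Roughly what `netstat -na | wc -l` reports (assumes netstat is installed)."""
    out = subprocess.run(["netstat", "-na"], capture_output=True, text=True).stdout
    return len(out.splitlines())

def sharp_rises(samples, threshold):
    """Return (index, before, after) for each jump larger than threshold."""
    return [(i, samples[i - 1], samples[i])
            for i in range(1, len(samples))
            if samples[i] - samples[i - 1] > threshold]

def monitor(threshold=1000, interval=1.0, count=count_netstat_lines):
    """Poll forever, like the while/sleep loop, printing a line at each jump."""
    previous = count()
    while True:
        time.sleep(interval)
        current = count()
        if sharp_rises([previous, current], threshold):
            print(time.strftime("%H:%M:%S"), f"sockets jumped {previous} -> {current}")
        previous = current
```

Running monitor() in a spare xterm prints a timestamped line at each jump, which can then be matched against whatever was being done to Oracle at that moment; stop it with Ctrl-C.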

Lessons

1. Not all exercises in problem-solving result in a solution with all

the loose ends tidied up. In this case, all that had been achieved was

that a path forward had been found. It might lead to a solution, it

might not. If not, they'll be back.

2. Never underestimate the power of involving someone else. In this

case, the someone else came back and provided me with a path forward.

Often, in other cases (and I've seen this dozens of times, both as the

presenter and as the listener), the person presenting the problem

discovers the solution during the process of explaining the problem to

the listener. I have been thanked profusely for helping to solve a

problem I did not understand. It is, however, crucial that the listener

give the impression of understanding the presenter. For some reason,

explaining the problem to the cat is just not as effective.

Notes

[1] "Tracing"

is an activity for investigating what a process (program) is doing. Think

of it as a stethoscope for computer doctors. The Linux command is strace(1)

- "trace system calls and signals."

[2] After

years of working in mixed environments, I now tend to operate in my own

environment. In a classic metaphor for "ontogeny mimics phylogeny", my

abbreviations and functions contain markers for the various operating

systems I've worked with: "treecopy" from my Prime days in the early '80s,

"dsd" from VMS back in the mid '80s, "truss" from Solaris in 1998, etc.

In this case, I used my bash function, "truss", which supplies the options I

usually want, adjusted to suit the platform on which the command is

running. Under Solaris, my function "truss" will invoke the Sun command

"truss". Since this is a SUSE Linux machine, it will invoke the Linux

command "strace" (with different options). It's about concentrating on

what I want to do rather than how to do it.
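A wrapper along these lines can be sketched as follows. Henry's actual function is written in bash and its options aren't given, so the option choices here are assumptions. One option matters for Jeremy's trick, though: both Solaris truss and Linux strace take -f to follow child processes, and without it, attaching to the bash process with -p would show only bash's own system calls, not those of the vncserver it starts.

```python
import os
import platform

def trace_command(args, system=None):
    """Return the tracer command line for this platform (options are assumptions)."""
    system = system or platform.system()
    if system == "SunOS":      # Solaris: use Sun's truss
        return ["truss", "-f", *args]
    if system == "Linux":      # Linux: use strace instead
        return ["strace", "-f", *args]
    raise RuntimeError(f"no tracer configured for {system}")

def run_traced(args):
    """Replace the current process with the tracer, as a shell function would."""
    cmd = trace_command(args)
    os.execvp(cmd[0], cmd)
```

On the SUSE machine, run_traced(["-p", "5629"]) would exec strace -f -p 5629.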

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/grebler.jpg)

Henry was born in Germany in 1946, migrating to Australia in 1950. In

his childhood, he taught himself to take apart the family radio and put

it back together again - with very few parts left over.

After ignominiously flunking out of Medicine (best result: a sup in

Biochemistry - which he flunked), he switched to Computation, the name

given to the nascent field which would become Computer Science. His

early computer experience includes relics such as punch cards, paper

tape and mag tape.

He has spent his days working with computers, mostly for computer

manufacturers or software developers. It is his darkest secret that he

has been paid to do the sorts of things he would have paid money to be

allowed to do. Just don't tell any of his employers.

He has used Linux as his personal home desktop since the family got its

first PC in 1996. Back then, when the family shared the one PC, it was a

dual-boot Windows/Slackware setup. Now that each member has his/her own

computer, Henry somehow survives in a purely Linux world.

He lives in a suburb of Melbourne, Australia.

Copyright © 2010, Henry Grebler. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 171 of Linux Gazette, February 2010

Random signatures with Mutt

By Kumar Appaiah

Introduction

Ever since the start of the Internet, electronic mail has been a

mainstay for communication. Even as new social networking

phenomena such as online-friend connection databases and

microblogging are taking communication on the Web by storm, e-mail still

remains a preferred means of communication for both personal and

official purposes, and is unlikely to fade away soon.

One aspect of e-mail that we often observe is the sender's

signature - the tiny blurb that appears on the bottom. If it's an

official e-mail, then you're most likely constrained to keep it

sober, mentioning your position at work or similar

details. However, if you are mailing friends, or a mailing list, then

wouldn't it be nice to have a signature that is customized and/or

chosen from a random set of signatures? Several Web-based e-mail services

offer this already; a cursory Web search reveals that services such as

Gmail offer this feature. However, the customizability is limited, and

you are restricted in several ways. But if you use a customizable mail

client like Mutt, several fun things can be done with e-mail

signatures, using more specific details that are within your control.

In this article, I describe one of the possible ways of having random

signatures in your e-mail in the Mutt mail client, and switching

signature themes and styles based on certain characteristics, such as

the current folder or the recipient. I will assume that you have a

working configuration for Mutt ready, though the concept I describe

applies equally well to other mail clients which support this, such as

Pine. I'll make use of the BSD/Unix fortune program

(available in almost all GNU/Linux and *BSD distributions) in this

article, though any solution that chooses random quotes would do.

Preparation: collect your signatures for fortune

The Unix fortune program is a simple program that uses a

database of strings (called "fortunes") to randomly choose a string

and display it on the screen. The program is named appropriately,

since it uses a collection of files on various subjects to display

messages, much like those on fortune cookies.

It is usually easy to install the program on most GNU/Linux

distributions. For example, on Debian/Ubuntu-like systems, the

following command will fetch the fortune program and several databases

of strings:

apt-get install fortune-mod fortunes

Similarly, yum install fortune-mod should help on Red

Hat/Fedora-like systems. It shouldn't be too hard to figure out how to

install it on your GNU/Linux or BSD distribution.

Once installed, you can test out the existing files. For example, on

my Debian machine, the following command prints a random fortune from

one of the /usr/share/games/fortunes/linux or

/usr/share/games/fortunes/linuxcookie files:

fortune linux linuxcookie

If you now open one of the files in

/usr/share/games/fortunes/, such as linux, you'll

observe that they consist of strings which are separated by the "%"

sign, resembling the format of this file:

An apple a day keeps the doctor away.

%

A stitch in time saves nine.

%

Haste makes waste

Also, associated with each file, such as linux, is a

.dat file, e.g. linux.dat. The way fortune

works is that it looks for the file specified at the command line,

(absent such a command line option, choosing a random file among those

in the path searched by default), and looks for a corresponding .dat

file, which has a table of file offsets for locating strings in that

file. If it doesn't find the dat file corresponding to a text file

with signatures, it ignores that file.

While you can use the signatures present already, if you want to

create and use your own signatures, get them from wherever you want,

and place them in a file, separated by % signs as discussed above. For

example, I'll put the above file in a location called

$HOME/Signatures. Let me call my file $HOME/Signatures/adages. To use

it with fortune, I use the strfile program to

generate the table of offsets:

[~/Signatures] strfile adages

"adages.dat" created

There were 3 strings

Longest string: 38 bytes

Shortest string: 18 bytes
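To make the .dat idea concrete, here is a sketch that splits a fortune-format file into strings and records the byte offset at which each one starts, the kind of table strfile stores. It does not write strfile's binary .dat layout; it only computes the table in memory. Counting each string with its trailing newline reproduces the 38- and 18-byte figures above for the adages file (written without the blank lines this page shows between entries).

```python
def fortune_table(data, delim=b"%"):
    """Split fortune-format bytes into strings, noting each one's byte offset.

    Strings keep their trailing newline, matching how strfile's
    "Longest/Shortest string" byte counts come out for the adages file.
    """
    offsets, strings = [], []
    pos = start = 0
    current = b""
    for line in data.splitlines(keepends=True):
        if line.rstrip(b"\r\n") == delim:
            if current:                       # skip empty entries between %'s
                offsets.append(start)
                strings.append(current)
            current = b""
            start = pos + len(line)           # next string begins after the % line
        else:
            current += line
        pos += len(line)
    if current:                               # last string has no closing %
        offsets.append(start)
        strings.append(current)
    return offsets, strings

def summarize(strings):
    """Return (count, longest, shortest) in bytes, as strfile reports them."""
    lengths = [len(s) for s in strings]
    return len(lengths), max(lengths), min(lengths)

if __name__ == "__main__":
    adages = (b"An apple a day keeps the doctor away.\n%\n"
              b"A stitch in time saves nine.\n%\n"
              b"Haste makes waste\n")
    offsets, strings = fortune_table(adages)
    count, longest, shortest = summarize(strings)
    print(f"There were {count} strings")         # There were 3 strings
    print(f"Longest string: {longest} bytes")    # Longest string: 38 bytes
    print(f"Shortest string: {shortest} bytes")  # Shortest string: 18 bytes
    print("offsets:", offsets)                   # offsets: [0, 40, 71]
```

With the offsets in hand, a program can seek straight to a randomly chosen string instead of reading the whole file, which is the point of generating the table once with strfile.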

Now, running fortune with the full path of the file causes a random

string to be displayed from the above file. (Note that the full path

must be provided, if the file is outside the default fortunes

directory.)

$ fortune $HOME/Signatures/adages

Haste makes waste

The fortune program is versatile, and has several options.

Be sure to read its manual page for more details.

Tailoring fortune output for signatures

The fortune output is a random string, and often, these

strings are too long for use as good signatures in your

e-mail. To avoid such situations, we make use of the -s

option so fortune will display only short strings. The default

definition of a short string is up to 160 characters, but you can

override it with the -n option. For example:

fortune -n 200 -s <files>

could be a suitable candidate as a signature file.
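The -s/-n length rule is easy to model. This sketch assumes a list of strings already loaded (for instance, by splitting a file on % lines), keeps only entries of at most max_len characters, and picks one at random. fortune's own selection procedure differs (for one thing, it chooses among files as well as strings), so treat this as a model of the length rule, not of the program.

```python
import random

def pick_short(fortunes, max_len=160, rng=random):
    """Pick a random fortune of at most max_len characters, in the spirit of
    `fortune -s -n max_len`. Returns None if nothing is short enough."""
    short = [f for f in fortunes if len(f) <= max_len]
    return rng.choice(short) if short else None

if __name__ == "__main__":
    adages = ["An apple a day keeps the doctor away.",
              "A stitch in time saves nine.",
              "Haste makes waste"]
    print(pick_short(adages, max_len=20))  # Haste makes waste (the only one that fits)
```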

Configuring Mutt

Now, you're ready to use your neat fortunes in Mutt. If you have no

custom signature, and want to uniformly use a random signature from

your newly created signature file, as well as the default

linux file, then add the following to your

.muttrc:

set signature="fortune -n 200 -s linux $HOME/Signatures/adages|"

If this is what you wanted, you're done. However, if you want to

customize the signatures based on other parameters such as recipient,

or current folder, read on.

Customizing your signature based on different criteria

Let me describe my setup here. I use the procmail program

to filter my mail, and let's say that I've got the following folders

of interest:

- inbox: where all my personal e-mail arrives.

- linux-list: which consists of my local Linux user group mail.

- work-mail: where I read all my work mail.

Now, I want to have the following configuration:

- When I reply to or compose mail in the work mail folder, I want a fixed signature.

- When I send to linux-list, I want a signature from the linux fortunes database.

- Finally, in all other situations, I want a signature from my adages list.

This is simple to achieve using Mutt's hooks. Note that

I have interspersed some other settings for

demonstration.

# Set the default options

folder-hook . 'set record=+sent; set from=me@my-personal-email; set signature="fortune $HOME/Signatures/adages|"'

# Set the custom hooks

send-hook [email protected] 'set signature="fortune -n 200 -s linux|"'

folder-hook work-mail 'set record=+sent-work; set from=me@my-work-email; set signature="$HOME/.signature-work"'

What the above snippet does is to set, amongst other things, the

default signature to be my adages set, and automatically switch the

signature to a fixed file, $HOME/.signature-work, when I move

to the work-mail folder. Finally, if I send mail to the

[email protected] mailing address, the signature is

altered to display a fortune from the linux fortunes

file. Simple enough, and the possibilities are infinite!
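The policy those hooks implement can be modelled in a few lines of Python, which makes the precedence explicit: folder-hooks fire when you change folders and send-hooks fire when you compose, so a matching send-hook has the last word even inside work-mail. The list address below is a hypothetical stand-in, since the real one isn't shown here. The model is also stateless, whereas a value set by a Mutt hook stays in effect until something sets it again.

```python
import re

# Hypothetical stand-in for the list address, which the article doesn't show.
LINUX_LIST = re.compile(r"linux-list@example\.org", re.IGNORECASE)
WORK_FOLDER = re.compile(r"work-mail")  # folder-hook patterns are regexes too

def signature_for(folder, recipients):
    """Return the signature setting the hooks would leave in effect."""
    if any(LINUX_LIST.search(addr) for addr in recipients):
        return "fortune -n 200 -s linux|"        # send-hook, applied at compose time
    if WORK_FOLDER.search(folder):
        return "$HOME/.signature-work"           # folder-hook work-mail
    return "fortune $HOME/Signatures/adages|"    # folder-hook . (the default)
```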

Getting good fortunes

You can scout the Internet to get nice quotations and

interesting messages to add to your signatures collection; all you need to do is

to put each in the right format, use strfile to enable fortune

to use it, and plug it into your Mutt configuration. I often like to

hunt for quotations on Wikiquote,

amongst other Web sites. While I am sure most of us don't mean any harm

while using other people's sentences in our signatures, ensure that

you stay on the right side of the law while doing so.
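Putting collected quotations "in the right format" amounts to joining them with lines containing only %, as in the adages file shown earlier. A small helper, hypothetical and not part of fortune, can do the joining and reject any quote that itself contains a bare % line, which would otherwise be split in two.

```python
def to_fortune_format(quotes, delim="%"):
    """Join quotes into fortune's format: one entry per quote, separated by
    lines holding only the delimiter, ready for strfile. Blank quotes are dropped."""
    cleaned = [q.strip("\n") for q in quotes if q.strip()]
    for q in cleaned:
        if any(line == delim for line in q.splitlines()):
            raise ValueError(f"quote contains a bare {delim!r} line: {q!r}")
    return ("\n" + delim + "\n").join(cleaned) + "\n"

if __name__ == "__main__":
    quotes = ["A stitch in time saves nine.", "Haste makes waste"]
    print(to_fortune_format(quotes), end="")
```

Its output can be redirected into a file such as $HOME/Signatures/adages, after which strfile generates the .dat file as before.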

I'd like to mention the Linux One Stanza Tip project,

which provides a very nice set of signatures with GNU/Linux tips, and

more, in their distribution. They also provide templates for you to

generate your own signatures with designs and customizations. Sadly,

the project doesn't seem to be that active now, but the tips and

software provided are very apt and usable even today.

Conclusion

In conclusion, I hope I've managed to show you that with Mutt, you

can customize the way you handle signatures in a neat manner. Should

you have comments or suggestions to enhance or improve

this workflow, or just want to say that you liked this article, I'd

love to hear from you!

Talkback: Discuss this article with The Answer Gang

Kumar Appaiah is a graduate student of Electrical and Computer

Engineering at the University of Texas

at Austin, USA. Having used GNU/Linux since 2003, he has settled

with Debian GNU/Linux, and

strongly believes that Free Software has helped him get work done

quickly, neatly and efficiently.

Among the other things he loves are good food, classical music (Indian

and Western), bicycling and his home town, Chennai, India.

Copyright © 2010, Kumar Appaiah. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 171 of Linux Gazette, February 2010

The Next Generation of Linux Games - Word War VI

By Dafydd Crosby

Many people have made the switch to Linux, and the question that has

persisted since the kernel hit 0.01 is "where are the games?"

While the WINE project has done a

great job at getting quite a few mainstream games working, there are also

many Linux-native gems that are fantastic at whittling away the time. No

longer content with Solitaire clones, the community is responding with a

wide array of fun games.

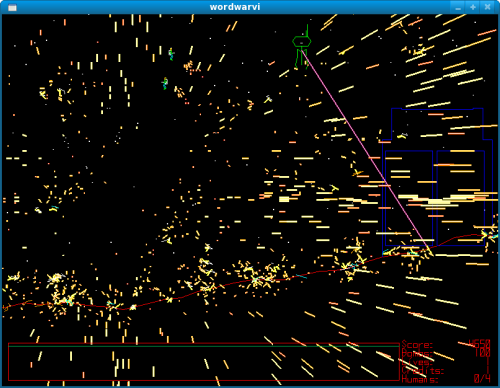

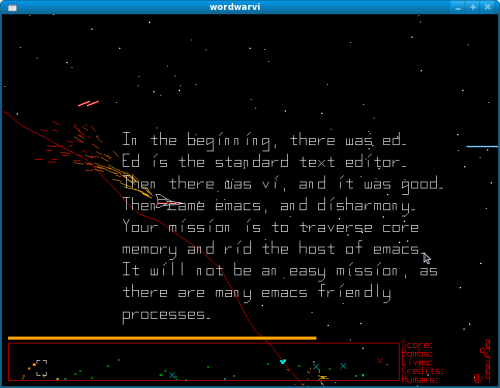

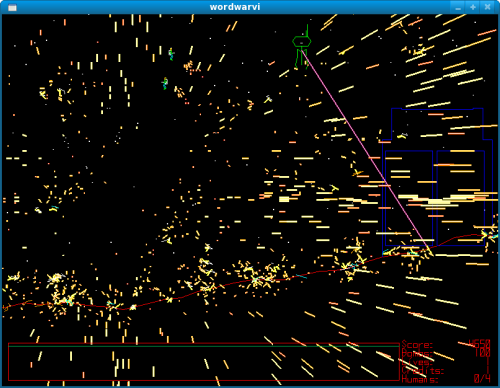

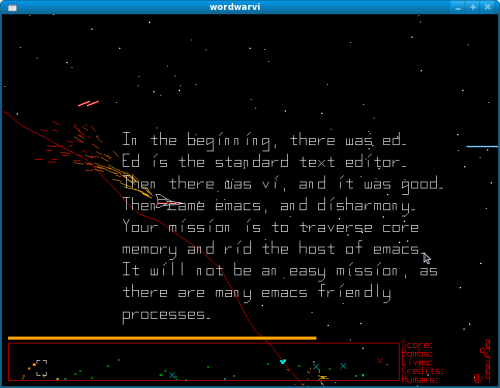

In this month's review, we are looking at Word War VI. With graphics

that initially look straight out of the Atari days, one might be tempted to

overlook the game for something a little showier. However, within minutes I

was hooked on the Defender-like gameplay, and the fireworks and

explosions rippling across the screen had satisfied my eye-candy

requirements.

The graphics look like the old '80s arcade shooters, but the polish and

smoothness of the visuals make it quite clear that this is an intentional

part of the game's aesthetic. Also, a quick look at the game's

man page reveals that there is a wealth of options:

adjusting how the game looks, the difficulty settings, the choice of bigger

explosions (which look awesome), and even a mode where you play as

Santa Claus (wordwarvi --xmas).

The controls are simple, mostly relying on the spacebar and arrow keys.

The goal is to grab as many humans as possible while avoiding various missiles, alien

ships, and other enemies. The real beauty is the 'easy to learn, hard to

master' approach, which suits the space-shooter genre just fine.

Stephen Cameron's little side-project about "The Battle of the Text

Editors" has blossomed into a respectable game. He has done a great job

documenting how to hack the game and extend it even further, leaving

endless possibilities of more fun and crazy modes. The source is located at

the Word War VI website,

though the game is likely already packaged by your distribution.

If there's a game you would like to see reviewed, or have an update on

one already covered, just drop me a

line.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/crosby.jpg)

Dafydd Crosby has been hooked on computers since the first moment his

dad let him touch the TI-99/4A. He's contributed to various different

open-source projects, and is currently working out of Canada.

Copyright © 2010, Dafydd Crosby. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 171 of Linux Gazette, February 2010

The Gentle Art of Firefox Tuning (and Taming)

By Rick Moen

Linux users tend, I've noticed, to complain about suckiness on the Web

itself, and in their own Web browsers — browser bloat, sites going

all-Flash, brain damage inherent in AJAX-oriented "Web 2.0" sites[1], and

death-by-JavaScript nightmares. However, the fact is: We've come a long

way.

In the bad old days, the best we had was the crufty and proprietary

Netscape Communicator 4.x kitchen sink^W^W"communications suite"

into which Netscape Navigator 3.x, a decent browser for its day, had

somehow vanished. That was dispiriting, because an increasing number of

complex Web pages (many of them FrontPage-generated) segfaulted the

browser immediately, and Netscape Communications, Inc. wasn't fixing it.

Following that was a chaotic period: Mozilla emerged in 1998, with Galeon

as a popular variant, and Konqueror as an independent alternative from the

KDE/Qt camp. Mozilla developers made two key decisions, the first being

the move in October 1998 to write an entirely new rendering engine,

which turned out to be a huge success. The rendering engine is now named

Gecko (formerly Raptor, then NGLayout), and produced the first

stunningly good, modern, world-class browsers, the Mozilla 0.9.x series,

starting May 7, 2001. I personally found this to be the first time it

was truly feasible to run 100% open source software without feeling like

a bit of a hermit. So, I consider May 7, 2001 to be open source's

Independence Day.

The second turning point was in 2003, with the equally difficult decision

that Mozilla's feature creep needed fixing by ditching the "Mozilla

Application Suite" kitchen-sink approach and making the browser separate

again: The Mozilla Project thus produced Firefox (initially called

"Phoenix") as a standalone browser based on a new cross-platform front-end

/ runtime engine called XULRunner (replacing the short-lived Gecko Runtime

Environment). At the same time, Galeon faltered and underwent a further

schism that produced the GNOME-centric, sparsely featured Epiphany browser,

and the XULRunner runtime's abilities inspired Conkeror (a light

browser written mostly in JavaScript), SeaMonkey (a revival of the

Communicator kitchen-sink suite), and Mobile Firefox (formerly Fennec,

formerly Minimo).

Anyway, defying naysayers' expectations, Firefox's winning feature

has turned out to be its extensions interface, usable by add-on code

written in XUL (XULRunner's user-interface markup language) and JavaScript. At some cost in browser

code bloat[2]

when you use it extensively, that interface has permitted development

of some essential add-ons, with resulting functionality unmatched by any

other Web browser on any OS. In this article, I detail several

extensions to tighten up Firefox's somewhat leaky protection of your

personal privacy, and protect you from Web annoyances. (For reasons

I've detailed

elsewhere, you should if possible get software from distro packages

rather than "upstream" non-distro software authors, except in

exceptional circumstances. So, even though I give direct download links

for three Firefox extensions below, please don't use those

unless you first strike out with your Linux distribution's own

packages.[3]) I also outline a number of modifications

every Firefox user should consider making to the default configuration,

again with similar advantages in user privacy and tightening of security

defaults. For each such change, I will cite the rationale, so you can

adjust your paranoia to suit.

The "Rich Computing Experience"[4] and Its Discontents

Here begins the (arguable) mild-paranoia portion of our proceedings:

Have you ever noticed how eager Web-oriented companies are to help you?

You suddenly discover that your software goes out and talks across the

Internet to support some "service" you weren't aware you wanted,

involving some commercial enterprise with whom you have no business

relations. There's hazy information about this-or-that information

being piped out to that firm; you're a bit unclear on the extent of it

— but you're told it's all perfectly fine, because there's a

privacy policy.

There's a saying in the Cluetrain Manifesto, written in part by longtime

Linux pundit Doc Searls, that "Markets are conversations." That is,

it's a negotiated exchange: You give something; you get something.

Sometimes you give information, and, oddly enough, Linux people seem to

often miss the key point: information has value, even yours. In a

market conversation, you're supposed to be able to judge for yourself

whether you want what's being offered, and if you want to donate

the cost thereof (such as some of your data). If you have no use

for what's being offered, you can and should turn off the "service" --

and that's what this article will cover (or at least let you decide what

"services" to participate in, instead of letting others decide for you).

The Essential Extensions

NoScript: The name is slightly misleading: This marvelous

extension lets you selectively disable JavaScript, Java, Flash, and a variety of other possibly

noxious and security-risking "rich content" (all of it disabled by default),

and enable, on a site-by-site basis via the context menu, whichever types of scripting you

really want to execute. More and more, those scripts are some variety

of what are euphemistically called "Web metrics", i.e., data mining

attempts to spy on you and track your actions and movements as you

navigate the Web. NoScript makes all of that just not work, letting you

run only the JavaScript, Flash, etc. that you really want. As a

side-benefit, this extension (like many of the others cited) in effect

makes the Web significantly faster by reducing the amount of junk code

your browser is obliged to process. Available from: http://noscript.net/
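To make the default-deny idea concrete, here is a toy model of the kind of policy NoScript applies: nothing runs unless you have explicitly allowed that content type for that site. This is a sketch under my own assumptions, not NoScript's actual code; the SitePolicy class and the three content-type names are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical content-type names for this toy model.
CONTENT_TYPES = {"javascript", "java", "flash"}


@dataclass
class SitePolicy:
    """Default-deny policy: nothing runs unless explicitly allowed per site."""
    allowed: dict = field(default_factory=dict)  # site -> set of content types

    def allow(self, site: str, content_type: str) -> None:
        if content_type not in CONTENT_TYPES:
            raise ValueError(f"unknown content type: {content_type}")
        self.allowed.setdefault(site, set()).add(content_type)

    def revoke(self, site: str, content_type: str) -> None:
        self.allowed.get(site, set()).discard(content_type)

    def may_run(self, site: str, content_type: str) -> bool:
        # Unknown sites and unlisted types fall through to "blocked".
        return content_type in self.allowed.get(site, set())


if __name__ == "__main__":
    policy = SitePolicy()
    policy.allow("example.org", "javascript")
    print(policy.may_run("example.org", "javascript"))          # True
    print(policy.may_run("example.org", "flash"))               # False
    print(policy.may_run("metrics.example.net", "javascript"))  # False
```

The point of the design is that the whitelist is the only path to "allowed". A data-mining script from a site you never approved simply has no entry, so it never runs.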

Adblock Plus ("ABP"): This extension does further filtering, preventing

a variety of noxious banner ads and other advertising elements

from being fetched at all. Highly recommended, though some people

prefer its predecessor, the original "Adblock" extension. Privacy

implication? Naturally, additional data mining gets disposed of, into

the bargain. Available from:

http://adblockplus.org/en/

ABP's effectiveness can be substantially enhanced by subscribing to

maintained ABP blocklists. I've found that a combination of EasyList and EasyPrivacy is

effective and reliable, and recommend them. (EasyList is currently

an ABP default.) Since these are just URL-pattern-matching blocklists,

subscriptions are not as security-sensitive as are Firefox extensions

themselves, but you should still be selective about which ones to adopt.
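For the curious, here is roughly how such URL-pattern matching works. The sketch below handles only a small subset of ABP filter syntax: `||` domain anchors, `|` start/end anchors, the `*` wildcard, the `^` separator, and `@@` exception rules. It translates each rule into a regular expression. Real ABP also understands `$` options, element-hiding rules, and much else, all of which this sketch simply skips. The function and class names, and the example rules, are hypothetical.

```python
import re

# "||" anchor: any URL scheme, then optionally any subdomain labels.
DOMAIN_ANCHOR = r"^[a-z][a-z0-9+.-]*://(?:[^/?#]*\.)?"
# "^" separator: any character other than a letter, digit, _ - . %, or the end.
SEPARATOR = r"(?:[^\w\-.%]|$)"


def filter_to_regex(rule: str) -> "re.Pattern[str]":
    """Translate one rule (a subset of ABP syntax) into a compiled regex."""
    prefix, suffix = "", ""
    if rule.startswith("||"):
        prefix, rule = DOMAIN_ANCHOR, rule[2:]
    elif rule.startswith("|"):
        prefix, rule = "^", rule[1:]
    if rule.endswith("|"):
        suffix, rule = "$", rule[:-1]
    parts = []
    for ch in rule:
        if ch == "*":
            parts.append(".*")
        elif ch == "^":
            parts.append(SEPARATOR)
        else:
            parts.append(re.escape(ch))
    return re.compile(prefix + "".join(parts) + suffix, re.IGNORECASE)


class BlockList:
    def __init__(self, lines):
        self.block, self.allow = [], []
        for line in lines:
            line = line.strip()
            # Skip blanks, headers, comments, element hiding and "$option"
            # rules: this sketch does not understand them.
            if (not line or line.startswith(("[", "!"))
                    or "##" in line or "#@#" in line or "$" in line):
                continue
            if line.startswith("@@"):
                self.allow.append(filter_to_regex(line[2:]))
            else:
                self.block.append(filter_to_regex(line))

    def should_block(self, url: str) -> bool:
        # Exception ("@@") rules override blocking rules.
        if any(rx.search(url) for rx in self.allow):
            return False
        return any(rx.search(url) for rx in self.block)


if __name__ == "__main__":
    rules = BlockList([
        "[Adblock Plus 2.0]",
        "! hypothetical example rules",
        "||ads.example.com^",
        "/banner/*.gif",
        "@@||ads.example.com/acceptable/",
    ])
    print(rules.should_block("http://ads.example.com/track.js"))         # True
    print(rules.should_block("https://ads.example.com/acceptable/a.js"))  # False
    print(rules.should_block("http://www.example.org/banner/top.gif"))   # True
    print(rules.should_block("http://myads.example.com/"))               # False
```

Because a subscription is just data fed to a matcher like this, rather than code that runs inside your browser, it carries less risk than an extension does. It still decides what you see, though.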

CustomizeGoogle: This extension strips most of the advertising and

data-mining features out of Google searches, makes

your Google preferences persistent for a change, adds links to

optionally check alternative search engines' results on the same

queries, anonymises the Google userid string sent when you perform a

Google Web search (greatly reducing the ability of Google's data

mining to link up what subjects you search for with who you are), etc.

Be aware that you'll want to go through CustomizeGoogle's preferences

carefully, as most of its improvements are disabled by default.

Available from:

http://www.customizegoogle.com/

For the record, I like Google, Inc. very much, even

after its 2007 purchase of notorious spying-on-customers firm

DoubleClick, Inc., which served as a gentle reminder that the parent

firm's core business, really, intrinsically revolves around data

mining/collection and targeted advertising. What I (like, I assume,

LG readers) really want is to use its services only at my

option, not anyone else's, and to negotiate what I'm giving them, the

Mozilla Corporation, and other business partners, rather than having it

taken behind my back. For example, Ubuntu's first alpha release of

9.10 "Karmic Koala" included what Jon Corbet at LWN.net called "Ubuntu's multisearch surprise":

a custom Firefox search bar that gratuitously sent users to a Google

"search partner" page to better (and silently, without disclosure)

collect money-making data about what you and I are up to. (This feature

was removed following complaints, but the point is that we the users

were neither informed nor asked about whether we wanted to be monitored

a bit more closely to make this "service" possible.)

User Agent Switcher: This extension doesn't strictly

concern security and privacy, but it is useful both in itself and

as a way to make a statement to Web-publishing companies about

standards. It turns out that many sites inspect the "User Agent" string

your browser sends with every request, and then decide on the basis of

that string whether to send a Web page at all, and which Web page to

send. User Agent Switcher lets you pick dynamically which of several

popular Web browsers you want Firefox to claim to be, or you can write

your own. I usually have mine send "W3C standards are important.

Stop f---ing obsessing over user-agent already", for reasons my friend

Karsten M. Self has given:

In the finest Alice's Restaurant tradition, if one person does this,

they may think he's sick, and they'll deny him the Web page. If two

people do it, in harmony, well, they're free speech fairies, and they

won't serve them either. If three people do it, three, can you imagine,

three people setting their user-agent strings to "Stop f---ing obsessing

over user-agent...". They may think it's an organization. And can you

imagine fifty people a day? Friends, they may think it's a movement. And

that's what it is... If this string shows up in enough Web server logs,

the message will be felt.

Available from:

http://chrispederick.com/work/user-agent-switcher/
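The User-Agent is nothing more than an HTTP request header that the client fills in as it pleases. The sketch below shows this with Python's standard urllib. It also includes a deliberately naive server-side "sniffer" of the brittle sort described above. That function is hypothetical and not taken from any real site.

```python
import urllib.request

# The protest string quoted above; any text at all will do.
PROTEST_UA = ("W3C standards are important. "
              "Stop f---ing obsessing over user-agent already")


def build_request(url: str, user_agent: str = PROTEST_UA) -> urllib.request.Request:
    """Build (without sending) a request that carries our own User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def naive_sniffer(user_agent: str) -> str:
    """Hypothetical server-side logic: the page served depends on a
    substring of the User-Agent, not on standards compliance."""
    if "Firefox" in user_agent or "MSIE" in user_agent:
        return "full page"
    return "your browser is not supported"


if __name__ == "__main__":
    req = build_request("http://example.com/")
    # urllib stores header names via str.capitalize(), hence "User-agent".
    print(req.get_header("User-agent"))
    print(naive_sniffer(req.get_header("User-agent")))
    # Actually sending it needs network access:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status)
```

A standards-compliant browser gets turned away here purely because of what it calls itself, which is exactly the behaviour the protest string pokes at.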

I list a number of other extensions that might be worth considering

on my personal pages.

[ I can also recommend the Web Developer toolbar extension.

Even if you're not a Web developer, the tool can help you to deactivate

obnoxious style sheets and layouts. In addition, you can instantly clear

all cookies and HTTP authentications for the site you are viewing

(by using the menu item Miscellaneous/Clear Private Data/...).

-- René ]

Configuration of the Browser Itself

Edit: Preferences: Content: Click "Advanced" next to "Enable JavaScript"

and deselect all. Reason: There's no legitimate need for JavaScript to

fool with those aspects of your browser (moving or resizing windows,

replacing context menus, altering the status bar, and the like). Then

uncheck "Enable Java", unless you actually use Java applets in your

Web browser. (You can always re-enable it if you ever need it.)

Edit: Preferences: Privacy: Uncheck "Accept third-party

cookies." Reason: I've only seen one site where they were essential

to the site's functionality, and even then it was clearly also being

used for data mining. Enable "Always clear my private data when I close

Firefox". Click "Settings" and check all items. Reason: When you

ask to delete private data, it should actually happen. Disable

"Remember what I enter in forms and the search bar". Reason: Your

prior forms data is often security-sensitive. Consider disabling

"Keep my history for n days" and "Remember what I've downloaded".

Reason: You don't get much benefit from keeping this private data

around persistently, so why log it?
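The "third-party" distinction above comes down to comparing the site that sets a cookie with the site of the page you're actually viewing. Here is a rough sketch of that comparison. It assumes a naive notion of "site" (the last two DNS labels). Real browsers consult the Public Suffix List instead, so treat the helper names and the rule itself as illustrative only.

```python
from urllib.parse import urlsplit


def naive_site(host: str) -> str:
    """Approximate a host's 'site' as its last two DNS labels.
    Assumption: real browsers use the Public Suffix List instead, so this
    wrongly lumps together hosts like a.example.co.uk and b.other.co.uk."""
    labels = host.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def is_third_party(page_url: str, request_url: str) -> bool:
    """True if request_url belongs to a different site than the page being viewed."""
    page_host = urlsplit(page_url).hostname or ""
    request_host = urlsplit(request_url).hostname or ""
    return naive_site(page_host) != naive_site(request_host)


if __name__ == "__main__":
    page = "http://www.example.com/article.html"
    print(is_third_party(page, "http://static.example.com/style.css"))  # False
    print(is_third_party(page, "http://tracker.example.net/pixel.gif"))  # True
```

A tracking pixel embedded on hundreds of unrelated sites is third-party on every one of them. That is why refusing its cookies breaks so little while cutting off so much cross-site tracking.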

Edit: Preferences: Security: Visit "Exceptions" to "Warn me

when sites try to install add-ons" and remove all. Reason: You should

know whenever any site tries it. Disable "Tell me if the site I'm visiting is a suspected attack

site" and "Tell me if the site I'm visiting is a suspected forgery".

Reason: Eliminate periodic visits to an anti-phishing, anti-malware

nanny site. Really, can't you tell eBay and your bank from fakes, and

can't you deal with malware by just not running it? "Remember passwords

for sites": If you leave this enabled, remember that Firefox will keep

them in a central data store that is only moderately obscured, and then

only if you set a "master password". Don't forget, too, that even the

list of sites to "Never Save" passwords for, which isn't obscured at

all, can be very revealing. The cautious will disable this feature

entirely — or, at minimum, avoid saving passwords for any site that

is security-sensitive.

Edit: Preferences: Advanced: On the General tab, enable

"Warn me when Web sites try to redirect or reload the page". Reason:

You'll want to know about skulduggery. On the Update tab, disable

"Automatically check for updates to: Installed Add-ons" and

"Automatically check for updates to: Search Engines", and select

"When updates to Firefox are found: Ask me what I want to do".

Reason: You really want those to happen when and if you choose.

Now we head over to URL "about:config". You'll see the

condescending "This might void your warranty!" warning. Select the

cutesy "I'll be careful, I promise!" button and uncheck "Show this

warning next time". Reason: It's your darned browser config.

Hypothetically, if you totally screw up, at worst you can close Firefox,

delete ~/.mozilla/firefox/ (after saving your bookmarks.html), and try

again.
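Changes made through about:config are saved in the profile's prefs.js. Firefox also reads a user.js file from the same profile directory at every startup and applies each `user_pref()` line in it. That makes user.js a convenient way to keep the settings discussed below under your own control. The sketch below writes those values into such a file. The function names are hypothetical. You must supply the profile directory yourself, because its name varies per installation. The script deliberately refuses to overwrite an existing user.js.

```python
import json
from pathlib import Path

# Values from the about:config settings discussed in this article.
ARTICLE_PREFS = {
    "browser.urlbar.matchOnlyTyped": True,
    "browser.tabs.tabMinWidth": 60,
    "browser.tabs.tabMaxWidth": 60,
    "bidi.support": 0,
    "browser.ssl_override_behavior": 2,
    "browser.xul.error_pages.expert_bad_cert": True,
    "network.prefetch-next": False,
    "xpinstall.enabled": False,
    "permissions.default.image": 3,
}


def user_pref_line(name: str, value) -> str:
    """Render one pref as a user.js line; json.dumps yields JavaScript-compatible
    literals (true/false, quoted strings, plain integers)."""
    return f"user_pref({json.dumps(name)}, {json.dumps(value)});"


def write_user_js(profile_dir, prefs=ARTICLE_PREFS) -> Path:
    """Create <profile_dir>/user.js; refuses to overwrite an existing one."""
    path = Path(profile_dir) / "user.js"
    with path.open("x", encoding="utf-8") as fh:  # "x" raises FileExistsError
        for name, value in prefs.items():
            fh.write(user_pref_line(name, value) + "\n")
    return path


if __name__ == "__main__":
    # Preview what would be written; call write_user_js(profile_dir) to create it.
    for name, value in ARTICLE_PREFS.items():
        print(user_pref_line(name, value))
```

Bear in mind that user.js is reapplied on every start. Any later about:config edit to one of these preferences will be silently reverted at the next launch until you remove the matching line.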

Set "browser.urlbar.matchOnlyTyped = true": This reins in the

Firefox 3.x "Awesome Bar", which suggests matches in the location bar based

on what it learns from watching your bookmarks and history, so that it

offers only addresses you've actually typed. The feature is not (in my

opinion) all that useful, and it leaves information on your

browsing habits lying around.

Set "browser.tabs.tabMinWidth = 60" and "browser.tabs.tabMaxWidth =

60": Reduces tab width by about 40%. Reason: They're too wide.

Set "bidi.support = 0": Reason: Unless you're going to do data

input in Arabic, Hebrew, Urdu, or Farsi, you won't need bidirectional

support for text areas. Why risk triggering bugs?

Set "browser.ssl_override_behavior = 2" and

"browser.xul.error_pages.expert_bad_cert = true": This reverts

Firefox's handling of untrusted SSL certificates to the 2.x behaviour.

Reason: The untrusted-SSL dialogues in Firefox 3.x

are supremely annoying, "Do you want to add an exception?" prompt and

all.

Set "network.prefetch-next = false": Disables link prefetching, i.e.,

fetching ahead of time the pages a site's markup hints you might want

next. Reason: Avoids needless waste of bandwidth, and keeps yet more

information about your browsing from being sent out.
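Firefox's link prefetching acts on hints that the page itself supplies. These hints are `<link>` elements whose `rel` is `prefetch` or `next` (or equivalent HTTP `Link:` headers). The sketch below uses Python's standard HTML parser to list such hints in a page, i.e., the URLs a prefetching browser could fetch without your asking. The class name and sample page are hypothetical, and the sketch ignores `Link:` headers.

```python
from html.parser import HTMLParser


class PrefetchHintFinder(HTMLParser):
    """Collect the targets of <link rel="prefetch"> and <link rel="next"> hints."""

    def __init__(self) -> None:
        super().__init__()
        self.hints: list = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        # rel may hold several space-separated values; a bare attribute gives None.
        rel = (attr_map.get("rel") or "").lower().split()
        href = attr_map.get("href")
        if href and ("prefetch" in rel or "next" in rel):
            self.hints.append(href)


if __name__ == "__main__":
    page = """<html><head>
    <link rel="next" href="/article?page=2">
    <link rel="prefetch" href="http://cdn.example.net/big.js" />
    <link rel="stylesheet" href="/style.css">
    </head><body></body></html>"""
    finder = PrefetchHintFinder()
    finder.feed(page)
    print(finder.hints)  # ['/article?page=2', 'http://cdn.example.net/big.js']
```

Note that the second hint points at a different host entirely. With prefetching on, that host learns about your visit even if you never click anything.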

Set "xpinstall.enabled = false": Globally prevents Firefox from

checking for updates to Firefox and installed extensions. Reason:

This really should happen on your schedule, not Firefox's.

Set "permissions.default.image = 3": This control specifies which