...making Linux just a little more fun!

July 2009 (#164):

- Mailbag

- Talkback

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- Layer 8 Linux Security: OPSEC for Linux Common Users, Developers and Systems Administrators, by Lisa Kachold

- Building the GNOME Desktop from Source, by Oscar Laycock

- Joey's Notes: VSFTP FTP server on RHEL 5.x, by Joey Prestia

- Understanding Full Text Search in PostgreSQL, by Paul Sephton

- Fedora 11 on the Eee PC 1000, by Anderson Silva

- Sending and Receiving SMS from your Linux Computer, by Suramya Tomar

- Ecol, by Javier Malonda

- XKCD, by Randall Munroe

- The Linux Launderette

Mailbag

This month's answers created by:

[ Ben Okopnik, Kapil Hari Paranjape, Paul Sephton, Rick Moen, Robos, Steve Brown, Thomas Adam ]

...and you, our readers!

Still Searching

icewm question. maximized windows stick to top of screen can this be changed?

Ben Okopnik [ben at linuxgazette.net]

Tue, 16 Jun 2009 20:16:07 -0500

----- Forwarded message from Mitchell Laks <[email protected]> -----

Date: Mon, 8 Jun 2009 15:13:39 -0400

From: Mitchell Laks <[email protected]>

To: [email protected]

Subject: icewm question. maximized windows stick to top of screen can this

be changed?

Hi Ben,

I noticed your articles on icewm and wondered if you know the answer to this question.

I have been using icewm and am very happy with it. One issue for me is that

maximizing a window, which i do as an expedient way to "make it bigger"

(so that it fills the screen) also seems to make the window 'stick' to the top of the

screen.

Thus the screen if maximized is stuck and I am unable to move the screen around with my mouse.

I have to manually hit 'Restore' (Alt-F5) to get out of the stuck mode. Then I can move it again.

This is not the default behavior in a kde (kwin?) session on my machine. I have

noticed this type of behavior before in gnome. It is my main reason why I have used kde in the past.

Is there any setting that I can set so that I would have 'maximize' but not the

'sticking'?

Is there any preferences I can set to arrange this?

I am willing to recompile icewm from scratch if necessary. Where is this behavior

set in the code?

Thanks!

Mitchell Laks

----- End forwarded message -----

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

Our Mailbag

file system related question

ALOK ANAND [alokslayer at gmail.com]

Mon, 22 Jun 2009 12:32:53 +0530

Please advise how much space needs to be allocated for each of the directories
of an openSUSE 11.1 operating system if you intend to install it by manually
allocating space (i.e., by not following the layout proposed by YaST2). I'm
looking for a detailed explanation of the following directories and the
space needed (I have a 160 GB hard drive). Also give me a general explanation
of each of the following directories (for example, what they store and the
minimum space needed on a server-class system):

/

/bin

/boot

/home

/usr

/var

/tmp

/opt

/sbin

An early reply will be greatly appreciated.

Thank you

[ Thread continues here (6 messages/10.30kB) ]

linux command to read .odt ?

J. Bakshi [j.bakshi at unlimitedmail.org]

Tue, 9 Jun 2009 21:11:52 +0530

Dear all,

Like catdoc (to read .doc), is there any command to read .odt from the
command line? I did a lot of googling but have not found any command like
catdoc.

On the other hand, I have found that .odt is actually stored in zip
format. So I executed unzip on an .odt and it successfully
extracted a lot of files, including "content.xml", which actually has
the content.

Is there any tool which can extract the plain text from .xml?

Please suggest.

The content.xml looks like

<office:document-content office:version="1.2">

<office:scripts/>

-

<office:font-face-decls>

<style:font-face style:name="Times New Roman" svg:font-family="'Times

New Roman'" style:font-family-generic="roman"

style:font-pitch="variable"/>

<style:font-face style:name="Arial" svg:font-family="Arial"

style:font-family-generic="swiss" style:font-pitch="variable"/>

<style:font-face style:name="Arial1" svg:font-family="Arial"

style:font-family-generic="system" style:font-pitch="variable"/>

</office:font-face-decls>

<office:automatic-styles/>

-

<office:body>

-

<office:text>

-

<text:sequence-decls>

<text:sequence-decl text:display-outline-level="0" text:name="Illustration"/>

<text:sequence-decl text:display-outline-level="0" text:name="Table"/>

<text:sequence-decl text:display-outline-level="0" text:name="Text"/>

<text:sequence-decl text:display-outline-level="0" text:name="Drawing"/>

</text:sequence-decls>

<text:p text:style-name="Standard">This is a test </text:p>

</office:text>

</office:body>

</office:document-content>

Note the content

<text:p text:style-name="Standard">This is a test </text:p>

[ Thread continues here (5 messages/5.31kB) ]
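For the simple document shown above, one rough way to get at the text is to stream content.xml out of the archive and strip the tags with sed. This is only a sketch, not a replacement for a real ODF converter: the function name xml_to_text and the file name document.odt are made up for illustration, GNU sed is assumed (for "\n" in the replacement), tags that span several lines are not handled, and XML entities such as &amp; are left undecoded.

```shell
#!/bin/bash
# Hypothetical helper: read ODF content.xml on stdin, write plain text.
# Assumes GNU sed, which accepts \n in the replacement text.
xml_to_text() {
    # End each paragraph with a newline, then delete every remaining tag.
    sed -e 's/<\/text:p>/\n/g' -e 's/<[^>]*>//g'
}

# Usage (assumes unzip is installed; document.odt is a placeholder name):
#   unzip -p document.odt content.xml | xml_to_text
```

The -p option makes unzip write the named archive member to standard output, so nothing has to be extracted to disk first.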

Laptop installation problem

jack [rjmayfield at satx.rr.com]

Wed, 10 Jun 2009 11:57:47 -0500

I have a Dell Latitude 400 .... is there any Linux OS that will work on

this laptop?

[ Thread continues here (4 messages/2.01kB) ]

[Mailman-Users] Virtual domain not quite working on HTTP (but fine on SMTP)

Kapil Hari Paranjape [kapil at imsc.res.in]

Thu, 18 Jun 2009 19:42:43 +0530

[[[ This references a thread that has been moved to the LG Launderette,

re: the various LG mailing lists. -- Kat ]]]

Hello,

Disclaimer: Unlike you I have only been running mailman lists for a

year and a half so I am unlikely to be of any help!

On Wed, 17 Jun 2009, Rick Moen wrote:

> Greetings, good people. Problem summary: After server rebuild,

> virtual hosts work for SMTP, but Mailman's Web pages are appearing

> for the main host only and not the virtual host.

Are the actual pages being generated in /var/lib/mail/archives/ ?

Is the apache virtual domain configured to use the mailman cgi-bin?

These were the only two questions that occurred to me when I read

through your mail.

Regards,

Kapil.

--

[ Thread continues here (2 messages/3.48kB) ]

Quoting question

Robos [robos at muon.de]

Sat, 13 Jun 2009 20:21:08 +0200

Hi TAG,

I need some help.

I'm writing a script in which I use rsync and some functions. The test

script looks like this:

#!/bin/bash

set -x

LOGDATEI=/tmp/blabla

backup_rsync()

{

/usr/bin/rsync -av $@

}

backup_rsync '/tmp/rsync-test1/Dokumente\ und\ Einstellungen' /tmp/rsync-test2/

This "Dokumente und Einstellungen" is the windows folder Documents and

Settings.

I'm stumped now, I've tried lots of combinations but can't seem to find

the right combination so that the script works. The spaces in the name

"Dokumente und Einstellungen" break the script. How do I have to

escape/quote it?

A little help, please?

Regards and thanks in advance

Udo 'robos' Puetz

[ Thread continues here (3 messages/2.43kB) ]
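As a general bash point, separate from whatever the thread settled on: an unquoted $@ is split again on whitespace, while "$@" hands each argument on exactly as it was received. Backslashes inside single quotes are also kept literally, so the path should be written with plain spaces. The sketch below shows the difference; show_args, pass_unquoted and pass_quoted are hypothetical helpers added only for the demonstration.

```shell
#!/bin/bash
# Print each argument on its own line, in brackets, to make word
# boundaries visible.
show_args() { printf '[%s]\n' "$@"; }

pass_unquoted() { show_args $@; }     # arguments are re-split on spaces
pass_quoted()   { show_args "$@"; }   # arguments are passed through intact

# Prints four bracketed words: the path is broken at each space.
pass_unquoted '/tmp/rsync-test1/Dokumente und Einstellungen' /tmp/rsync-test2/

# Prints two bracketed words: source path and destination.
pass_quoted '/tmp/rsync-test1/Dokumente und Einstellungen' /tmp/rsync-test2/

# The same idea applied to the function from the question
# (defined here but not called, so this block runs without rsync):
backup_rsync() {
    /usr/bin/rsync -av "$@"
}
# backup_rsync '/tmp/rsync-test1/Dokumente und Einstellungen' /tmp/rsync-test2/
```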

Fixed. Was: Virtual domain not quite working on HTTP (but fine on SMTP)

Rick Moen [rick at linuxmafia.com]

Thu, 18 Jun 2009 11:48:43 -0700

----- Forwarded message from Rick Moen <[email protected]> -----

Date: Thu, 18 Jun 2009 11:47:57 -0700

From: Rick Moen <[email protected]>

To: Mark Sapiro <[email protected]>

Cc: [email protected]

Subject: Re: [Mailman-Users] Virtual domain not quite working on HTTP (but

fine on SMTP)

Organization: Dis-

Quoting Mark Sapiro ([email protected]):

> Yes it is. 'listinfo' is a CGI. You need a

> ScriptAlias /mailman/ "/usr/local/mailman/cgi-bin/"

The Apache conf has:

ScriptAlias /mailman/ /usr/lib/cgi-bin/mailman/

I didn't harp on that in either of my posts because I had pointed out

that the Mailman Web pages _do work_ for the default host -- and also

invited people to have a look at http://linuxmafia.com/mailman/listinfo

and subpages thereof, if they had any doubts.

-=but=-, the above ScriptAlias was neatly tucked into the stanza for the

default host. And:

> (or whatever the correct path is) in your httpd.conf where it will

> apply to the lists.linuxgazette.net host. I.e. it needs to be in the

> VirtualHost block for each host with lists or it needs to be outside

> of the virtual hosts section so it applies globally.

That was it! Fixed, now.

Damn. Bear in mind, I've just had a forced transition from Apache 1.3.x

to Apache2 (on account of sudden destruction of the old box), so my

excuse is that Debian's Apache2 conffile setup is rather different from

what I was used to.

Thank you, Mark. And thank you, good gentleman all.

--

Cheers,              Notice:  The value of your Hofstadter's Constant
Rick Moen            (the average amount of time you spend each month
[email protected]    thinking about Hofstadter's Constant) has just
McQ!  (4x80)         been adjusted upwards.

[ Thread continues here (2 messages/2.68kB) ]

Jim, HELP needed, 5-minute solution needed by computer industry

Aviongoo Sales [sales at aviongoo.com]

Mon, 15 Jun 2009 01:10:14 -0400

Jim,

I'm looking for a "simple" client server solution. I just want to

upload files from my PC to my server under program control.

The client is Windows XP and the server is Linux.

I was recently forced to write a VB application to collect files on the

Windows PC. Now, I need to upload the files to my Linux server. I

usually use FileZilla, however, there is no easy accepted way to

automate the queue building process in Filezilla, therefore manual

intervention is still required.

I need to get rid of all manual intervention.

My old PHP applications had a custom server to server file transfer

implemented. So, I can use that solution on the server if the client

were cooperative. I set up an Apache server on the PC client but

dynamic DNS is no longer allowed by my ISP (as far as I can determine).

So, I started looking for a simple custom program solution. VB.NET was

my first choice since I can reuse a lot of my recently developed code.

I've looked for days (weeks) now and tried 20 or so pieces of code that

claimed to allow client to server file transfer. None have worked so

far.

So, the challenge is this - come up with a solution (preferably PHP on

Linux and VB.NET on Windows) that a programmer can implement in five

minutes that will upload a file from the VB.NET Windows client machine

to the PHP Linux server machine. I just want to copy the code and have

it work. I can't believe it's that hard!!!!

If you get something to work and document it well, I've got to believe

millions of folks will be viewing your result. The various search terms

I use in Google to try to discover a solution indicate millions are

looking!

Thanks,

Bill

[ Thread continues here (29 messages/75.42kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 164 of Linux Gazette, July 2009

Talkback

Talkback:141/brownss.html

[ In reference to "An NSLU2 (Slug) Reminder Server" in LG#141 ]

Ben Okopnik [ben at linuxgazette.net]

Thu, 4 Jun 2009 08:26:03 -0500

----- Forwarded message from "Silas S. Brown" <[email protected]> -----

Date: Fri, 29 May 2009 20:34:43 +0100

From: "Silas S. Brown" <[email protected]>

To: [email protected]

Subject: Talkback:141/brownss.html

In my article in LG 141, "An NSLU2 (Slug) Reminder

Server", I suggested running a script to check for

soundcard failure and repeatedly beeping the speaker

to draw attention to the problem when this happens.

However, since upgrading from Debian Etch to Debian Lenny

(LG 161 Upgrading Your Slug), I have found this script to

be most unreliable: the NSLU2 can crash completely in the

middle of a beep, leaving the speaker sounding

permanently and the NSLU2 unusable until you cut the

power. The probability of this crash seems to be well

above an acceptable level, and the watchdog somehow fails

to reboot the NSLU2 when it happens.

I have not been able to get any clue about why this

failure now occurs in the new kernel + OS, except to say

that it seems more likely to occur when the operating

system is under load. A "workaround" is to increase the

length of time between the beeps (say, beep every 10

seconds instead of every second), but this merely reduces

the probability of the crash; it does not eliminate it.

Does anyone have any insight into this?

Thanks. Silas

--

Silas S Brown http://people.pwf.cam.ac.uk/ssb22

* Ben Okopnik * Editor-in-Chief, Linux Gazette *

http://LinuxGazette.NET *

157/lg_mail.html

Thomas Adam [thomas.adam22 at gmail.com]

Thu, 4 Jun 2009 13:28:39 +0100

---------- Forwarded message ----------

From: Sigurd Solås <[email protected]>

Date: 2009/5/27

Subject: How to get a random file name from a directory - thank you for the tip!

To: [email protected]

Hello Thomas,

I just wanted to thank you for the shell script you provided in the Linux Gazette on how
to pick a random file from a directory. Yesterday I was searching the Internet for a simple
way to do this, and then I came across your method in a post there.

The script you provided is now in use on a computer that plays wav audio files from the
hard disk, and the sound card is connected to the stereo; in effect, the computer works
as a conventional CD-changer.

Here you can see how your method is implemented in the script aplay.sh. It is started
like this from the command line:

$ aplay.sh MusicDirectory

aplay.sh:

------------------------------------------------------------------

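#!/bin/bash
# Arrays and $RANDOM are bash features, so this must run under bash, not plain sh.
while sleep 1; do
    # Collect the .wav files in the directory given as the first argument
    myfiles=("$1"/*.wav)
    num=${#myfiles[@]}
    # Play one file chosen at random
    aplay -D hw:0,0 "${myfiles[RANDOM % num]}"
done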

------------------------------------------------------------------

The aplay.sh script plays music in an endless loop. Pressing Ctrl-C once
jumps to the next song; pressing Ctrl-C twice in quick succession exits the script.

I don't know if you know this, but the aplay program that is used inside aplay.sh is
a program that comes with the new Linux sound system ALSA, so it should be available
on most of the newer Linux distributions.

Again, thank you very much for the code, and have a nice day.

Best regards,

Sigurd Solås, Norway.

Talkback:133/cherian.html

[ In reference to "Easy Shell Scripting" in LG#133 ]

Papciak, Gerard (Gerry) [Gerard.Papciak at Encompassins.com]

Fri, 5 Jun 2009 21:37:41 -0500

Hello...

I have a number of files in a Unix directory that need certain words

replaced.

For instance, for every file inside /TEST I need the word 'whs' replaced

with 'whs2'.

I have searched and searched the sed command and Korn shell scripting...no

luck.

Sed 's/whs/whs2/g /TEST*.* > outfile

The above came close but places the contents of all files into one.

Any advice?

--

Gerry Papciak

Information Delivery

[[[Elided content]]]

[ Thread continues here (6 messages/7.95kB) ]
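The command above sends the edited text of every file to a single outfile. To change each file separately, one common approach is to loop over the files and run sed once per file through a temporary copy. The following is a minimal sketch under a few assumptions: replace_in_dir is a made-up name, only regular files directly inside the directory are touched, and the loop is plain POSIX sh so it should also run under ksh. Note that a second run would turn 'whs2' into 'whs22', since 'whs2' still contains 'whs'.

```shell
#!/bin/sh
# Hypothetical helper: replace 'whs' with 'whs2' in every regular file
# of the directory given as the first argument, one file at a time.
replace_in_dir() {
    dir=$1
    for f in "$dir"/*; do
        [ -f "$f" ] || continue          # skip subdirectories and the like
        tmp="$f.tmp.$$"
        if sed 's/whs/whs2/g' "$f" > "$tmp"; then
            # Write back into the original file so that its permissions
            # and ownership stay as they were.
            cat "$tmp" > "$f"
        fi
        rm -f "$tmp"
    done
}

# Usage, with the directory from the question:
#   replace_in_dir /TEST
```

Trying this on a copy of the directory first is a sensible precaution.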

Talkback:141/lazar.html

[ In reference to "Serving Your Home Network on a Silver Platter with Ubuntu" in LG#141 ]

Ben Okopnik [ben at linuxgazette.net]

Thu, 4 Jun 2009 08:08:52 -0500

----- Forwarded message from peter <[email protected]> -----

Date: Fri, 15 May 2009 15:35:22 +0700

From: peter <[email protected]>

Reply-To: [email protected]

To: [email protected]

Subject: Ubuntu Server Setup

In August 2007 ....an age ago, you published an article "Serving Your Home

Network on a Silver Platter with Ubuntu". Quite good really, idiot proof,

more or less. I used the article in the main to set up my first home

server. It has stayed up, only crashes when I do something stupid which is

only once or twice a year.

I was thinking that it is perhaps time to go back to the table and set up the

server again, running 7.10 does sound a bit dated given the noise that

Canonical have been making about how 9.04 is a good option for servers.

Just wondering if you have any plans to update and expand the article?

There really is not much to be found through google. Well actually there is

heaps of it but since it is all written in the words of the mega tech it is of

zero value to people like me. There seem to be a lot of people like me who

run home networks (or really want to) and need some hand holding.

The guide was good to start but it left a lot missing ... things like why you

should partition properly to separate out the /home partition; how to tweak

squid so that it really works well; how to implement dyndns; how to run a

backup / image of the server; how to VPN / VNC..... , how to set up a common

apt update server, useful things like that ......

I know that home servers are not so important in say the States where the

Internet always works and works well. I live in the North West of Thailand.

For entertainment we go and watch the rice grow. Trust me, that really is

fun. The Internet performance sucks badly on a good day and so developing

your own independence is fundamental or at least managing your Internet use.

Squid helps, having your own SMTP is good (since the local ISP is owned by the

government and often has problems).... as you will note there are many topics

that could be covered.

Many writers can only see technology from the perspective of the West. Asia

is a whole different kettle of fish and a huge expanding market.

Do let me know if you have any plans to update this guide ..... even a

comment on the rss feed would be fine ....

Peter

----- End forwarded message -----

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

2-Cent Tips

2-cent Tip: Wrapping a script in a timeout shell

Ben Okopnik [ben at linuxgazette.net]

Thu, 4 Jun 2009 08:22:35 -0500

----- Forwarded message from Allan Peda <[email protected]> -----

From: Allan Peda <[email protected]>

To: [email protected]

Sent: Wednesday, May 20, 2009 11:34:27 AM

Subject: Two Cent tip

I have written previously on other topics for LG, and then IBM, but it's

been a while, and I'd like to first share this without creating a full

article (though I'd consider one).

This is a bit long for a two cent tip, but I wanted to share a solution

I came up with for long running processes that sometimes hang for an

indefinite period of time. The solution I envisioned was to launch the

process with a specified timeout period, so instead of running the

problematic script directly, I would "wrap" it within a timeout shell

function, which is not coincidentally called "timeout". This script

could signal reluctant processes that their time is up, allowing the

calling procedure to catch an OS error, and respond appropriately.

Say the process that sometimes hung was called "long_data_load"; instead

of running it directly from the command line (or a calling script), I

would call it using the function defined below.

The unwrapped program might be:

long_data_load arg_one arg_two .... etc

which, for a timeout limit of 10 minutes, would then become:

timeout 10 long_data_load arg_one arg_two .... etc

So, in the example above, if the script failed to complete within ten

minutes, it would instead be killed (using a hard SIGKILL), and an error

would be returned. I have been using this on a production system for two

months, and it has turned out to be very useful in re-attempting network

intensive procedures that sometimes seem never to complete. Source code

follows:

#!/bin/bash
#
# Allan Peda
# April 17, 2009
#
# function to call a long running script with a
# user set timeout period
# Script must have the executable bit set
#
# Note that "at" rounds down to the nearest minute
# best to use full path
function timeout {
    if [[ ${1//[^[:digit:]]} != ${1} ]]; then
        echo "First argument of this function is timeout in minutes." >&2
        return 1
    fi
    declare -i timeout_minutes=${1:-1}
    shift
    # sanity check, can this be run at all?
    # ($1 is quoted so that a missing command is caught as well)
    if [ ! -x "$1" ]; then
        echo "Error: attempt to locate background executable failed." >&2
        return 2
    fi
    "$@" &
    declare -i bckrnd_pid=$!
    declare -i jobspec=$(echo kill -9 $bckrnd_pid |\
        at now + $timeout_minutes minutes 2>&1 |\
        perl -ne 's/\D+(\d+)\b.+/$1/ and print')
    # echo kill -9 $bckrnd_pid | at now + $timeout_minutes minutes
    # echo "will kill -9 $bckrnd_pid after $timeout_minutes minutes" >&2
    wait $bckrnd_pid
    declare -i rc=$?
    # cleanup unused batch job
    atrm $jobspec
    return $rc
}
# test case:
# ask child to sleep for 163 seconds
# putting process into the background, then reattaching
# but kill it after 2 minutes, unless it returns
# before then
# timeout 2 /bin/sleep 163
# echo "returned $? after $SECONDS seconds."

----- End forwarded message -----

[ ... ]

[ Thread continues here (1 message/3.45kB) ]
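Since "at" works in whole minutes, a variation with a resolution of seconds may sometimes be handy. The sketch below is not Allan's method: it swaps the at job for a background "sleep" watchdog, and the name run_with_timeout is invented here to avoid clashing with the function above. Like the original, it sends a hard SIGKILL and returns the exit status of the wrapped command, which is 137 (128 + 9) if the watchdog had to kill it.

```shell
#!/bin/bash
# Hypothetical variant: run a command, kill it after a number of seconds.
# Usage: run_with_timeout SECONDS command [args...]
run_with_timeout() {
    local secs=$1
    shift
    "$@" &
    local pid=$!
    # Watchdog: sleep, then kill the command if it is still running.
    # Output is discarded so the watchdog never holds the caller's pipes open.
    ( sleep "$secs"; kill -9 "$pid" 2>/dev/null ) >/dev/null 2>&1 &
    local watchdog=$!
    wait "$pid"
    local rc=$?
    # The command has finished (or was killed); retire the watchdog.
    kill "$watchdog" 2>/dev/null
    wait "$watchdog" 2>/dev/null
    return $rc
}

# Example: give a 163-second sleep only 120 seconds to finish.
#   run_with_timeout 120 /bin/sleep 163
#   echo "returned $? after $SECONDS seconds."
```

One trade-off: the watchdog lives inside the calling shell, so it does not survive if that shell exits, whereas an at job is handled by the system's at daemon.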

2-cent Tip - Poor Man's Computer Books

Ben Okopnik [ben at linuxgazette.net]

Thu, 4 Jun 2009 08:10:13 -0500

----- Forwarded message from Paul Sands <[email protected]> -----

Date: Wed, 20 May 2009 14:43:43 +0000 (GMT)

From: Paul Sands <[email protected]>

Subject: 2-cent Tip - Poor Man's Computer Books

To: [email protected]

If, like me, you can't really afford expensive computer books, find a book in

your bookshop with good examples, download the example code and work through

the examples. Use a reference such as the W3C CSS technical recommendation. My

favourite is Sitepoint's CSS anthology

----- End forwarded message -----

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (3 messages/2.59kB) ]

2-cent Tip: Checking the amount of swapped out memory owned by a process

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Sat, 6 Jun 2009 01:15:58 +0700

Hi all

Recent Linux kernel versions allow us to see how much of the memory owned by
a process is swapped out. All you need is the PID of the process; then
grab the output of the related /proc entry:

$ cat /proc/<pid of your process>/smaps | grep Swap

To sum up these per-mapping swap figures, use the awk script below:

$ cat /proc/<pid of your process>/smaps | grep Swap | awk '{ SUM += $2 } END { print SUM }'

The unit is kilobytes.

PS: This is confirmed in Fedora 9 using Linux kernel version

2.6.27.21-78.2.41.fc9.i686.

regards,

Mulyadi.

[ Thread continues here (4 messages/4.29kB) ]
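The same sum can be done by awk alone, without cat and grep. A small sketch follows; the function name swap_kb is made up, and the pattern is anchored to "Swap:" at the start of the line because, as far as I know, newer kernels also print a "SwapPss:" field that a plain "grep Swap" would add in as well.

```shell
#!/bin/bash
# Hypothetical helper: total the "Swap:" lines of an smaps-format file
# and print the result in kilobytes.
swap_kb() {
    # "sum + 0" makes awk print 0 rather than an empty line when no
    # Swap: lines are present.
    awk '/^Swap:/ { sum += $2 } END { print sum + 0 }' "$1"
}

# Usage, for the current shell's own PID:
#   swap_kb "/proc/$$/smaps"
```

Reading another user's smaps file generally needs appropriate permissions.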

2-cent Tip: ext2 fragmentation

Paul Sephton [paul at inet.co.za]

Thu, 04 Jun 2009 01:52:01 +0200

Hi, all

Just thought I'd share this 2c tip with you (now the mailing list is up

- yay!).

I was reading a forum where a bunch of fellows were griping about e2fs

lacking a defragmentation tool. Now, we all know that fragmentation is

generally quite minimal with ext2/ext3, since the file system does some

fancy stuff deciding where to write new files. The problem though, is

when a file grows over time, it is quite likely going to fragment,

particularly if the file system is already quite full.

There was a whole lot of griping, and lots of "hey you don't need

defragging, it's ext3 and looks after itself, wait for ext4", etc. Not

a lot of happy campers.

Of course, Ted Ts'o opened the can of worms by writing 'filefrag', which

now lets people actually see the amount of fragmentation. If not for

this, probably no-one would have been complaining in the first place!

I decided to test a little theory, based on the fact that when the file

system writes a new file for which it already knows the size, it will do

its utmost to make the new file contiguous. This gives us a way of

defragging files in a directory like so:

#!/bin/sh
# Retrieve a list of fragmented files, as #fragments:filename
flist() {
    for i in *; do
        if [ -f "$i" ]; then
            ff=`filefrag "$i"`
            fn=`echo "$ff" | cut -f1 -d':'`
            fs=`echo "$ff" | cut -f2 -d':' | cut -f2 -d' '`
            if [ -f "$fn" -a "$fs" -gt 1 ]; then echo "$fs:$fn"; fi
        fi
    done
}
# Sort the list numeric, descending
flist | sort -n -r |
(
    # for each file
    while read -r line; do
        fs=`echo "$line" | cut -f 1 -d':'`
        fn=`echo "$line" | cut -f 2 -d':'`
        # copy the file up to 10 times, preserving permissions
        j=0
        while [ -f "$fn" -a $j -lt 10 ]; do
            j=$((j + 1))
            TMP=$$.tmp.$j
            if ! cp -p "$fn" "$TMP"; then
                echo "copy failed [$fn]"
                j=10
            else
                # test the new temp file's fragmentation, and if less than the
                # original, move the temp file over the original
                ns=`filefrag "$TMP" | cut -f2 -d':' | cut -f2 -d' '`
                if [ "$ns" -lt "$fs" ]; then
                    mv "$TMP" "$fn"
                    fs=$ns
                    if [ "$ns" -lt 2 ]; then j=10; fi
                fi
            fi
        done
        # clean up temporary files; a successful mv leaves gaps in the
        # numbering, so check all ten names instead of stopping early
        j=0
        while [ $j -lt 10 ]; do
            j=$((j + 1))
            rm -f "$$.tmp.$j"
        done
    done
)
# report fragmentation
for i in *; do if [ -f "$i" ]; then filefrag "$i"; fi; done

Basically, it uses the 'filefrag' utility and 'sort' to determine which

files are fragmented the most. Then, starting with the most fragmented

file, it copies that file up to 10 times. If the copied file is less

fragmented than the original, the copy gets moved over the original.

Given ext2's continuous attempt to create new files as unfragmented,

there's a good chance with this process, that you end up with a

directory of completely defragmented files.

[ ... ]

[ Thread continues here (1 message/5.63kB) ]

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to [email protected].

News in General

Power IT Down Day - August 27, 2009

Intel, HP, Citrix and others will join together in encouraging

government and industry to reduce energy consumption on the second annual

Power IT Down Day, scheduled for August 27. The IT companies will promote

Power IT Down Day through a series of activities and educational events. A

schedule of those events, along with additional information about Power IT

Down Day and green IT, can be found at http://www.hp.com/go/poweritdown.

"We can't just sit back and leave it to the next person in line to fix

the planet," said Nigel Ballard, federal marketing manager for Intel. "By

turning off our own computers each and every night, we make a positive

environmental impact, and we proved that last year. At the end of the day,

we're in this together so let's power IT down on August 27 and be part of

the solution."

Ubuntu Remix to power Intel Classmate Netbooks

Canonical reached an agreement with Intel in June to deliver Ubuntu Netbook

Remix [UNR] as an operating system for Intel Classmate PCs.

The new Classmate is a netbook specifically designed for the education

market. It features a larger screen, more memory and larger SSD or HDD than

the original Classmate PC. It will also feature a modified version of

Ubuntu Netbook Remix for the first time, improving the experience on

smaller screens. The Intel-powered convertible Classmate PC features a

touch screen, converts from a clamshell to a tablet PC and auto-adjusts

between landscape and portrait depending on how the machine is held. Ubuntu

UNR will support all these use cases.

Ubuntu Netbook Remix is designed specifically for smaller screens and to

integrate with touch screen technologies. It has proven to be popular with

consumers on other devices. Ubuntu UNR makes it easy for novice computer

users to launch applications and to access the Internet.

Citrix Contributes Code for Virtual Network Switch

Citrix made several key virtualization announcements at its annual

Synergy Conference in Las Vegas last May. Key among these was the release

of its virtual network switch code to open source, as a way of extending

its low-cost XenServer infrastructure.

Along with the code donation, Citrix also announced version 5.5 of

XenServer, its free hypervisor. Citrix also revised its virtual desktop

and virtual application products and released a new version of its bundled

virtualization infrastructure product, Xen Essentials. Finally, Citrix is

also offering virtual versions of its NetScaler web accelerator product,

the VPX virtual appliance for X86 hardware, allowing lower entry costs for

single and clustered NetScalers. These are described in the product

section, below.

The virtual switch code reportedly will run on both the Xen and KVM

hypervisors. This code can be used by 3rd party network vendors to develop

virtual switches for XenServer that can compete with the Cisco Nexus 1000V

virtual switch for VMware vSphere 4.0.

A virtual switch would be a good way to get more detailed insight into

virtual network traffic or to partition VM traffic, and it helps complete

the needed virtual infrastructure.

New Community Website for Growing XenServer Customer Base

In addition to general availability of the 5.5 product releases, Citrix

announced in June the XenServer Central community website, a new resource

to help users with their use of the free virtualization platform. The

XenServer Central website will feature the latest product information, tips

and tricks, access to the Citrix KnowledgeCenter and web-based support

forums. Twitter and blog postings will also be integrated.

Offers from Citrix partners for free or discounted licenses for

complementary products will also be featured, as well as the latest

XenServer content on CitrixTV. XenServer Central went live on June 19 at

http://www.citrix.com/xenservercentral.

Dell and Goodwill Expand Free Computer Recycling Program

Dell and Goodwill Industries International are expanding Reconnect, a

free drop-off program for consumers who want to responsibly recycle any

brand of unwanted computer equipment, to 451 new donation sites in seven

additional states - Colorado, Illinois, Indiana, Missouri, New Mexico,

Oklahoma, and West Virginia. They are also expanding the program in

Wisconsin. Consumers can now drop off computers at more than 1,400 Goodwill

Locations in 18 states, plus the District of Columbia.

Goodwill, which is focused on creating job opportunities for individuals

with disabilities or others having a hard time finding employment, plans to

hire additional staff to oversee the expanded recycling program.

Reconnect offers consumers a free, convenient and responsible way to

recycle used computer equipment. Consumers can drop off any brand of used

equipment at participating Goodwill donation centers in their area and

request a donation receipt for tax purposes. For a list of participating

Goodwill locations across the U.S., visit http://www.reconnectpartnership.com.

Conferences and Events

- Cisco Live/Networkers 2009
  June 28 - July 2, San Francisco, CA
  http://www.cisco-live.com/

- Kernel Conference Australia
  July 15 - 17, Brisbane
  http://au.sun.com/sunnews/events/2009/kernel/

- OSCON 2009
  July 20 - 24, San Jose, CA
  http://en.oreilly.com/oscon2009

- BRIFORUM 2009
  July 21 - 23, Hilton, Chicago, IL
  http://go.techtarget.com/

- Open Source Cloud Computing Forum
  July 22, online
  http://www.redhat.com/cloud-forum/attend

- Black Hat USA 2009
  July 25 - 30, Caesars Palace, Las Vegas, NV
  https://www.blackhat.com/html/bh-usa-09/bh-us-09-main.html

- OpenSource World [formerly LinuxWorld]
  August 10 - 13, San Francisco, CA
  http://www.opensourceworld.com/

- USENIX Security Symposium
  August 10 - 14, Montreal, QC, Canada

  Join us at the 18th USENIX Security Symposium, August 10 - 14, 2009, in
  Montreal, Canada.

  USENIX Security '09 will help you stay ahead of the game by offering
  innovative research in a 5-day program that includes in-depth tutorials by
  experts such as Patrick McDaniel, Frank Adelstein, and Phil Cox; a
  comprehensive technical program, including a keynote address by Rich
  Cannings and David Bort of the Google Android Project; invited talks,
  including the "Top Ten Web Hacking Techniques of 2008: 'What's possible,
  not probable,' " by Jeremiah Grossman, WhiteHat Security; a refereed papers
  track, including 26 papers presenting the best new research;
  Work-in-Progress reports; and a Poster session. Learn the latest in
  security research, including memory safety, RFID, network security, attacks
  on privacy, and more.

  http://www.usenix.org/sec09/lga

  Register by July 20 and save! Additional discounts available!

- PLUG HackFest: Puppet Theatre

August 15, Foundation for Blind Children - 1224 E. Northern, Phoenix, AZ

August's Phoenix Linux User Group HackFest Lab will center around Puppet.

We will have a one-ish hour of presentation and a Puppet test lab

including a fully fleshed-out, securely set-up system. We will take

some fest time to show how easy it is to pull strings, maintain

configurations and standards in any network comprising two or more

systems. We might even turn the tables and attempt "evil" puppetry.

Please see the LinuxGazette's July "Linux Layer 8 Security" article

for the lab presentation materials. Bring your recipes to wow us,

since all PLUG events are full duplex, allowing for individual

expansion and enrichment as we follow our critical thought, rarely

constrained by anything but time. "Don't learn to hack; hack to

learn".

Everything starts at around 10:00 and should break down around 13:00!

Arizona PLUG

- VMworld 2009

August 31 - September 3, San Francisco, CA

www.VMworld.com.

- Digital ID World 2009

September 14 - 16, Rio Hotel, Las Vegas, NV

www.digitalidworld.com.

- SecureComm 2009

September 14 - 18, Athens, Greece

http://www.securecomm.org/index.shtml.

- 1st Annual LinuxCon

September 21 - 23, Portland, OR

http://events.linuxfoundation.org/events/linux-con/.

- SOURCE Barcelona 2009

September 21 - 22, Museu Nacional D'art de Catalunya, Barcelona, Spain

http://www.sourceconference.com/index.php/source-barcelona-2009.

- 2nd Annual Linux Plumbers Conference

September 23 - 25, Portland, OR

http://linuxplumbersconf.org/2009/.

- European Semantic Technology Conference

September 30 - October 2, Vienna, Austria

http://www.estc2009.com/.

Distro News

New Features listed for Forthcoming Ubuntu 9.10

The next version of the popular Ubuntu Linux operating system is already taking shape

and will have among its planned enhancements:

- Faster boot times. The target is a boot in under 10 seconds;

- Better audio and video support, including PulseAudio;

- New NVIDIA drivers add VDPAU and CUDA support for better quality and speed;

- New kernel: Linux Kernel 2.6.31 will be used, which includes support for kernel-mode

configuration of memory and graphics;

- Gnome 2.28, with preview testing of Gnome 3;

- Ext4 support will be the default for new installs of 9.10;

- Grub 2.0.

Here is a link to a YouTube demo of the Ubuntu 9.10 Alpha [running in a Parallels VM]:

http://www.youtube.com/watch?v=urITb_SBLjI

A naming contest for Ubuntu 10.04 and the South African Linux community

A fun little competition may interest the South African Linux

community. It surrounds the codename of Ubuntu 10.04. There are some great

prizes to be won. Details can be found at:

http://www.bravium.co.za/win

Fedora 11: Many Virtualization Enhancements

The Fedora project released the next version of the popular Linux

operating system in June. The new Fedora 11 release, known as "Leonidas",

showcases recent enhancements to virtualization technology including

management, performance and security.

Among the new virtualization features in Fedora 11, one is a redesign

of 'virt-manager', an end-to-end desktop UI for managing virtual

machines. The 'virt-manager' feature manages virtual machines no

matter what type of virtualization technology they are using. New

features within "virt-manager" include:

- a new VM creation wizard;

- improved support for host-to-host migration of VMs;

- an interface for physical device assignment for existing VMs,

easing allocation of physical resources tied to VMs;

- an upgraded statistics display that shows fine grained disk and

network I/O stats;

- improved VNC authentication to connect to VMs, which allows clients

to securely connect to remote VMs.

Learn more about this new tool at http://virt-manager.et.redhat.com/.

Also in Fedora's latest release is a sneak peek at some functional

improvements to the Kernel-based Virtual Machine (KVM) that can set the

performance bar higher for virtualized environments.

And the new integration of SELinux with the virtualization stack

via sVirt provides enhanced security. Virtual machines now run

effectively isolated from the host and one another, making it harder

for security flaws to be exploited in the hypervisor by malicious guests.

Fedora 11 includes the MinGW Windows cross compiler, which allows Linux-based

developers to cross-compile software for a Windows target. Other new features

in Fedora 11 include better support for fingerprint readers and the inclusion

of the ext4 file system.

To check out KVM, sVirt, Virt Manager and other new technologies included in

Fedora 11, download Fedora 11 at http://get.fedoraproject.org.

The Fedora Project showed its release schedule for Fedora 12, which does not

show the customary public alpha release. This will leave testers just two

public development builds to try. The first beta is planned for late August,

while the second one is expected in early October. The final release of Fedora

12 is currently scheduled for November 3rd, 2009. For further information,

check the Fedora 12 release schedule at:

http://fedoraproject.org/wiki/Releases/12/Schedule.

Software and Product News

Opera 10 beta: new features, new speed

Opera has released the first beta of Opera 10, which sports new features, a new look and

feel, and enhanced speed and performance. Opera 10 is completely free for Linux, Mac and Windows

users from http://www.opera.com/next/.

Using state-of-the-art compression technology, Opera Turbo delivers 3x to 4x the speed on

slower connections and can offer broadband-like speeds on dial-up. Opera 10 is also much

faster on resource intensive pages such as Gmail and Facebook and is more than 40% faster

than Opera 9.6.

Web developers can enjoy Web Fonts support, RGBA/HSLA color and new SVG improvements along

with the new features in Opera Dragonfly, Opera's on-board Web development tools. Opera

Dragonfly alpha 3 now allows editing of the DOM and inspection of HTTP headers, and is

available in more than 36 languages.

Another updated feature is the tab bar, which has been redesigned and is now

resizable, but with a twist. Pull down the tab bar (or double-click the handle) to

reveal full thumbnails of all open tabs.

Oracle 11g Sets New Price/Performance Record with TPC-C Benchmark

Oracle and HP have set a new world record for Oracle Database 11g Standard Edition One

running on Oracle Enterprise Linux. With this result, Oracle now holds the top five

record benchmark positions in the Top Ten TPC-C price/performance category.

The winning HW/SW combination - including Oracle Database 11g, Oracle Enterprise Linux

and the HP ProLiant ML350 G6 Server - was posted in late May.

The result was 232,002 transactions per minute with a price/performance of $0.54 USD/tpmC,

using Oracle Database 11g Standard Edition One with Oracle Enterprise Linux running on an

HP ProLiant ML350 G6 server with a single-socket Intel Xeon E5520 quad-core processor and

HP Smart Array P411 controller. This package delivered the best price-per-transaction-per-minute

ever achieved with the TPC-C benchmark, in addition to delivering the fastest result for a

single-socket system.

TPC-C is an OLTP (online transaction processing) benchmark developed by the Transaction

Processing Performance Council (TPC). The TPC-C benchmark defines a rigorous standard

for calculating performance and price/performance measured by transactions per minute

(tpmC) and $/tpmC, respectively. More information is available at http://www.tpc.org/.
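The $/tpmC figure is simply the total priced cost of the benchmarked system divided by its tpmC throughput. As a rough illustration, the two shell functions below (the names are invented here) convert between the two. Working backwards from the rounded figures quoted above gives an implied system price of roughly $125,000; that is an estimate derived from those figures, not a number published by Oracle or HP.

```shell
# price_per_tpmc TOTAL_COST TPMC
# Prints dollars per tpmC, rounded to two decimals.
price_per_tpmc() {
    awk -v cost="$1" -v tpmc="$2" 'BEGIN { printf "%.2f\n", cost / tpmc }'
}

# implied_cost PRICE_PER_TPMC TPMC
# Prints the approximate total system cost implied by a $/tpmC figure.
implied_cost() {
    awk -v price="$1" -v tpmc="$2" 'BEGIN { printf "%.0f\n", price * tpmc }'
}
```

For example, `implied_cost 0.54 232002` multiplies the two published figures, and `price_per_tpmc` run on that result and the same throughput gives back 0.54.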

To see the Top Ten TPC-C benchmarks by Price, go here:

http://www.tpc.org/tpcc/results/tpcc_price_perf_results.asp

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Layer 8 Linux Security: OPSEC for Linux Common Users, Developers and Systems Administrators

By Lisa Kachold

As users of Linux each of us is in a unique position with a powerful tool.

Use of any tool without regard for security is dangerous. Developers

likewise carry a great responsibility to the community to maintain systems

in a secure way. Systems Administrators are often placed in the

uncomfortable role of holding a bastion between insecurity or pwnership and

uptime.

Let's evaluate just one standard security methodology against our use of

Linux as a tool: OPSEC.

Operations security (OPSEC) is a process that identifies critical information

to determine if friendly actions can be observed by adversary intelligence

systems, determines if information obtained by adversaries could be

interpreted to be useful to them, and then executes selected measures that

eliminate or reduce adversary exploitation of friendly critical information.

"If I am able to determine the enemy's dispositions while at the same time

I conceal my own, then I can concentrate and he must divide." - Sun Tzu

While we might not realize it, because we are firmly rooted

intellectually in the "Linux security matrix", a great many

international, national and local "enemies" exist who are happily

exploiting the Linux TCP/IP stack while laughing maniacally. If you

don't believe me, on what basis do you argue your case? Have you ever

tested or applied OPSEC Assessment methodologies to your (select one):

a) Laptop SSH

b) Application Code or db2/mysql/JDBC

c) Server (SMTP, DNS, WEB, LDAP, SSH, VPN)

OPSEC as a methodology was developed during the Vietnam War, when Admiral

Ulysses Sharp, Commander-in-chief, Pacific, established the "Purple Dragon"

team after realizing that current counterintelligence and security measures

alone were insufficient. They conceived of and utilized the methodology of

"Thinking like the wolf", or looking at your own organization from an

adversarial viewpoint. When developing and recommending corrective actions

to their command, they then coined the term "Operations Security".

OPSEC is also a very good critical assessment skill to teach those who are

learning to trust appropriately and live in this big "dog eat dog" world.

A psychologist once suggested to me that "thinking of all the things you

could do (but wouldn't)" was a technique invaluable to understanding human

nature, personal motivations and chaos/order in natural systems. Linux

people tend to be interested in powerful computing, glazed eye techno-sheik,

and s-hexy solutions that just work; they are also generally extremely

ethical and take reasonable responsibility for computer security, once they

know where to start.

Now, we all know that computer security is a layered process, wherein we, as

users, developers and administrators form one of the layers.

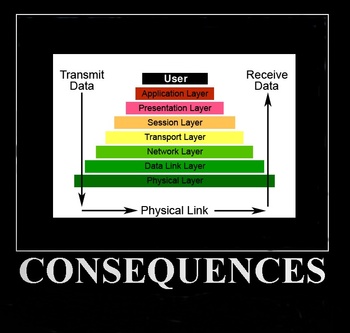

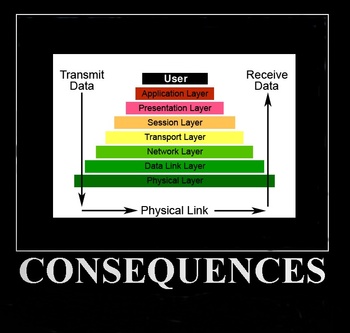

The OSI model is a 7-layer abstract model that describes an architecture of

data communications for networked computers. The layers build upon each other,

allowing for abstraction of specific functions in each one. The top (7th)

layer is the Application Layer describing methods and protocols of software

applications.

Layer 8 is Internet jargon used to refer to the "user" or "political"

layer as an extension of the OSI model of computer networking.

Since the OSI layer numbers are commonly used to discuss networking topics,

a troubleshooter may describe an issue caused by a user to be a layer 8 issue,

similar to the PEBKAC acronym and the ID-Ten-T Error.

We can see that SSH keys (or a lack of them) alone are not a big

security issue. However, add root users, fully routable Internet

addressing (rather than NAT), no iptables or other firewall, no

password management or security policy, and a curious, Ettercap-armed,

angry, underpaid user with antisocial personality disorder, and, well, there

might be a problem.

The OPSEC Assessment Steps are:

1. Identify critical information

Identify information critically important to the organization, mission,

project or home [intellectual property, mission details, plans, R&D,

capabilities, degradations, key personnel deployment data, medical records,

contracts, network schematics, etc.]

But "Wait", you say, "I am just a kid with a laptop computer!" Do you frequent

the coffee shop? Do you use shared networking? Do you allow others to watch

you log in to your bank account from over your shoulder? If so, OPSEC is

certainly for you.

While this step is a great deal more dire to a systems administrator,

who clearly knows how easy it is to crash a system and lose all of the

work from 30 or more professionals with a single command, or one who

realizes that pwnership means never being able to define stable

uptime, each and every computer user knows what it's like not to be

able to depend on any data stability. Security = stability in every

venue.

2. Identify potential adversaries

Identify the relevant adversaries, competitors or criminals with both

intent and capability to acquire your critical information.

If you have not taken a moment to look at your logs to see all the

attempts to gain access via SSH or ftp, or sat seriously

in a coffee shop evaluating wireless traffic or watched tcpdump to see

what is occurring on a University network, this might be the time to

start. It's not just people from China and Russia (scripts including

netcat, nmap, and MetaSploit can be trivially configured to spoof

these addresses). Wake up and look around at conferences and ask

yourself seriously, who is a competitor, who is a criminal. This is a

required step in OPSEC. In the 1990s in the Pacific Northwest,

adversarial contract Linux Systems Administrators regularly attacked each

other's web servers.
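A first look at your logs can be as simple as counting failed SSH password attempts per source address. The function below is a minimal sketch, assuming OpenSSH's usual "Failed password ... from ADDRESS" log lines. The function name is made up for illustration, and the log path varies by distribution (/var/log/auth.log on Debian-style systems, /var/log/secure on Red Hat-style ones). As noted above, the addresses you see may not be where an attack really originates, so treat the counts as a rough picture rather than attribution.

```shell
# failed_ssh_by_source LOGFILE
# Prints "COUNT ADDRESS" for each source of failed SSH password logins,
# busiest source first.
failed_ssh_by_source() {
    # Keep only sshd "Failed password" lines, print the word that follows
    # "from" (the source address), then count repeats per address.
    grep 'sshd.*Failed password' "$1" |
        awk '{ for (i = 1; i < NF; i++) if ($i == "from") { print $(i + 1); break } }' |
        sort | uniq -c | sort -rn |
        awk '{ print $1, $2 }'
}
```

Run it as `failed_ssh_by_source /var/log/auth.log` (reading the log usually requires root or membership in an administrative group).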

But, you say, "Why would they want to get into my little laptop?" You have

24x7 uptime, correct? Your system can be configured, via Anacron, as part of a vague,

untraceable botnet and you would never even know it. That botnet can hog

your bandwidth and steal your processing power and eventually be used to

take down servers.

3. Identify potential vulnerabilities

From the adversary's, competitor's, or thief's perspective, identify

potential vulnerabilities and means to gain access to the results of step 1.

Interview a representative sample of individuals.

If you have not googled to ensure that the version of Firefox you are running

is secure from known exploits, you have not completed this step. If you don't

know the current vulnerabilities of the version of OpenSSH and Apache or Java

or the myriad of other binaries installed with your Ubuntu or Fedora,

you have no basis for using Linux technology wisely.
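One way to make this check routine is to compare the version you have installed against the first fixed version named in an advisory. The function below is a small sketch, assuming GNU sort (for its -V version-ordering option). The function name is hypothetical, and any version numbers you feed it should come from the vendor's advisory, not from this example.

```shell
# version_at_least INSTALLED MINIMUM
# Succeeds (exit status 0) if INSTALLED is the same as or newer than MINIMUM.
version_at_least() {
    # sort -V orders version strings component by component; if MINIMUM
    # comes out first (or both are equal), INSTALLED is new enough.
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

A call such as `version_at_least 3.0.9 3.0.11 || echo "upgrade needed"` prints the warning, because 3.0.9 sorts before 3.0.11 even though a plain string comparison would say otherwise.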

While I don't recommend that everyone attend DefCon, reading their

public website might be sufficient to impress you with the importance

of OPSEC. Better yet, burn yourself a BackTrack4 LiveCD and run some

of the tools against your own systems.

There are two basic ways to get into Linux using the OSI stack models:

"top down" or "bottom up".

From: OSI Model

Layer:

- 1. Physical Layer

- 2. Data Link Layer

  - WAN Protocol architecture

  - IEEE 802 LAN architecture

  - IEEE 802.11 architecture

- 3. Network Layer

- 4. Transport Layer

- 5. Session Layer

- 6. Presentation Layer

- 7. Application Layer

- 8. Human Layer

Most issues are due to Layer 8!

4. Assess risk

Assess the risk of each vulnerability by its respective impact to mission

accomplishment / performance if obtained.

After testing your SSH via an outside network or scanning your J2EE

application cluster from a Trial IBM WatchFire AppScan key, or fuzzing your

Apache 1.33/LDAP from the shared (no VLAN) public network or accessing/cracking

your own WEP key in five minutes, you will clearly see that you essentially own

nothing, can verify no stability, and as soon as your systems are encroached

all bets are off.

This is, for instance, the point where the iPhone/Blackberry user/server

Administrator realizes that his phone is perhaps doing more than he planned

and wonders at the unchecked, unscanned pdf attachment inclusion policy set

in Layer 8/9, but without OPSEC, this point always occurs too late. Phones

potentially integrate with everything, have IP addresses and are generally

ignored!

Again, leaving out printers, for instance, is folly, since many HP printers

and the IPP protocol could be trivially encroached or spoofed via something

unleashed from an innocuous attachment.

This step must be all-inclusive, including every adjacent technology that

each system interfaces with.

5. Define counter measures

Generate / recommend specific measures that counter identified

vulnerabilities. Prioritize and enact relevant protection measures.

Now before you all go kicking and screaming, running from this process

due to the pain of "limitations" on your computing freedom, be assured

that there are "solutions".

6. Evaluate Effectiveness

Evaluate and measure effectiveness, adjust accordingly.

These solutions can be as simple as a wrapper for SSH, upgrading to OpenVPN

(which is really trivial to implement), using NoScript on a browser, or never

using a browser as root on a production server. They can include a

server-based iptables ruleset implemented once, or be as all-inclusive as a Layer 7 application

switch (which can be cheaper in a "house of cards" development shop than a

complete code review (for PCI compliance) or rewrite). For Tomcat fuzzing, for

instance, an inline IDS or mod_security or mod_proxy architectural change will

save months of DoS.
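To give an idea of how small a "server-based iptables implemented once" can be, here is a hedged sketch that prints a default-deny inbound ruleset in the format read by iptables-restore. The function name and the choice of open ports are assumptions for illustration; the rules themselves use only standard iptables matches. It only prints text, so you can review the result before loading anything.

```shell
# basic_ruleset [PORT...]
# Prints an iptables-restore ruleset that drops inbound traffic by default,
# allows loopback and replies to outbound connections, and opens the
# listed TCP ports.
basic_ruleset() {
    printf '%s\n' \
        '*filter' \
        ':INPUT DROP [0:0]' \
        ':FORWARD DROP [0:0]' \
        ':OUTPUT ACCEPT [0:0]' \
        '-A INPUT -i lo -j ACCEPT' \
        '-A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT'
    for port in "$@"; do
        printf '%s\n' "-A INPUT -p tcp --dport $port -j ACCEPT"
    done
    printf '%s\n' 'COMMIT'
}
```

For example, `basic_ruleset 22 > rules.txt` writes a ruleset that leaves only SSH reachable; after reading it over, root can load it with `iptables-restore < rules.txt`. If you are connected over SSH while doing this, make sure port 22 is in the list first.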

If you must engage in social networking or surfing to warez sites accepting

javascript, doing so from a designated semi insecure system might be a good

measure.

Thinking outside of the box at layered solutions is key, and at the

very least, immediately stop the Layer 8 behavior

identified as dangerous. Turn off SSH at the coffee shop; you aren't

using it anyway. In fact, turn off Bluetooth as well, which is

probably still on from your build?
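Before deciding what to turn off, it helps to see what is actually listening. The filter below is a sketch that reads the output of `ss -tln` (or the older `netstat -tln`) and prints only the TCP listeners that are reachable from the network, that is, not bound to loopback alone. The function name is invented here, and it relies on the local address being the fourth column, which holds for the usual output of both tools.

```shell
# exposed_listeners
# Reads `ss -tln` or `netstat -tln` output on standard input and prints the
# local address:port of each TCP listener not bound to loopback only.
exposed_listeners() {
    awk '/LISTEN/ {
        addr = $4                                        # local address column
        if (addr ~ /^127\./) next                        # IPv4 loopback
        if (addr ~ /^\[::1\]:/ || addr ~ /^::1:/) next   # IPv6 loopback
        print addr
    }'
}
```

Typical use is `ss -tln | exposed_listeners`. Anything it prints, such as 0.0.0.0:22, is a service the rest of the coffee shop can try to talk to.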

A great deal of data will be uncovered through this investigation, so an

organized documented approach will allow you to sort it out. You will

find that it might be simpler to replace or upgrade, rather than

attempt to protect. Based on the past 10+ years of Linux history, it's not

unrealistic to expect to have to upgrade at least every four years, even

assuming that you are applying standard patches.

If (when) you identify dangerous Layer 9 (management) policies, document and

escalate, so that your responsibility as an ethical technology user has been

passed along or up. Apathy and attitudes such as "security reporting is

unpopular" or "everyone ignored it this long, if I say something they will

think I am silly" are destructive to all. Use references if you must;

security is everyone's responsibility.

The single most dangerous Layer 8/9 policy is one of computer security

compartmentalization. Prime examples of compartmentalization include:

- Ubuntu is a secure distro right out of the box

- Linux is more secure than Windows, so I don't have to worry

- Our security guy is Ted; it's not my job

- Our firewall department watches all security issues; it's not a webmaster

function

While in a healthy corporate environment responsibility travels up,

in a healthy open source environment responsibility is an organized process;

and everywhere security cannot be ignored.

Zero vulnerabilities are completely unrealistic. If

you are working in a shop that functions as if there is complete

systems security without OPSEC or catching yourself thinking that

there are no security risks, this is a serious red flag. 100%

awareness is the only realistic approach. As a general rule, those

aware of what to protect have a better chance of protecting sensitive

information as opposed to those unaware of its value.

Obtain threat data from the experts; don't try to perform all

the analysis on your own.

This can be as simple as getting a list of the data from CERT related to your

technologies, be it Cisco IOS for your Pix or simply OpenWRT.

And much as you are not going to want to believe it, usually there will still

be 8% of computer security exploit experts who can, after you have mitigated

all known risks, still get into your systems. Factor this truth into your

analysis.

Focus on what can be protected versus what has been revealed.

For instance, you might realize, after playing with BackTrack3 SMB4K that all

this time your SAMBA was allowing Wireless file sharing to Windows neighbors

and your private information, including your personal photos, was available to

all. Simply close the door. Paranoia in security is optional and certainly

not recommended.

Observations, findings and proposed counter measures are best

formatted in a plan of action with milestones to mitigate

vulnerabilities. In a production environment, this plan would be

forwarded in a complete brief to decision makers, adjacent team users

and anyone with a stake in uptime.

Integrate OPSEC into planning and decision processes from the

beginning. Waiting until the last minute before taking a product to

market (or from market) to conduct an assessment may be too late and

costly.

"What QA department?" you might ask. "We are the QA department and we don't

have time to scan". It's simple to run a Wikto/Nikto or evaluation scanner

against your application.

Put up a nice PHP/MySQL CMS or another sharing portal? Using SVN? You might

have just opened a nice encrypted tunnel directly into your systems. If you

don't test it, you won't know! Did you evaluate that SugarCRM version before

building? Did you look into the known exploits of that open source tool before

adopting it?

Regular assessments ensure your best protection.

Like disaster recovery, systems OPSEC Assessments are built upon. Revealed

information is retained as a resource; regularly scheduled assessments

continue as a group team coordinated event.

Systems security is not a secret; OPSEC reminds us that all systems are only

as sick as their secrets.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/kachold.jpg)

Lisa Kachold is a Linux Security/Systems Administrator, Webmistress,

inactive CCNA, and Code Monkey with over 20 years Unix/Linux production

experience. Lisa is a past teacher from FreeGeek.org, a presenter at

DesertCodeCamp, Wikipedia user and avid LinuxChix member. She organized

and promotes Linux Security education through the Phoenix Linux Users

Group HackFEST Series labs, held on the second Saturday of every month at The

Foundation for Blind Children in Phoenix, Arizona. Obnosis.com, a play

on words coined by L. Ron Hubbard, was registered in the 1990s as a "word

hack" from the Church of Scientology, after 6 solid years of UseNet news

administration. Her biggest claim to fame is sitting in Linus Torvalds'

chair during an interview with OSDL.org in Oregon in 2002.

Copyright © 2009, Lisa Kachold. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 164 of Linux Gazette, July 2009

Building the GNOME Desktop from Source

By Oscar Laycock

First, I must say that the GNOME

documentation "strongly recommends" against building from

source files. If you do, it suggests using the GARNOME scripted

system. Even GARNOME, it says, is only for the "brave and

patient".

I started building programs from source as I wanted to upgrade a

very old distribution and did not have enough disk space to install a

Fedora or Ubuntu. The memory on my old PC is probably a bit low too,

at 128 Meg. Also, it is fun to run the latest versions, be on the

leading edge, and get ahead of the major distributions. You could not

run Windows Vista (tm) on my old PC!

I took the files from the latest GNOME release I could find, being

version 2.25. This was probably a mistake. I later found out that odd

numbered minor releases like 25 are development releases and may be

unstable. The stable 2.26 series should have come out in March. In

fact, when I built the file manager "Nautilus", I could not

find a dependency, "libunique", so I went back to version

2.24. The GNOME sources are at

http://ftp.gnome.org/pub/gnome.

I used the Beyond Linux from Scratch (BLFS) guide to know where to

find packages and in what order to build them. You can find it at

http://www.linuxfromscratch.org

(LFS). Unfortunately the BLFS guide uses versions from the summer of

2007, GNOME version 2.18. Also, I installed the libraries and

programs in the default location /usr/local, but looking back it

would have been better to have put them in their own directory and

given a --prefix option to "configure", as the BLFS project

recommends.
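Building into a private prefix means the shell, pkg-config and the dynamic linker all have to be told where to look afterwards. The helper below is a sketch of that step. The function name and the example prefix /opt/gnome-2.24 are my own illustrations, not something taken from the BLFS guide. It prints the export lines instead of setting anything, so you can read them first.

```shell
# prefix_env PREFIX
# Prints the export lines needed to build against, and run programs from,
# a private install prefix.
prefix_env() {
    prefix=$1
    # ${VAR:+:$VAR} appends the old value only if one was set, which avoids
    # a trailing colon (an empty entry would mean "the current directory").
    printf '%s\n' \
        "export PATH=$prefix/bin:\$PATH" \
        "export PKG_CONFIG_PATH=$prefix/lib/pkgconfig\${PKG_CONFIG_PATH:+:\$PKG_CONFIG_PATH}" \
        "export LD_LIBRARY_PATH=$prefix/lib\${LD_LIBRARY_PATH:+:\$LD_LIBRARY_PATH}"
}
```

Each package would then be configured with `./configure --prefix=/opt/gnome-2.24`, and `eval "$(prefix_env /opt/gnome-2.24)"` in the build shell lets later packages find the earlier ones.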

It took me about a month to build a basic GNOME desktop in my

spare time. Disappointingly, you don't see anything until the last

piece, "gnome-session", is in place.

When I ran my first applications like the image-viewer

"eye-of-gnome", some appeared on the desktop and then

core-dumped. The core file said the last function called was in GTK,

in the libgtk-x11 library. I had had to upgrade my GTK when building

gnome-panel. I had chosen the latest version 2.15.2. This is probably

a development release too. When I switched back to my year-old GTK,

everything was fine. I finally settled for the stable version

recommended by GARNOME. It took me a few days to sort this out!

There is a free book on programming GNOME, "GTK+ / Gnome

Application Development" by Havoc Pennington available at

http://developer.gnome.org/doc/GGAD.

This is somewhat old.

Overall, I like GNOME because it is truly free and lighter than

KDE.

GNOME versus KDE

Last year (2008), I built the 4.0 release of KDE

from the source files. GNOME is made up of around sixty packages, KDE

about twenty. Qt (the user

interface framework) and KDE are much larger than GNOME. The

compressed source of Qt is about three times the size of the

equivalent GTK+, GLib, Cairo and Pango put together. But, of course,

KDE includes the web browser Konqueror. GNOME needs the Gecko layout

engine from Mozilla.org that you see in Firefox.

Qt and KDE's C++ code is slower to compile than GNOME's C, at

least on my old laptop - a Pentium III with only 128 Meg RAM! KDE

uses CMake rather than

"configure" to generate the makefiles. It took a while to

learn how to use CMake, but its files are much clearer.

The BLFS instructions only covered KDE 3.5, so I had to adjust

things for KDE 4.0. In GNOME, if you wanted to find out where to find

some unusual packages, you could look at the makefiles of the GARNOME

project. Its website is at

http://projects.gnome.org/garnome.

GARNOME automatically fetches and builds a given GNOME release.

For KDE, I had to change a little code when building the

"strigi"

library. (It searches through files for the semantic desktop). To be

exact, I had to add definitions for "__ctype_b" and

"wstring". For GNOME, it was easier. I mostly had to go off

and find required libraries that were not in the BLFS notes.

KDE has trouble starting up on my laptop, although it is all right

on my PC. There is a lot of swapping in and out and thrashing with my

low memory. In fact, both computers have 128 Megabytes. The laptop

even has a faster processor. It has a Pentium III processor, whereas

the PC has a Pentium II. The disks even seem about the same

speed in simple tests. It is a puzzle. Anyway, KDE takes over ten

minutes to start on my laptop! GNOME takes one minute. (KDE takes

just over a minute on my PC.)

Browsers

On KDE, I could get the Konqueror browser running fairly easily.

But I had to add about 250 Meg of swap space to get the WebKit

library to link with the low memory on my PC. WebKit is the rendering

engine. I like the way Konqueror uses many of the same libraries as

other parts of KDE. It makes Konqueror a bit lighter when running

inside of KDE. (I have put the commands I use to add swap at the

end).

With Firefox 3, I had

to turn the compiler optimization off with the "-O0" switch

to avoid thrashing with the small memory on my old laptop. I also had

to leave out a lot of the components in "libxul". Otherwise

the link of libxul ends up just swapping in and out as well. (I

understand XUL is the toolkit used to build the user interface of

Mozilla). Firefox does not work without a real libxul though.

I thought I would try building GNOME's own browser -

Epiphany.

But I had trouble configuring it to use the Gecko rendering libraries

in the Mozilla Firefox 3 browser I had just built. Epiphany offers a

simpler interface than Firefox and concentrates just on web browsing,

taking features like mail from other GNOME applications.

Unfortunately Epiphany seems to expect "pkg-config" entries

for Gecko, which Firefox 3 had not generated. (When you install a

library, a file in /usr/lib/pkgconfig is created, listing the

compiler flags (-L, -l and -I) needed by a program using the

library.) I manually added in all the Firefox shared libraries I

could find. But there seemed to be some core Firefox functions that

Epiphany still could not access. However, Epiphany does really

recommend Firefox 2. But Firefox 2 uses a lot of deprecated functions

in my more modern version of Cairo. I got lost in all the errors.
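For readers who have not looked inside a pkg-config entry, the sketch below prints a minimal one. The library name "example" and the function name are placeholders; this is not the entry Epiphany expects for Gecko, only an illustration of the file format described above.

```shell
# make_pc NAME VERSION PREFIX
# Prints a minimal pkg-config (.pc) file for a library called NAME
# installed under PREFIX.
make_pc() {
    name=$1 version=$2 prefix=$3
    # \${...} is left for pkg-config to expand; $name etc. are filled in now.
    cat <<EOF
prefix=$prefix
libdir=\${prefix}/lib
includedir=\${prefix}/include

Name: $name
Description: hand-written entry for $name
Version: $version
Libs: -L\${libdir} -l$name
Cflags: -I\${includedir}/$name
EOF
}
```

Saving the output of `make_pc example 1.0 /usr/local` as example.pc in a directory that pkg-config searches lets `pkg-config --cflags --libs example` return the -I, -L and -l flags a configure script asks for.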

Then I noticed that Epiphany's web page mentions an

experimental

version using WebKit as the

browser engine. But the configuration seemed to have incomplete

autoconf/automake files and use the Apple xcodebuild tool. It was too

difficult for me. You can begin to see there is some skill required

to build a complete distribution!

I later learnt from a GNOME

blog

that the Mozilla version of Epiphany is not actively developed any

more, because about a year ago GNOME started to switch to WebKit. But

the switch has not been completed. It was originally scheduled to be

delivered with GNOME 2.26, but has been postponed to GNOME 2.28. I

remembered that WebKit is a fork of KDE's KHTML browser engine used

by Konqueror. It was made by Apple for their Safari browser, and more

recently used by Google in its Chrome browser.

In the end, I built the Dillo

browser. It used to use GTK and GLib. But it now uses the

FLTK

(Fast Light Tool Kit) - a C++ GUI toolkit. Of course, it does not

support JavaScript or Java.

Strangely enough, Konqueror runs quite well within GNOME! I think

I will use it when developing complicated web pages which use

JavaScript and AJAX.

Problems compiling packages

Would you enjoy building programs from the source tarballs? I

think you probably need some knowledge of C. Not too much, as I

didn't know a lot about C++ when I built KDE. There always seem to

be a few problems with compiling packages. Here are a few to give you

an idea.

A simple problem occurred when I was building the

"dbus-glib-bindings" package:

DBUS_TOP_BUILDDIR=.. dbus-send --system --print-reply=literal --dest=org.freedesktop.DBus /org/freedesktop/DBus org.freedesktop.DBus.Introspectable.Introspect > dbus-bus-introspect.xml.tmp && mv dbus-bus-introspect.xml.tmp dbus-bus-introspect.xml

Failed to open connection to system message bus: Failed to connect to socket /usr/local/var/run/dbus/system_bus_socket: Connection refused

I guessed it wanted the dbus system bus running. I remembered

something from building D-Bus last year, had a look around, and ran

"dbus-daemon --system".

A typical problem was with gnome-terminal. It included the

"dbus-glib-bindings.h" header file which itself included

the glib header "glib-2.0/glib/gtypes.h". But glib did not

want its header file directly included and had put a "#error"

statement in to give a warning. I solved this by crudely commenting

out the #error.

The strangest problem I got was when running GStreamer's configure

script:

./configure: line 32275: syntax error near unexpected token `('

./configure: line 32275: ` for ac_var in `(set) 2>&1 | sed -n 's/^\([a-zA-Z_][a-zA-Z0-9_]*\)=.*/\1/p'`; do'

I tried narrowing it down by putting in some simple echo statements

and then I changed some backticks to the more modern "$()".

But I had the same problem with the GStreamer Plugins package. So I

decided to upgrade my version of Bash, which was a year old. The LFS

project suggests using the "malloc" (memory allocation)

from the libc library rather than that bundled with Bash, so I also

ran configure with the option "--without-bash-malloc". All

this luckily fixed the problem.

Out of interest, I have added the commands I typed (over sixty

times) to build the packages, at the end of this

article.

GNOME libraries

I enjoy reading about the libraries as I build them. I also wanted

to draw a diagram showing which packages needed to be built first.

The libraries are, in fact, described very well in the GNOME

documentation at

http://library.gnome.org/devel/platform-overview.

The libraries shown below are used in various combinations by the

GNOME applications you see on the desktop.

My diagrams show the dependencies between packages. For example,

GTK requires Pango and Cairo. A package usually generates many

libraries, so it is nice to think at the package level. I believe you

can generate graphs of dependencies automatically from the GNOME

build systems.
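
Working out which packages to build first is a topological sort of the dependency graph. The Python sketch below shows the idea using the standard library's graphlib module (Python 3.9 or later). Only "GTK requires Pango and Cairo" comes from the text above; the other edges are my reading of the diagram and should be treated as assumptions, not as the packages' complete requirements.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Maps each package to the packages that must be built before it.
# Apart from gtk+ needing pango and cairo, these edges are assumptions
# read off the diagram, not a full list of requirements.
NEEDS = {
    "pixman": [],
    "glib": [],
    "cairo": ["pixman"],
    "pango": ["glib", "cairo"],
    "atk": ["glib"],
    "gtk+": ["glib", "pango", "cairo", "atk"],
}

# static_order() yields every package after all of its prerequisites.
order = list(TopologicalSorter(NEEDS).static_order())
print(" -> ".join(order))
```

Several orders are valid (pixman and glib can be built in either order, for instance), so the exact output may vary. A circular dependency would make static_order() raise graphlib.CycleError.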

I have included some notes that I found in the README file or

documentation that came with the packages.

I did not build most of the audio or video libraries, as I don't

play music or watch videos on my laptop! It also keeps things simple.

Base libraries

/|\ /|\

+-------------------+

| pygtk |

| pycairo |

| pygobject | /|\ /| |\

| (python bindings) | +---- gail ----+ +-----------+ +------+

+-------------------+ | (GNOME | | dbus-glib | |libxml|

/|\ |accessibility)| | bindings | | |

+----------------------- gtk+ -----------------------+ +-----------+ +------+

| (GIMP Tool Kit - graphical user interfaces) | | D-Bus |

|-- pango --+--------+ +---- atk -----+ | (message |

|(rendering)| libpng | +---------+(accessibility| | passing |

| of text) | libjpeg| | libtiff | interfaces) | | system) |

+----------------------- cairo ----------------------+ +-----------+

| (2D graphics library for multiple output devices) |

+------------------+------------ pixman -------------+

| (low level pixel |

| manipulation, e.g. compositing) |

+----------------------------------

/|\

+-------- gvfs ---------+

| (backends include |

/|\ | sftp, smb, http, dav) |

+----------------------- glib -----------------------+

|(data structures, portability wrappers, event loop, |

|threads, dynamic loading, algorithms, object system,|

|GIO - the virtual file system replacing gnome-vfs) |

+----------------------------------------------------+

Notes

The GIO library in GLib

tries not to clone the POSIX API like the older gnome-vfs package,

but instead provides higher-level, document-centric interfaces. The

GVFS backends for GIO are run in separate processes, which minimizes

dependencies and makes the system more robust. The GVFS package

contains the GVFS daemon, which spawns further mount daemons for each

individual connection.

The core Pango layout engine

can be used with different font and drawing backends. GNOME uses

FreeType, fontconfig, and Cairo. But on Microsoft Windows, Pango can

use the native Uniscribe fonts and render with the Win32 API.

The Cairo documentation

says it is "designed to produce consistent output on all

output media while taking advantage of display hardware acceleration

when available (for example, through the X Render Extension) ... The

cairo API provides operations similar to the drawing operators of

PostScript and PDF. Operations in cairo include stroking and filling

cubic Bezier splines, transforming and compositing translucent

images, and antialiased text rendering. All drawing operations can be

transformed by any affine transformation (scale, rotation, shear,

etc.)."
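
The affine transformations mentioned in the quotation can be worked through by hand. The Python sketch below does not call Cairo at all; it only borrows the six-number matrix layout that Cairo uses (xx, yx, xy, yy, x0, y0), where a point (x, y) maps to (xx*x + xy*y + x0, yx*x + yy*y + y0). The helper names are my own.

```python
import math
from collections import namedtuple

# Same field layout as cairo's matrix type; the helpers below are
# illustrative and are not part of any Cairo binding.
Matrix = namedtuple("Matrix", "xx yx xy yy x0 y0")


def scale(sx, sy):
    """Stretch by sx horizontally and sy vertically."""
    return Matrix(sx, 0.0, 0.0, sy, 0.0, 0.0)


def rotate(radians):
    """Rotate about the origin by the given angle."""
    c, s = math.cos(radians), math.sin(radians)
    return Matrix(c, s, -s, c, 0.0, 0.0)


def shear_x(k):
    """Slide each point sideways in proportion to its y coordinate."""
    return Matrix(1.0, 0.0, k, 1.0, 0.0, 0.0)


def apply(m, x, y):
    """Transform the point (x, y) by the matrix m."""
    return (m.xx * x + m.xy * y + m.x0, m.yx * x + m.yy * y + m.y0)


print(apply(scale(2, 3), 1, 1))
print(apply(shear_x(0.5), 1, 2))
print(apply(rotate(math.pi / 2), 1, 0))
```

The scale maps (1, 1) to (2, 3) and the shear maps (1, 2) to (2, 2). The quarter-turn rotation maps (1, 0) to (0, 1), give or take floating-point rounding.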

GTK+ was first developed as a

widget set for the GIMP (GNU Image Manipulation Program). The FAQ

mentions one of the original authors, Peter Mattis, saying "I

originally wrote GTK which included the three libraries, libglib,

libgdk and libgtk. It featured a flat widget hierarchy. That is, you

couldn't derive a new widget from an existing one. And it contained a

more standard callback mechanism instead of the signal mechanism now

present in GTK+. The + was added to distinguish between the original

version of GTK and the new version. You can think of it as being an

enhancement to the original GTK that adds object oriented features."

The GTK+ package contains GDK, the GTK+ Drawing Kit, an easy-to-use

wrapper around the standard Xlib function calls for graphics and

input devices. GDK provides the same API on MS Windows.

The GAIL

documentation says "GAIL provides an implementation of the

ATK interfaces for GTK+ and GNOME libraries, allowing accessibility

tools to interact with applications written using these libraries ...

ATK provides the set of accessibility interfaces that are implemented

by other toolkits and applications. Using the ATK interfaces,

accessibility tools have full access to view and control running

applications." GAIL stands for GNOME Accessibility

Implementation Layer. It has just been moved into GTK.

D-BUS is a simple

inter-process communication (IPC) library based on messages. The

documentation says "a core concept of the D-BUS

implementation is that 'libdbus' is intended to be a low-level API,

similar to Xlib. Most programmers are intended to use the bindings to

GLib, Qt, Python, Mono, Java, or whatever. These bindings have

varying levels of completeness."

PyGObject allows you to use GLib, GObject and GIO from Python

programs.

Platform libraries

gnome-icon-theme

/|\

icon-naming-utils

(xml to translate old icon names)

libgnomeui*

(a few extra gui widgets, many now in gtk)

/|\

+---------------------------+

| |

libbonoboui* gnome-keyring

(user interface controls (password and secrets daemon

for component framework) and library interface)

/|\ |

| libtasn1

| (Abstract Syntax Notation One)

|

+--------------------------------------+

| |

libgnome* libgnomecanvas*

(initialising applications, starting programs, (structured graphics:

accessing configuration parameters, activating polygons, beziers, text

files and URI's, displaying help) pixbufs, etc. Rendered

/|\ by Xlib or libart)

+--------------------+----------+ |

| | /|\