...making Linux just a little more fun!

June 2006 (#127):

- Talkback, by Kat Tanaka Okopnik

- The Mailbag, by Kat Tanaka Okopnik

- The Mailbag (page 2), by Kat Tanaka Okopnik

- The Mailbag (page 3), by Kat Tanaka Okopnik

- News Bytes, by Howard Dyckoff

- FVWM: How Styles are Applied, by Thomas Adam

- FvwmEvent: conditional window checking by example, by Thomas Adam

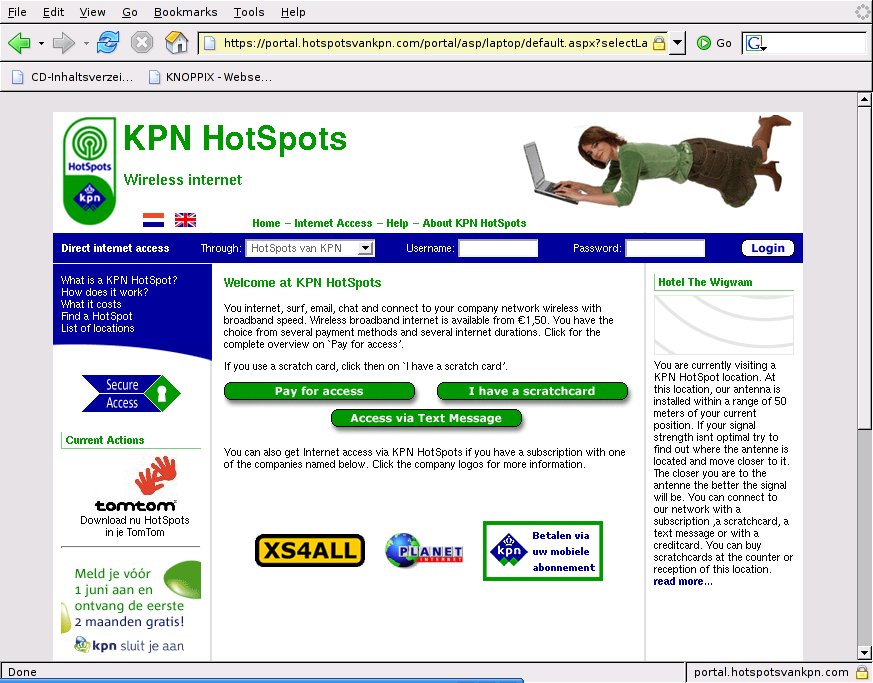

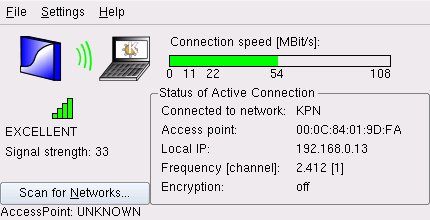

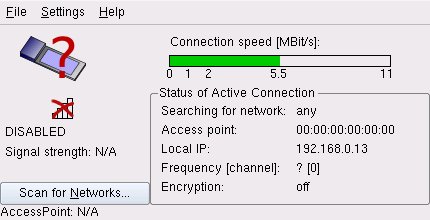

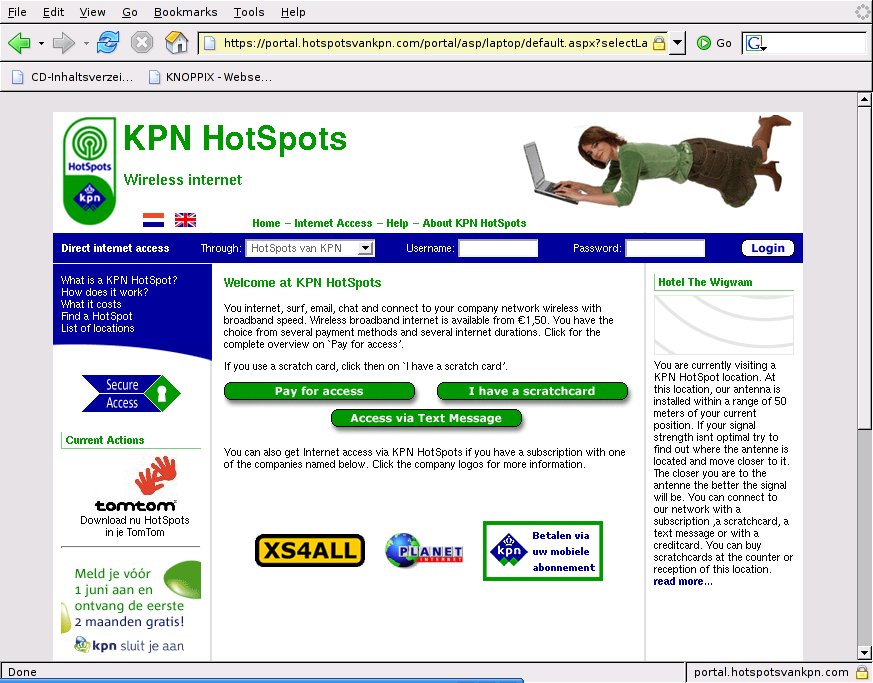

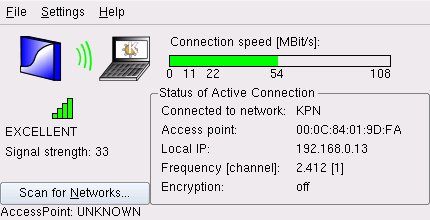

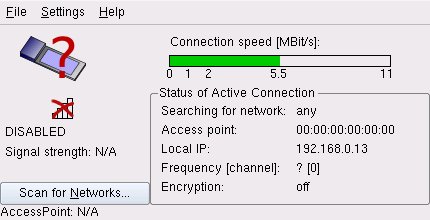

- With Knoppix at a HotSpot, by Edgar Howell

- Review: amaroK (audio player for KDE), by Pankaj Kaushal

- State of the antispam regime, by Rick Moen

- Ecol, by Javier Malonda

- The Linux Launderette

Talkback

By Kat Tanaka Okopnik

TALKBACK

Submit comments about articles, or articles themselves (after reading our guidelines) to The Editors of Linux Gazette, and technical answers and tips about Linux to The Answer Gang.

Talkback:123/bechtel.html

[In reference to the article Re-compress your gzipp'ed

files to bzip2 using a Bash script (HOWTO) - By Dave Bechtel]

Dmitri Rubinstein (rubinste at graphics.cs.uni-sb.de)

Thu Feb 9 01:28:46 PST 2006

There is the following code in the script:

# The Main Idea (TM)

time gzip -cd $f2rz 2>>$logfile \

|bzip2 > $f2rzns.bz2 2>>$logfile

# rc=$?

[ $? -ne 0 ] && logecho "!!! Job failed."

# XXX Currently this error checking does not work, if anyone can fix it

# please email me. :-\

The gzip exit code will always be ignored, because $? only reports the status of the last command in the pipeline (bzip2), so the error checking cannot work correctly.

"set -o pipefail" should help in this case; however, it is only available since bash 3.0.

It may be better to use the PIPESTATUS array variable, e.g.:

> true | false | true

> echo ${PIPESTATUS[*]}

0 1 0
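A rough sketch of how the PIPESTATUS idea might be applied, not the script author's actual fix. The helper name check_pipe is made up for illustration, and PIPESTATUS itself is bash-specific, so this assumes the script runs under bash.

```shell
#!/bin/bash
# check_pipe (hypothetical helper): succeeds only if every exit status
# passed to it is 0. Call it with a snapshot of PIPESTATUS taken right
# after the pipeline, because the next command overwrites the array.
check_pipe() {
    local rc
    for rc in "$@"; do
        [ "$rc" -eq 0 ] || return 1
    done
}

# Demonstration: the first stage fails, the last one succeeds, so $? alone
# would report success.
false | cat > /dev/null
if ! check_pipe "${PIPESTATUS[@]}"; then
    echo "a stage of the pipeline failed"
fi

# In the script, the same check could follow the real pipeline, e.g.:
#   gzip -cd "$f2rz" | bzip2 > "$f2rzns.bz2"
#   check_pipe "${PIPESTATUS[@]}" || logecho "!!! Job failed."
```

Passing "${PIPESTATUS[@]}" as arguments works because the array is expanded before the function is called, so the helper sees the statuses of the pipeline rather than of itself.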

Talkback:123/smith.html

[In reference to the article A

Short Tutorial on XMLHttpRequest() - By Bob Smith ]

Willem Steyn (wsteyn at pricetag.co.za)

Mon Feb 20 09:30:41 PST 2006

This example in the 4th exercise works great. Just one problem that

I have with all these AJAX & XMLHttpRequest examples is that the user's browser (IE)

prompts every time at the call-back that the page is trying to access off-site

pages that are not under its control, and that it poses a security risk. How

do you get past this without asking the user to turn off the security settings?

Take for example a real-world scenario where a transactional page must

get a real-time transaction back from another server-based page. Then upon clicking

something like a SUBMIT button, the user is going to get this little message

popping up, stating that it is a security risk. Any ideas?

Thanks in advance.

[Ben] -

Hi, Willem -

First off, please turn off the HTML formatting when emailing TAG. Many people

in help forums, including a number of people here, will not take the trouble

to answer you if you require them to wade through HTML markup, and will discard

your mail unread; others will just be annoyed at having to clean up the extraneous

garbage. In addition, you've tripled the size of your message. I've corrected

the above problems, but some information may have been lost in the process.

Pardon me... you've used 'IE' and 'security' in the same sentence, without

the presence of modifiers such as 'bad' or 'non-existent'. I'm afraid that

makes your sentence unparseable. :)

As I sometimes point out to people, the term "Linux" in "Linux

Gazette" tends to imply certain things - one of which is that answering

questions like "How do I make IE do $WHATEVER" comes down to "use

Linux". Asking us how to fix broken crapware in a legacy OS is, I'm afraid,

an exercise in futility; the very concept of "security settings"

in a *browser*, a client-end application designed for viewing content, is

something that can only be discussed after accepting the postulate that water

does indeed run uphill, and the sun rises in the west.

Perhaps your client can mitigate the problem somewhat by running a browser

that isn't broken-as-designed; Mozilla Firefox is a reasonable example of

such a critter. For anything beyond that, I'd suggest asking in Wind0ws- and

IE-related forums.

Talkback:124/orr.html

[In reference to the article PyCon 2006 Dallas - By Mike

Orr (Sluggo) ]

David Goodger (goodger at python.org)

Sun Mar 5 07:49:28 PST 2006

There was a grocery store (Tom Thumb's) a 30-minute walk away, or you could

take the hotel's free shuttle service. Alternatively, a Walmart with a large

grocery section was a 10-minute walk away, just behind the CompUSA store (not

to be confused with the CompUSA headquarters building beside the hotel).

Talkback:124/smith.html

(1)

[In reference to the article Build a Six-headed, Six-user Linux System - By Bob Smith ]

Richard Neill (rn214 at hermes.cam.ac.uk)

Sat Mar 4 09:23:16 PST 2006

Re multi-seat computers, I think you are heading for much pain to use the

/dev/input/X devices directly!

If USB devices are powered on in a different order, these will move around!

Use Udev rules, and symlinks....

I have 4 mice on my system (don't ask why!); here is my config in case it is

useful:

/etc/udev/rules.d/10-local.rules

------------------------------------------------------

#Rules for the various different mice on the system (the PS/2, Synaptics and Trackpoint)

#PS/2 mouse (usually /dev/input/mouse0)

#Symlink as /dev/input/ps2mouse

BUS="serio", kernel="mouse*", SYSFS{description}="i8042 Aux Port",

NAME="input/%k", SYMLINK="input/ps2mouse"

#Synaptics touchpad (in mouse mode) (usually /dev/input/mouse1)

#Symlink as /dev/input/synaptics-mouse

BUS="usb", kernel="mouse*", DRIVER="usbhid",

SYSFS{bInterfaceClass}="03", SYSFS{bInterfaceNumber}="00",

SYSFS{interface}="Rel", NAME="input/%k", SYMLINK="input/synaptics-mouse"

#Synaptics touchpad (in event mode) (usually /dev/input/event5)

#Symlink as /dev/input/synaptics-event

BUS="usb", kernel="event*", DRIVER="usbhid",

SYSFS{bInterfaceClass}="03", SYSFS{bInterfaceNumber}="00",

SYSFS{interface}="Rel", NAME="input/%k", SYMLINK="input/synaptics-event"

#Trackpoint (usually /dev/input/mouse2)

#Symlink as /dev/input/trackpoint

BUS="usb", kernel="mouse*", DRIVER="usbhid",

SYSFS{bInterfaceClass}="03", SYSFS{bInterfaceNumber}="01",

SYSFS{interface}="Rel", NAME="input/%k", SYMLINK="input/trackpoint"

#WizardPen (usually /dev/input/event6)

#Symlink as /dev/input/wizardpen

BUS="usb", kernel="event*", DRIVER="usbhid",

SYSFS{bInterfaceClass}="03", SYSFS{bInterfaceNumber}="00",

SYSFS{interface}="Tablet WP5540U", NAME="input/%k", SYMLINK="input/wizardpen"

-----------------------------------------

Then I can configure paragraphs in xorg.conf like this:

Section "InputDevice"

Identifier "ps2mouse"

Driver "mouse"

Option "Device" "/dev/input/ps2mouse"

Option "Protocol" "ExplorerPS/2"

Option "ZAxisMapping" "6 7"

EndSection

Section "InputDevice"

Identifier "trackpoint"

Driver "mouse"

Option "Device" "/dev/input/trackpoint"

Option "Protocol" "ExplorerPS/2"

Option "ZAxisMapping" "6 7"

#We want to use emulatewheel + emulatewheeltimeout. But need Xorg 6.9 for that

#Option "EmulateWheel" "on"

#Option "EmulateWheelButton" "2"

#Option "EmulateWheelTimeout" "200"

#Option "YAxisMapping" "6 7"

#Option "XAxisMapping" "4 5"

#Option "ZAxisMapping" "10 11"

EndSection

[Bob] - Picture Bob smacking his head and saying "Doh!" You are right

and the next article, the one where I get it *all* working, will use udev and

symlinks.

Using /dev/input was not too bad since the USB bus is enumerated the same way

on each power up. I only had to move things around once right after the initial

set up.

[[RichardN]] - I shall be interested to see how it goes, especially if you

can make the resulting system stable. Also, I wonder whether you can use udev

to distinguish between 6 different copies of a nominally identical item? Some

may let you use a serial number. Using the BUS id would be cheating - one ought

to be able to plug it together randomly and have it work!

P.S. An interesting challenge would be to get separate audio channels working

(eg a 5.1 channel motherboard could give 2 1/2 stereo outputs!) Or you could

use alsa to multiplex all the users together so that everyone can access the

speakers (and trust the users to resolve conflicts).

[Ben] -

> I have 4 mice on my system (don't ask why!); here is my config in case

> it is useful:

All right, I won't ask.

...

OK, maybe I will. :) So, why do you have _4_ mice on your system? And what's

the difference between 'mouse mode' and 'event mode'?

[[RichardN]] - OK. I have an IBM ultranav keyboard containing a trackpoint

and a touchpad.

1)The trackpoint is my primary mouse.

2)The touchpad is also used as a mouse (mainly for scrolling). It appears

as 2 different devices, so you can either treat it as a mouse (I called this

mouse-mode), or as an event-device. The latter is the way to use its advanced

features with the synaptics driver, but I'm not actually doing this for now.

3)A regular, PS2 mouse, for things like PCB design.

4)A Graphics tablet

[Ben] - Perhaps you should just write an article on Udev. :)

[[RichardN]] - You're very kind. But there is one already: http://reactivated.net/udevrules.php

[Ben] - [snip code] I can see a lot of sense in that. Judging from this example,

I should be able to figure out a config file that will keep my flash drive at

a single, consistent mountpoint (instead of having to do "tail /var/log/messages"

and mounting whatever random dev it managed to become.) I've heard of Udev before,

and even had a general idea of what it did, but never really explored it to

any depth. Nice!

[[RichardN]] - Indeed. You'll get a random /dev/sdX, but a consistent symlink

/dev/my_flash_drive (which you specify). Then, you can specify where it should

be mounted via options in fstab.

Even better: you can specify that a digital camera should have umask and

dmask (in fstab), which will fix the annoying tendency of JPGs to be marked

as executable!

Talkback:124/smith.html

(2)

[In reference to the article Build a Six-headed, Six-user Linux System - By Bob Smith ]

Andy Lurig (ucffool at comcast.net)

Sun Mar 5 10:19:42 PST 2006

Have you tried a USB audio solution for each station as well, and how

would that be worked into the configuration?

[Bob] - I have not tried it yet and do not know. The keyboards in my system

have a built in, 1 port hub. I was going to try to tie that hub to the user

at that keyboard. So, a flash drive plugged in would only be visible by that

user. Kind of the same with audio; they would have to bring their own audio

set but it would work if they plugged it in.

BTW: Two readers have mentioned http://linux-vserver.org

as a way to securely isolate users from each other.

Talkback:124/smith.html

(3)

[In reference to the article Build a Six-headed, Six-user Linux System - By Bob Smith ]

Richard June (rjune at bravegnuworld.com)

Tue Mar 7 06:37:46 PST 2006

I do a lot with LTSP, so I'm familiar with sharing a machine between multiple

users. KDE's kiosktool will let you do quite a bit to disallow the more CPU

intensive screensavers. Verynice is also helpful in keeping rogue processes

in check.

Talkback:124/smith.html

(4)

[In reference to the article Build a Six-headed, Six-user Linux System - By Bob Smith ]

vinu (vinu at hcl.in )

Wed Mar 8 22:24:36 PST 2006

I worked on this multi-terminal setup for around six months (from Dec 2004 to July 2005) with RHEL 3.0 and RHEL 4.0, and built it with both ATI and NVIDIA cards. My biggest problem was that many applications misbehave on such a setup, especially sound applications. I rectified many of these problems in some weird ways. Some apps, like the SWF plugin for Firefox, caused big problems: only one instance of the plugin could be used, because the sound is always redirected to the same device, say /dev/dsp. To solve this problem we used a very ugly workaround: we mounted the same /tmp folder for all the users and made the app think that it was using a separate /tmp and that the device is always /dev/hda. This actually ruins the security of Linux to a big extent. Other apps, such as tuxracer and konquest, use the video cards directly (DRI enabled). So I think application design plays a vital role in such a setup.

The last kernel I used was 2.9.11, and I haven't tried any of the new patches or solutions that have appeared in the last 8-10 months, but I am sharing my other observations as well:

1) I tried to build this setup on Intel-made motherboards, which was an unsuccessful attempt for which I never found a good explanation.

2) These setups work well on AMD and some MSI motherboards, with Intel or NVIDIA chipsets and NVIDIA cards.

3) The NVIDIA/AMD combination shows excellent performance compared with the others.

4) Applications like mplayer behave somewhat unpredictably even when configured properly.

5) If you are using audio/visual applications, the application should be configurable (e.g., mplayer, in which we can select the audio device from the configuration options, and which also supports ALSA devices).

6) For a normal user, it's not possible to configure applications like the SWF plugin for Firefox for all six users.

7) This setup is best suited to a lab environment and to newbies, because for advanced users the CPU speed may not be sufficient (for example, a kernel compilation badly degrades the performance of the whole system).

I don't know whether these observations are still correct in the current situation, but I think most are still relevant.

regards

vineesh

[Bob] - Thanks, Vineesh.

My solution to the sound problem was to turn it off for everyone -- not a very

elegant solution. A couple of readers have pointed out a system that would break

the PC into six separate systems, each with its own sound, X, and especially

important, security. The virtual server page is at http://linux-vserver.org.

I have not tried it yet but it looks promising.

BTW: another web page dedicated to multi-head Linux PC can be found here:

http://www.c3sl.ufpr.br/multiterminal/index-en.php.

Please let me know if there is anything I can do to help you with your project.

Talkback:124/smith.html

(5)

[In reference to the article Build a Six-headed, Six-user Linux System - By Bob Smith ]

Chuck Sites (syschuck at kingtut.spd.louisville.edu)

Wed Mar 15 13:31:29 PST 2006

Hi Bob,

I've been posting on Chris Tyler's blog regarding my multiseat configuration.

I've also had problems with a kernel Oops when the last person (seat 1 of a

two-seat configuration) logs out. I was looking at your kernel Oops message, and

the call trace is very similar to mine. I was wondering if you still have that

system running, and if so, could you send me a copy of an 'lspci -vvv'? Also,

I'm interested in hearing your experiences using the 'nv' driver. Were you having

a similar Oops?

Best Regards,

Chuck Sites

[Bob] - The system is not running right now but I can set it up again fairly

easily. What did you want to know from the 'lspci -vvv'?

I tried both the nv and vesa drivers. Neither could successfully get all

six heads working. The nVidia driver was easily the best driver I tested.

Talkback:124/smith.html

(6)

[In reference to the article Build a Six-headed, Six-user Linux System - By Bob Smith ]

Claude Ferron (cferron at gmail.com)

Fri Mar 17 19:23:24 PST 2006

When I try to start a screen with the -sharevts option, CPU usage climbs

to 100%....

I have the following in the system:

Kernel 2.6.15.6

6 x nVidia Corporation NV5M64 RIVA TNT2

Xorg version 6.9 on slackware 10.2

[Bob] - Could you give a little more information?

What was the full line to invoke X?

Does the error occur on the first head or the last?

Could you send the x.org configuration for one head?

I saw this problem several times, but each time it was because I mis-typed

a line in the x.org config file.

thanks

This page edited and maintained by the Editors of Linux Gazette

![[BIO]](../gx/authors/tanaka-okopnik.jpg)

Kat likes to tell people she's one of the youngest people to have learned

to program using punchcards on a mainframe (back in '83); but the truth is

that since then, despite many hours in front of various computer screens,

she's a computer user rather than a computer programmer.

When away from the keyboard, her hands have been found full of knitting

needles, various pens, henna, red-hot welding tools, upholsterer's shears,

and a pneumatic scaler.

Copyright © 2006, Kat Tanaka Okopnik. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 127 of Linux Gazette, June 2006

The Mailbag

By Kat Tanaka Okopnik

GAZETTE MATTERS

"Open Source State of the Union" in Boston

Benjamin A. Okopnik (ben at linuxgazette.net)

Wed Mar 29 00:50:24 PST 2006

Hi, Gang -

Anyone interested in hearing Bruce Perens do his "Open Source

State of the Union" speech in Boston (4/5/2006), please let me know and

I'll arrange a press pass for you (it would be a Very Nice Thing if you sent

in a conference report as a result.) Do note that this usually requires a recently-published

article with your name on it.

In fact, if you'd like to attend any other industry conferences, the

same process applies. I will ask that you do a little research in those cases

and send me the contact info for the group that's running the particular con

you're interested in.

FOLLOWUPS from Previous Mailbags

USB MIDI

Bob van der Poel (bvdp at uniserve.com)

Sun Feb 26 18:31:54 PST 2006

Following up on a previous

discussion

Just to follow up on this ... I have it working perfectly now. A few

fellows on the ALSA mailing list gave me a hand and found out that for some reason

the OSS sound stuff was being loaded and then the ALSA was ignored (still, I

don't see why a cold plug did work). But, the simple solution was to delete

or rename the kernel module "usb-midi.ko.gz" so that the system can't

find it. Works like a damn.

GENERAL MAIL

Default inputs for program at cmd line

Brian Sydney Jathanna (briansydney at gmail.com)

Tue Mar 14 18:18:19 PST 2006

Hi all,

I was just wondering if there is a way to enter the default inputs

at the cmdline recursively when a program asks for user input. For eg. while

compiling the kernel it asks for a whole lot of interactions wherein you just

keep pressing Enter. The command 'yes' is not an option in this case cos at

some stages the default input would be a No. Help appreciated and thanks in

advance.

[Thomas] - Sure. You can use 'yes' of course -- just tell it not to actually

print any characters:

yes "" | make oldconfig

That will just feed into each stage an effective "return key press" that

accepts whatever the default is.
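To make the effect visible without running a kernel configuration, here is a small sketch. The functions ask and configure are hypothetical stand-ins for an interactive program whose questions each have their own default; nothing here comes from the kernel build system.

```shell
# ask QUESTION DEFAULT: read one line from stdin and fall back to DEFAULT
# when the line is empty (i.e. the user "just pressed Enter").
ask() {
    local answer
    read -r answer
    echo "$1: ${answer:-$2}"
}

# A made-up configurator with mixed defaults (some yes, some no).
configure() {
    ask "Enable feature A" y
    ask "Enable feature B" n
    ask "Enable feature C" y
}

# 'yes ""' emits an endless stream of empty lines, so every question
# receives an empty answer and keeps its own default.
yes "" | configure
```

Because each empty line selects that question's own default, this handles the case where some defaults are "no", which a plain 'yes' (answering "y" everywhere) would not.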

[[Ben]] - Conversely, you can use a 'heredoc' - a shell mechanism designed

for just that reason:

program <<!

yes

no

maybe

!

In the above example, "program" will receive four lines of input via

STDIN (not command-line arguments): 'yes<Enter>', 'no<Enter>', 'maybe<Enter>', and, given an

empty line before the closing '!', the <Enter> key all by itself.
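The heredoc behaviour can be checked with a small sketch like the following. The function named program is a hypothetical stand-in that simply numbers the lines it reads and labels empty ones; the empty line before the closing '!' is what produces the bare <Enter>.

```shell
# Hypothetical stand-in for an interactive program: report every line read
# from stdin, showing "<default>" where the line was empty.
program() {
    local n=0 line
    while IFS= read -r line; do
        n=$((n + 1))
        echo "answer $n: ${line:-<default>}"
    done
}

program <<!
yes
no
maybe

!
```

Unlike 'yes ""', a heredoc lets each answer differ, at the cost of having to know the order of the questions in advance.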

Help with file transfer over parallel port.

sam luther (smartysam2003 at yahoo.co.in)

Sat Mar 18 12:32:22 PST 2006

I want to develop C code to transfer files from one PC to another PC

over the parallel ports (DB-25 on my machine) of Linux machines. I have the 18-wire

cable ready but am not sure about the connections or how to open and control

the parallel port. Please help: advice, sample code, and relevant material would

be very helpful. Thanks.

[Thomas] - Well, if this is Linux -> Linux, set up PLIP.

(Searching linuxgazette.net for 'PLIP' will yield all the answers you need).

Having done that you can set up NFS or sshfs, or scp, in the normal way.

[Jimmy] - Hmm. Advice, sample code, relevant material...

http://linuxgazette.net/issue95/pramode.html

http://linuxgazette.net/118/deak.html

http://linuxgazette.net/122/sreejith.html

http://linuxgazette.net/112/radcliffe.html

are just some of the articles published in previous issues of LG that discuss

doing various different things with the parallel port. The article in issue

122 also has diagrams, which may be of help.

Can a running command be modified?

Brian Sydney Jathanna (briansydney at gmail.com)

Tue Mar 21 14:44:57 PST 2006

Is there a way to modify the commandline of a running process? By

looking at /proc/<pid>/cmdline it looks as though it is readonly even

to root user. It would be helpful to add options or change arguments to a running

command, if this were possible. Is there a way to get around this? Thanks

in advance.

[Thomas] - I don't see how. Most applications only parse what they're told

to at init time, via --command-line options. This means you would have to effectively

restart the application.

However, if said application receives its options via a config file, then

sending that application a HUP signal might help you -- provided that the

application supports the command-line options in the config file.

[[Ben]] - Not that I can make a totally authoritative statement on this, but

I agree with Thomas: when the process is running, what you have is a memory

image of a program _after_ it has finished parsing all the command-line options

and doing all the branching and processing dependent on them; there's no reason

for those command-line parsing mechanisms to be active at that point. Applications

that need this kind of functionality - e.g., Apache - use the mechanism that

Thomas describes to achieve it, with a "downtime" of only a tiny fraction

of a second between runs.
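The reload-on-HUP mechanism can be illustrated with a minimal sketch. This is not how Apache implements it; the config key "verbose", the temporary file, and the function load_config are all invented for the example.

```shell
# Hypothetical daemon fragment: settings live in a config file that is
# re-read whenever the process receives SIGHUP.
config_file=$(mktemp)
echo "verbose=0" > "$config_file"

load_config() {
    # Pick the value of the made-up key "verbose" out of key=value lines.
    verbose=$(sed -n 's/^verbose=//p' "$config_file")
}

trap load_config HUP     # re-read the file on SIGHUP instead of restarting
load_config
echo "verbose is $verbose"

echo "verbose=1" > "$config_file"    # the administrator edits the file...
# ...and signals the running process (BASHPID covers the subshell case,
# falling back to $$ in shells that lack it).
kill -HUP "${BASHPID:-$$}"
echo "verbose is now $verbose"

rm -f "$config_file"
```

The command line itself is never touched: the process simply repeats its option-loading step, which is why this only helps for options the application also accepts from its config file.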

I have a question about rm command.

manne neelima (manne_neelima at yahoo.com)

Wed Mar 22 12:08:12 PST 2006

I have a question about the rm command. Would you please tell me how to

remove all the files except a certain folder in Unix?

Thanks in Advance

Neelima

[Thomas] - Given that the 'rm' command is picky about removing non-empty directories

anyway (unless it is used with the '-r' flag) I suspect your question is:

"How can I exclude some file from being removed?"

... to which the answer is:

"It depends on the file -- what the name is, whether it has an 'extension',

etc."

Now, since you've provided next to nothing about any of that, here's a contrived

example. Let's assume you had a directory "/tmp/foo" with some files

in it:

```
[n6tadam at workstation foo]$ ls
a b c
```

Now let us add another directory, and add some files into that:

```
[n6tadam at workstation foo2]$ ls
c d e f g
```

Let's now assume you only wanted to remove all the files in foo:

```
[n6tadam at workstation foo]$ rm -i /tmp/foo/*
```

Would remove the files 'a', 'b', and 'c'. It would not remove "foo2"

since that's a non-empty directory.

Of course, the answer to your question is really one of globbing. I don't

know what kind of files you want removing, but for situations such as this,

the find(1) command works "better":

```
find /tmp/foo -type f -print0 | xargs -0 rm
```

... would remove all files in /tmp/foo _recursively_ which means the files

in /tmp/foo2 would also be removed. Woops. Note that earlier with the "rm

-i *" command, the glob only expands the top-level, as it ought to. You

can limit find's visibility, such that it will only remove the files from

/tmp/foo and nowhere else:

```
find /tmp/foo -maxdepth 1 -type f -print0 | xargs -0 rm
```

Let's now assume you didn't want to remove file "b" from /tmp/foo,

but everything else. You could do:

```
find /tmp/foo -maxdepth 1 -type f -not -name 'b' -print0 | xargs -0 rm
```

... etc.
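For anyone who wants to try this safely first, here is a sketch that rebuilds the contrived layout in a scratch directory (created with mktemp, so nothing under a real /tmp/foo is touched) and then applies the exclusion. The file names are the made-up ones from the example; '!' is used as the portable spelling of '-not', and -maxdepth and -print0 assume GNU or BSD find.

```shell
# Scratch directory standing in for /tmp/foo.
dir=$(mktemp -d)
touch "$dir/a" "$dir/b" "$dir/c"
mkdir "$dir/foo2"
touch "$dir/foo2/d"

# Remove regular files at the top level only, keeping the one named 'b'.
find "$dir" -maxdepth 1 -type f ! -name 'b' -print0 | xargs -0 rm -f

# List what survived, relative to the scratch directory.
remaining=$(cd "$dir" && find . -type f | sort)
echo "$remaining"

rm -rf "$dir"
```

Only 'b' and the file inside foo2 should be left, since -maxdepth 1 keeps find out of the subdirectory.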

[Ben] - Well, first off, Unix doesn't have "Folders"; I presume that

you're talking about directories. Even so, the 'rm' command doesn't do that

- there's no "selector" mechanism built into it except the (somewhat

crude) "-i" (interactive) option. However, you can use shell constructs

or other methods to create a list of files to be supplied as an argument list

to 'rm', which would do what you want - or you could process the file list and

use a conditional operator to execute 'rm' based on some factor. Here are a

few examples:

# Delete all files except those that end in 'x', 'y', or 'z'

rm *[^xyz]

# Delete only the subdirectories in the current dir

for f in *; do [ -d "$f" ] && rm -rf "$f"; done

# Delete all except regular files

find /bin/* ! -type f -exec /bin/rm -f {} \;

[[Ben]] - Whoops - forgot to clean up after experimenting. :) That last should,

of course, be

find * ! -type f -exec /bin/rm -f {} \;

[[[Francis]]] - My directory ".evil" managed to survive those last

few. And my file "(surprise" seemed to cause a small problem...

[[[[Ben]]]] - Heh. Too right; I got too focused on the "except for"

nature of the problem. Of course, if I wanted to do a real search-and-destroy

mission, I'd do something like

su -c 'chattr +i file1 file2 file3; rm -rf `pwd`; chattr -i *'

[evil grin]

No problems with '(surprise', '.evil', or anything else.

[[[[[Thomas]]]]] - ... that won't work across all filesystems, though.

[[[[[[Ben]]]]]] - OK, here's something that will:

1) Copy off the required files.

2) Throw away the hard drive, CD, whatever the medium.

3) Copy the files back to an identical medium.

There, a nice portable solution. What, you have more objections? :)

Note that I said "if *I* wanted to do a real search-and-destroy mission".

I use ext3 almost exclusively, so It Works For Me. To anyone who wants to

include vfat, reiserfs, pcfs, iso9660, and malaysian_crack_monkey_of_the_week_fs,

I wish the best of luck and a good supply of their tranquilizer of choice.

[[[Francis]]] - (Of course, you knew that.

[[[[Ben]]]] - Actually, I'd missed it while playing around with the other stuff,

so I'm glad you were there to back me up.

[[[Francis]]] - But if the OP cares about *really* all-bar-one, it's worth

keeping in mind that "*" tends not to match /^./, and also that "*"

is rarely as useful as "./*", at least when you don't know what's

in the directory. I suspect this isn't their primary concern, though.)

[[[[Ben]]]] - Well, './*' won't match dot-files any better than '*' will, although

you can always futz around with the 'dotglob' setting:

# Blows away everything in $PWD

(GLOBIGNORE=1; rm *)

...but you knew that already. :)
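A quick way to see the difference for yourself, sketched under the assumption that bash is the shell in use (shopt and dotglob are bash features). The scratch directory and the three file names are invented, borrowing '.evil' and '(surprise' from the thread.

```shell
dir=$(mktemp -d)
touch "$dir/plain" "$dir/.evil" "$dir/(surprise"

# Count what ./* matches by default: dot-files are skipped.
without=$(cd "$dir" && set -- ./* && echo $#)

# Same glob with dotglob switched on; the command substitution runs in a
# subshell, so the setting does not leak into the calling shell.
with=$(cd "$dir" && shopt -s dotglob && set -- ./* && echo $#)

echo "matched without dotglob: $without, with dotglob: $with"
rm -rf "$dir"
```

The './' prefix does not change which names match; its benefit is that awkward names (ones starting with '-', for instance) are handed to commands as paths rather than being mistaken for options.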

[Martin] - try this perl script... This one deletes all hidden files apart

from the ones in the hash.

#!/usr/bin/perl -w

use strict;

use File::Path;

#These are the files you want to keep.

my %keepfiles = (

".aptitude" => 1,

".DCOPserver_Anne__0" => 1,

".DCOPserver_Anne_:0" => 1,

".gconf" => 1,

".gconfd" => 1,

".gnome2" => 1,

".gnome2_private" => 1,

".gnupg" => 1,

".kde" => 1,

".kderc" => 1,

".config" => 1,

".local" => 1,

".mozilla" => 1,

".mozilla-thunderbird" => 1,

".qt" => 1,

".xmms" => 1,

".bashrc" => 1,

".prompt" => 1,

".gtk_qt_engine_rc" => 1,

".gtkrc-2.0" => 1,

".bash_profile" => 1,

".kderc" => 1,

".ICEauthority" => 1,

".hushlogin" => 1,

".bash_history" => 1,

);

my $inputDir = ".";

opendir(DIR,$inputDir) || die("Cannot open directory $inputDir: $!\n");

while (my $file = readdir(DIR)) {

next if ($file =~ /^\.\.?$/); # skip . & ..

next if ($file !~ /^\./); #skip unless begins with .

# carry on if it's a file you wanna keep

next if ($keepfiles{$file});

# Else wipe it

#print STDERR "I would delete $inputDir/$file\n";

# you should probably test for outcome of these

#operations...

if (-d $inputDir . "/" . $file) {

#rmdir($inputDir . "/" . $file);

print STDERR "Deleting Dir $file\n";

rmtree($inputDir . "/" . $file);

}

else {

print STDERR "Deleting File $file\n";

unlink($inputDir . "/" . $file);

}

}

closedir(DIR);

[[Ben]] - All that, and a module, and 'opendir' too? Wow.

perl -we'@k{qw/.foo .bar .zotz/}=();for(<.*>){unlink if -f&&!exists$k{$_}}'

:)

writing to an NFS share truncates files

Ramon van Alteren (ramon at vanalteren.nl)

Wed Feb 8 15:44:14 PST 2006

Hi All,

I've recently built a 9Tb NAS for our serverpark out of 24 SATA disks

& 2 3ware 9550SX controllers. Works like a charm, except....... NFS

We export the storage using nfs version 3 to our servers. Writing onto

the local filesystem on the NAS works fine, copying over the network with scp

and the like works fine as well.

However writing to a mounted nfs-share at a different machine truncates

files at random sizes which appear to be multiples of 16K. I can reproduce the

same behaviour with a nfs-share mounted via the loopback interface.

Following is output from a test-case:

On the server in /etc/exports:

/data/tools 10.10.0.0/24(rw,async,no_root_squash) 127.0.0.1/8(rw,async,no_root_squash)

Kernelsymbols:

Linux spinvis 2.6.14.2 #1 SMP Wed Feb 8 23:58:06 CET 2006 i686 Intel (R) Xeon(TM)

CPU 2.80GHz GenuineIntel GNU/Linux

Similar behaviour is observed with gentoo-sources-2.6.14-r5, same options.

CONFIG_NFS_FS=y

CONFIG_NFS_V3=y

CONFIG_NFS_V3_ACL=y

# CONFIG_NFS_V4 is not set

# CONFIG_NFS_DIRECTIO is not set

CONFIG_NFSD=y

CONFIG_NFSD_V2_ACL=y

CONFIG_NFSD_V3=y

CONFIG_NFSD_V3_ACL=y

# CONFIG_NFSD_V4 is not set

CONFIG_NFSD_TCP=y

# CONFIG_ROOT_NFS is not set

CONFIG_NFS_ACL_SUPPORT=y

CONFIG_NFS_COMMON=y

#root at cl36 ~ 20:29:44 > mount

10.10.0.80:/data/tools on /root/tools type nfs (rw,intr,lock,tcp,nfsvers=3,addr=10.10.0.80)

#root at cl36 ~ 20:29:56 > for i in `seq 1 30`; do dd count=1000 if=/dev/zero of=/root/tools/test.tst; ls -la /root/tools/test.tst ; rm /root/tools/test.tst ; done

1000+0 records in

1000+0 records out

dd: closing output file `/root/tools/test.tst': No space left on device

-rw-r--r-- 1 root root 163840 Feb 8 20:30 /root/tools/test.tst

1000+0 records in

1000+0 records out

dd: closing output file `/root/tools/test.tst': No space left on device

-rw-r--r-- 1 root root 98304 Feb 8 20:30 /root/tools/test.tst

1000+0 records in

1000+0 records out

dd: closing output file `/root/tools/test.tst': No space left on device

-rw-r--r-- 1 root root 98304 Feb 8 20:30 /root/tools/test.tst

1000+0 records in

1000+0 records out

dd: closing output file `/root/tools/test.tst': No space left on device

-rw-r--r-- 1 root root 131072 Feb 8 20:30 /root/tools/test.tst

1000+0 records in

1000+0 records out

dd: closing output file `/root/tools/test.tst': No space left on device

-rw-r--r-- 1 root root 163840 Feb 8 20:30 /root/tools/test.tst

<similar thus snipped>
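For reference, dd with count=1000 and its default 512-byte block size should produce a 512000-byte file, so every size listed above is short. The test loop could be turned into a self-checking helper along these lines; check_write is a hypothetical name, and the sketch is shown against a scratch directory rather than the NFS mount.

```shell
# check_write DIR [COUNT]: write COUNT 512-byte blocks of zeros into
# DIR/test.tst and report whether the file ended up with the expected size.
check_write() {
    local dir=$1 count=${2:-1000}
    local file="$dir/test.tst" expected=$((count * 512)) actual
    dd if=/dev/zero of="$file" count="$count" 2>/dev/null
    actual=$(wc -c < "$file")
    actual=$((actual))    # drop padding that some wc implementations add
    rm -f "$file"
    if [ "$actual" -ne "$expected" ]; then
        echo "TRUNCATED: expected $expected bytes, got $actual"
        return 1
    fi
    echo "ok: $actual bytes"
}

# Example run against a local scratch directory; on the affected setup the
# argument would be the NFS mount point instead.
scratch=$(mktemp -d)
check_write "$scratch" 1000
rmdir "$scratch"
```

Running it in a loop against both the local filesystem and the NFS mount would show at a glance which path loses data.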

I've so far found this.

Which seems to indicate that RAID + LVM + complex storage and 4KSTACKS

can cause problems. However I can't find the 4KSTACK symbol anywhere in my config.

For those wondering.... no it's not out of space:

10.10.0.80:/data/tools 9.0T 204G 8.9T 3% /root/tools

Any help would be much appreciated.......

Forgot to mention:

There's nothing in syslog in either case (loopback mount or remote machine

mount or server)

We're using reiserfs 3 in case you're wondering. It's a raid-50 machine based

on two raid-50 arrays of 4,55 Tb handled by the hardware controller.

The two raid-50 arrays are "glued" together using LVM2:

--- Volume group ---

VG Name data-vg

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 2

Act PV 2

VG Size 9.09 TB

PE Size 4.00 MB

Total PE 2384134

Alloc PE / Size 2359296 / 9.00 TB

Free PE / Size 24838 / 97.02 GB

VG UUID dyDpX4-mnT5-hFS9-DX7P-jz63-KNli-iqNFTH

--- Physical volume ---

PV Name /dev/sda1

VG Name data-vg

PV Size 4.55 TB / not usable 0

Allocatable yes (but full)

PE Size (KByte) 4096

Total PE 1192067

Free PE 0

Allocated PE 1192067

PV UUID rfOtx3-EIRR-iUx7-uCSl-h9kE-Sfgu-EJCHLR

--- Physical volume ---

PV Name /dev/sdb1

VG Name data-vg

PV Size 4.55 TB / not usable 0

Allocatable yes

PE Size (KByte) 4096

Total PE 1192067

Free PE 24838

Allocated PE 1167229

PV UUID 5U0F3v-ZUag-pRcA-FHvo-OJeD-1q9g-IthGQg

--- Logical volume ---

LV Name /dev/data-vg/data-lv

VG Name data-vg

LV UUID 0UUEX8-snHA-dYc8-0qLL-OSXP-kjoa-UyXtdI

LV Write Access read/write

LV Status available

# open 2

LV Size 9.00 TB

Current LE 2359296

Segments 2

Allocation inherit

Read ahead sectors 0

Block device 253:3

[Kapil] - I haven't used NFS in such a massive context so this may not be the

right question.

[[Ramon]] - Doesn't matter, I remember once explaining why I was still at work

and with what problem to the guy cleaning our office (because he asked). He

asked one question which put me on the right track solving the issue....in half

an hour after banging my head against it for two days ;-)

Not intending to compare you to a cleaner... sometimes it helps a lot if

you get questions from a different mindset.

[Kapil] - Have you tested what happens with the user-space NFS daemon?

[[Ramon]] - Urhm, not clear what you mean.... Tested in what sense ?

[[[Kapil]]] - Well. I noticed that you had compiled in the kernel NFS daemon.

So I assumed that you were using the kernel NFS daemon rather than the user-space

one. Does your problem persist when you use the user-space daemon?

[[[[Ramon]]]] - Thanx, no it doesn't.

[[[Kapil]]] - The reason I ask is that it may be the kernel NFS daemon that

is leading to too many indirections for the kernel stack to handle.

[[[[Ramon]]]] - That appears to be the case. I'm writing a mail to lkml &

nfs list right now to report on the bug.

[[[[[Ramon]]]]] - Turned out it did, but with a higher threshold. As soon as

we started testing with files > 100Mb the same behaviour came up again.

It turned out to be another bug related to reiserfs.

For those interested more data is here

and here.

In short: although the reiserfs FAQ says that it supports filesystems of

up to 16 TB with the default options (4K blocks), it supports only 8 TB. It doesn't

fail outright, however, and appears to work correctly when used as a local filesystem;

the problems start showing up over NFS.
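
The size figures above can be checked with a little shell arithmetic. This is only a sketch: the 4K block size and the 16 TB and 8 TB figures come from the message, the extent count comes from the LVM output earlier in this thread, and the idea that the lower limit corresponds to 2^31 rather than 2^32 block numbers is an assumption made for illustration, not something the thread confirms.

```shell
# Block size in bytes (4K blocks, as stated in the message) and one TiB.
block=4096
tib=$((1 << 40))

# 2^32 block numbers of 4 KiB each: the figure quoted from the FAQ.
faq_limit=$(( (1 << 32) * block / tib ))

# ASSUMPTION for illustration: only 2^31 block numbers are usable.
seen_limit=$(( (1 << 31) * block / tib ))

# Logical volume from the LVM output: 2359296 extents of 4 MiB each.
lv_tib=$(( 2359296 * 4 / (1 << 20) ))

echo "FAQ limit:      ${faq_limit} TiB"
echo "Observed limit: ${seen_limit} TiB"
echo "Logical volume: ${lv_tib} TiB"
```

With these numbers the 9 TiB volume sits above the 8 TiB figure but below the advertised 16 TiB, which is consistent with the behaviour described.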

I fixed the problem by using another filesystem. Based on comments on the

nfs-ml and the excellent article in last month's Linux Gazette we switched to

jfs.

So far it's holding up very well. We haven't seen the problems reappear

with files in excess of 1 GB.

Thanx for the help though.

Answer

Blog/Ad-hoc Wireless Network

fahad saeed (fahadsaeed11 at yahoo.com )

Thu Feb 9 20:22:37 PST 2006

Hello,

I am a Linux enthusiast and I would like to be on the answer blog team. How

may this be possible? I have one Linux article to my credit.

http://new.linuxfocus.org/English/December2005/article390.html

Regards

FAHAD SAEED

[Ben] -

Hi, Saeed -

Yep, I saw that - very good article and excellent work! Congratulations to

your entire team.

You're welcome to join the Linux Gazette Answer Gang; simply go to http://lists.linuxgazette.net/mailman/listinfo/tag

and sign up. As soon as you're approved, you can start participating.

[Kapil] -

Hello,

On Fri, 10 Feb 2006, fahad saeed wrote:

> <html><div style='background-color:'><DIV class=RTE>

> <P>Thankyou all for the warm welcome.I will try to be of use.</P>

> <P>Kind Regards</P>

I somehow managed to read that but please don't send HTML mail!

I started reading your article.

Since I'm only learning the ropes with wireless could you send me some URL

indicating how the wireless Ad-Hoc network (the links) are set up? Just to

give you an idea of how clueless I am, I didn't get beyond "iwconfig

wifi mode ad-hoc".

Regards,

Kapil.

P.S. It's great to see such close neighbours here on TAG.

[Kapil] -

Dear Fahad Saeed,

First of all you again sent HTML mail. You need to configure your webmail

account to send plain text mail or you will invite the wrath of Thomas and

Heather [or Kat] (who edit these mails for inclusion in LG) upon

you. This may just be a preference setting but do it right away!

On Fri, 10 Feb 2006, fahad saeed wrote:

> the command you entered is not quite right, I suppose. The cards are

> usually configured (check it with ifconfig) as ath0 etc. The command

> must be (assuming that the card configured is 'displayed' as ath0)

> iwconfig ath0 mode ad-hoc. You can also do the same if you change the

> entry in ifcfg-ath0 to ad-hoc.

Actually, I just used the "named-interface" feature to call my

wireless interface "wifi". So "iwconfig wifi mode ad-hoc"

is the same as "iwconfig eth0 mode ad-hoc" on my system.

> Hope this helps. I didn't get your question exactly. Please let me know

> what exactly you are trying to do and looking for so that I can be of

> more specific help.

My question was what one does after this. Specifically, is the IP address

of the interface configured statically via a command like ifconfig eth0 192.168.0.14

and if so what is the netmask setting?

Just to be completely clear. I have not managed to get two laptops to communicate

with each other using ad-hoc mode. Both sides say the (wireless) link is up,

but I couldn't get them to send IP packets to each other. I have only managed

to get an IP link when there is a common access point (hub).

The problem is that getting wireless cards to work with Linux has been such

a complicated issue in the past that most HOWTOs spend a lot of time explaining

how to download and compile the relevant kernel modules and load firmware.

The authors are probably exhausted by the time they get to the details of

setting up networking :)

[[Ben]] - That's something I'd really like to learn to do myself. I've thought about

it on occasion, and it always seemed like a doable thing - but I never got

any further than that, so a description of the actual setup would be a really

cool thing.

When I read about those $100 laptops that Negroponte et al are cranking out,

I pictured a continent-wide wireless fabric of laptops stretching across,

say, Africa - with some sort of a clever NAT and bandwidth-metering setup

on each machine where any host within reach of an AP becomes a "relay

station" accessible to everyone on that WAN. Yeah, it would be dead slow

if there were only a few hosts within reach of APs... but the capabilities

of that kind of system would be awesome.

I must say that, in this case, "the Devil is not as bad as he's painted".

My experience of wireless cards under Linux has consisted of

1) Find the source on the Net and download it;

2) Unpack the archive in /usr/src/modules;

3) 'make; make install' the module and add it to the list in

"/etc/modules".

Oh, and run 'make; make install' every time you recompile the kernel. Not

what I'd call terribly difficult.

[[[Martin]]] - When my Dad had a wireless card it wasn't that simple...

It was a Netgear something or other USB - I don't think we ever got it to work

in Linux in the end.

It's not a problem now though as he is using Ethernet over Power and it's

working miles better in both Windows and Linux.

[[[[Ben]]]] - Hmm, strange. Netgear and Intel are the only network hardware with which

I haven't had any problems - whatever the OS. Well, different folks, different

experiences...

[[[Saeed]]] - I would have to agree with Kapil here. Yes, the configuration process

is sometimes extremely difficult. As Benjamin portrayed it... it seems pretty

easy, and in theory it is. But when done in practice it is not that straightforward.

The main problem is that the drivers available are for different chipsets. The

vendors do not care about the chipsets and change the chipsets without changing

the product ID. It happened in our case with the WMP11, if I am remembering correctly.

Obviously, once you get the correct set of drivers, kernel and chipset, it

is straightforward.

The lab setup that we did at UET LAHORE required the cards to work in ad-hoc

mode. We used the madwifi drivers. Now, as you may know, there is a beacon problem

in the madwifi drivers and the ad-hoc mode itself does not work reliably. The

mode that we implemented was Ad-hoc mode Cluster Head Routing. In simple words,

it meant that one of the PCs was configured to be in Master mode and there

were a bunch of PCs around. It would have been really cool if we could have got it

to work in 'pure ad-hoc' mode; nevertheless, it served the lab's purposes.

[[[Jason]]] - Huh. Weird. I've got an ad-hoc network set up with my PC and

my sister's laptop, and it "just works". The network card in my PC

is a Netgear PCI MA311, with the "Prism 2.5" chipset. ("orinoco_pci"

is the name of the driver module.)

:r!lspci | grep -i prism

0000:00:0e.0 Network controller: Intersil Corporation Prism 2.5 Wavelan chipset (rev 01)

The stanza in /etc/network/interfaces is:

auto eth1

iface eth1 inet static

address 10.42.42.1

netmask 255.255.255.0

wireless-mode ad-hoc

wireless-essid jason

wireless-key XXXX-XXXX-XXXX-XXXX-XXXX-XXXX-XX

FWIW, the commands I was using to bring up the interface before I switched

to Debian were:

/sbin/ifconfig eth1 10.42.42.1 netmask 255.255.255.0

/usr/sbin/iwconfig eth1 mode Ad-Hoc

/usr/sbin/iwconfig eth1 essid "jason"

/usr/sbin/iwconfig eth1 key "XXXX-XXXX-XXXX-XXXX-XXXX-XXXX-XX"

I have dnsmasq providing DHCP and DNS. The laptop is running Windows 2000

with some off-brand PCMCIA wifi card.

[[[[Kapil]]]] - I get it. While the link-layer can be setup in ad-hoc mode

by the cards using some WEP/WPA mechanism, there has to be some other mechanism

to fix the IP addresses+netmasks. For example, one could use static IP addresses

of each of the machines as Fahad Saeed (or Jason) has done. Of course, in a

server-less network one doesn't want one machine running a DHCP server. Alles

ist klar.

I will try this out the next time I have access to two laptops...
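
The netmask question comes down to whether both ends of the link land in the same IP subnet. The sketch below checks that in plain shell arithmetic. The address 10.42.42.1 and the netmask 255.255.255.0 are taken from Jason's stanza; 10.42.42.2 and 10.42.43.2 are hypothetical peer addresses, and the function names are made up for this example.

```shell
# Convert a dotted-quad IPv4 address (e.g. 10.42.42.1) to one integer.
# The body runs in a subshell, so the IFS change does not leak out.
ip_to_int() (
    IFS=.
    set -- $1          # split the address on dots into $1..$4
    echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
)

# same_subnet ADDR1 ADDR2 NETMASK
# Succeeds when both addresses have the same network part.
same_subnet() (
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ $(( $a & $m )) -eq $(( $b & $m )) ]
)

for peer in 10.42.42.2 10.42.43.2; do
    if same_subnet 10.42.42.1 "$peer" 255.255.255.0; then
        echo "$peer: same subnet"
    else
        echo "$peer: different subnet"
    fi
done
```

Two machines with static addresses that pass this check should be able to reach each other directly once the ad-hoc link itself is up.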

[[Saeed]] - First of all I am sorry about the HTML format thing.

Dear Kapil, what is your card's chipset type and what drivers did you use to

configure it with Linux?

[[[Kapil]]] - The card is the Intel one and it works with the driver ipw2200

that is built into the default kernel along with the firmware from the Intel

site.

In fact, I have had absolutely no complaints about getting the card to work

with wireless access stations with/without encryption and/or authentication.

But all these modes are "Managed" modes.

I once tried to get the laptop to communicate with another laptop (a Mac)

in Ad-Hoc mode and was entirely unsuccessful. So when you said that you managed

to get an Ad-Hoc network configured for your Lab, I thought you had used the

cards in Ad-Hoc mode.

However, from your previous mail it appears that you configured one card

in "Master" mode and the remaining cards in "Managed"

mode, so that is somehow different. In particular, I don't think the card

I have can be set up in "Master" mode at all.

[[[[Saeed]]]] - You got it right; we did use the master mode type in the lab

for demonstration purposes because it was more reliable than the pure 'ad-hoc'

mode and it served the lab purposes. However, we did use the ad-hoc mode in the

lab and it worked fine but wasn't reliable enough for lab purposes... which

have to work reliably at all times.

This page edited and maintained by the Editors of Linux Gazette

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/tanaka-okopnik.jpg)

Kat likes to tell people she's one of the youngest people to have learned

to program using punchcards on a mainframe (back in '83); but the truth is

that since then, despite many hours in front of various computer screens,

she's a computer user rather than a computer programmer.

When away from the keyboard, her hands have been found full of knitting

needles, various pens, henna, red-hot welding tools, upholsterer's shears,

and a pneumatic scaler.

Copyright © 2006, Kat Tanaka Okopnik. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 127 of Linux Gazette, June 2006

The Mailbag (page 2)

By Kat Tanaka Okopnik

MAILBAG #2

questions

about discover

J. Bakshi (j.bakshi at icmail.net)

Sat Mar 25 22:06:39 PST 2006

Dear list,

I am using discover 2.0.6 on a Debian sarge (testing) machine with the Linux

2.6.8-2-k7 kernel. There is no */etc/discover.conf* file. I can't understand

where discover looks for its default configuration. Please let me know

so that I can change its scanning options. *discover -t modem* and *discover

-t printer* don't detect my modem or printer, BUT hwinfo detects this H/W perfectly.

What may be the problem?

The files presently on my machine are

/usr/bin/discover

/etc/discover.d

/lib/discover

/usr/share/discover

/usr/share/man/man1/discover.1.gz

discover.conf-2.6

discover-modprobe.conf

discover.conf.d

discover-v1.conf

discover.d

What should I do so that discover loads the driver modules automatically

during boot? Kindly resolve my doubts. Please CC me. With best compliments

[Rick] - The Debian systems I have at my disposal at this moment don't have

discover installed, so I'm limited to the information available on-line. However,

searching http://packages.debian.org/ for "discover" turns up a number

of packages including the main "discover" one, providing this list

of contents on i386 sarge (which is _not_ the testing branch, by the way: sarge=stable,

etch=testing -- for quite some time, now):

http://packages.debian.org/cgi-bin/search_contents.pl?searchmode=filelist&word=discover&version=stable&arch=i386

I notice in that list a file called "etc/discover-modprobe.conf".

Perhaps that's what you're looking for? Its manpage (also findable on the

Internet) describes it as "the configuration file for discover-modprobe,

which is responsible for retrieving and loading kernel modules".

> *discover -t modem* or *discover -t printer* don't detect my modem or

> printer BUT hwinfo detect these H/W perfectly. What may be the problem?

Ah, now _that_ is a completely different question. J. Bakshi, you really

would be well advised to be careful of overdefining your problem, when you're

asking for help. If your _real_ problem is that you haven't yet figured out

how to configure Debian-sarge to address your modem and printer, please _say

so_ and give us relevant details.

If, by contrast, you claim the problem is that you need to find discover's

configuration file, _that_ is all you'll get help with, even if your problem

has nothing to do with discover in the first place.

I have a suggestion: Why don't you back up and start over? Tell us about

your modem and printer's nature and configuration, what relevant software

is installed on your system, what is and is not happening, what you've tried,

and exactly what happened when you tried that.

_Note_:

You should not attempt to recall those things from memory, but rather attempt

them again while taking contemporaneous notes. Post information from those

notes, instead of from vague recollections and reconstructions of events in

your memory. Thank you.

[[jbakshi]] - My installed sarge is still in testing mode; that's why I

explicitly mentioned *sarge(testing)*. I have no broadband to upgrade my system.

Dial-up is too poor.

You have automatically answered a question which was asked later

:-) Yes, discover-modprobe automatically loads the kernel module for the discover_detected

H/W.

*cat /etc/discover-modprobe.conf* shows as below

# $Progeny: discover-modprobe.conf 4668 2004-11-30 04:02:26Z licquia $

# Load modules for the following device types. Specify "all"

# to detect all device types.

types="all"

# Don't ever load the foo, bar, or baz modules.

#skip="foo bar baz"

# Lines below this point have been automatically added by

# discover-modprobe(8) to disable the loading of modules that have

# previously crashed the machine:

But I am still looking for the configuration file which defines how the command

*discover* detects buses.

I thought this issue was also related to the same discover configuration file,

but now I have the answer. Please see below.

I have an Epson C20SX parallel inkjet printer.

Running *hwinfo --printer* detects my printer correctly. Below is the output

of the command:

14: Parallel 00.0: 10900 Printer

[Created at parallel.153]

Unique ID: ffnC.GEy3qUgdsRD

Parent ID: YMnp.ecK7NLYWZ5D

Hardware Class: printer

Model: "EPSON Stylus C20"

Vendor: "EPSON"

Device: "Stylus C20"

Device File: /dev/lp0

Config Status: cfg=new, avail=yes, need=no, active=unknown

Attached to: #9 (Parallel controller)

but *discover -t printer* returns nothing. I have found the answer. The man

page of discover (version 2.0.6) says that discover scans only ata, pci,

pcmcia, scsi and usb. That's why it can't detect my parallel printer, PS/2

mouse or serial modem.

[[[Rick]]] - [Quoting J. Bakshi (j.bakshi at icmail.net):]

> My installed sarge is still in testing mode

No. It's really not.

[Snip bit where I suggest that you start over with a fresh approach, and

where you don't do that. Oh well. If you decide you want to be helped, please

consider following my advice, instead of ignoring it.]

[[[[jbakshi]]]] -

Please Try to understand

1) I installed Sarge from CD pack. When I did the installation *sarge was

in testing mode THEN*

2) Now sarge has become stable

3) As I don't have broadband and the dialup is also too poor, I couldn't

upgrade my *installed* sarge hence *that installed sarge in my box is still

a testing release*

4) I have no intention to ignore any advice as I need those seriously.

[[[[[Rick]]]]] - [Quoting J. Bakshi (j.bakshi at icmail.net):]

> 2) Now sarge has become stable

I know all this.

[no broadband]

> hence *that installed sarge in my box is still a testing release*

I knew what you were saying, but it was somewhat misleading to refer to

sarge as the testing branch. Irrespective of the state of your system's software

maintenance, it's 2006, and sarge is now the stable branch. Shall we move

on?

> 4) I have no intention to ignore any advice as I need those seriously.

OK, so, when you wish to do that, you'll be starting fresh by describing

relevant aspects of your hardware and system, and saying what actual problem

you're trying to solve, what you tried, what the system did when you tried

various things, etc. You may recall that _that_ was my advice.

I said you weren't electing to follow my advice because you weren't doing

that. So far, you still aren't. But that is still my suggestion. To be more

explicit: You started out by defining your problem as "discover"

configuration -- but there was actually no reason to believe that was the

case. Therefore, you were asking the wrong question. Start over, please.

[Thomas] - I'm jumping in here to say that "discover" is a real PITA.

It's the most annoying package ever, and should die after installation. It conflicts

no end with anything held in /etc/modules, and actively disrupts hotplug for

things like USB devices.

You don't need it for anything post-installation. If you somehow think you

do, you're wrong.

Please

solve a doubt about linux H/W detection

J. Bakshi (j.bakshi at icmail.net)

Wed Mar 29 04:19:05 PST 2006

Dear list,

Please help me to resolve a doubt. In the Linux world, *discover*, *hwinfo* and

*kudzu* are the H/W detection technologies. Do they directly probe the *raw* piece

of H/W to collect information about it? What about lspci, lsusb, lshw? Do

they also provide H/W information by probing the *raw* piece of H/W, or do they just

retrieve information which is already provided by some H/W detection technology

as mentioned above? Kindly CC me.

[Thomas] - Not as such. They tend to query the kernel which itself has "probed"

the devices beforehand.

> lsusb, lshw ?? Do they also provide H/W information by probing the

> *raw* piece of H/W or just retrieve the information which are already

> provided by some H/W detection technology as mentioned above ??

What difference does it make to you if it has or has not? I'll reiterate

once more that you DO NOT need discover at all. In fact, it's considered harmful

[1]

[1] Just by me, for what that's worth.

[[jbakshi]] - Nothing as such, just my interest in knowing those technologies, especially

from those who have already used them and are quite aware of the advantages and disadvantages.

Thanks for your response.

[[jbakshi]] - Just found out from the man page: *lspci -H1* or *lspci -H2* do direct

H/W access. *lspci -M* enables bus mapping mode.

[[Kapil]] - In case that makes you feel happier Thomas, I saw a mail (which

I can't find right now) that indicates debian-installer team is in agreement

with you. However, the replacement "udev/hotplug/coldplug" may also

make you unhappy :)

[[[Thomas]]] - Ugh. :) Udev is a fad that perpetuates the dick-waving wars

of "Oooh, look at me, I only have three entries in /dev. You have 50000,

so mine's much better". It has absolutely *no* real advantage over a static

/dev tree whatsoever.

[[[[Pedro]]]] - What about the symlink magic explained here

(http://reactivated.net/writing_udev_rules.html)?

To hotplug different scanners, digital cameras or other usb devices in no

particular order, and make them appear always in the same known point inside

the filesystem, looks like a good feature. How would that be done without

udev?

[[[[[Thomas]]]]] - You can use a shell-script to do it. I did something similar

a few years ago.

[[[[Ben]]]] - Are you sure, Thomas? Not that I'm a huge fan of Udev - it smacks

too much of magic and unnecessary complexity - but I like the idea of devices

being created as they become necessary. Expecting some Linux newbie to know

enough to create '/dev/sr*' or '/dev/audio' when the relevant piece of software

throws a cryptic message is unreasonable. Having them created on the fly could

eliminate a subset of problems.

[[[[[Thomas]]]]] - Well, pretty much. At least you have some kind of assurance

that the device "exists" whether it's actually attached to some form

of physical device or not. Plus, once the permissions are set on that device

and you have the various group ownerships sorted out... it's trivial.

I realise Udev does all of this as well, but you have to have various node

mappings and layered complexity not observed with a static /dev tree. Then

there's all sorts of shenanigans with things like initrds and the like for

some kernels. It's just not worth the effort to effectively allow a "ls

/dev" listing to show up without the use of a pager. ;)

[[[[Ben]]]] - Well, actually, now that I'm thinking about it - so could a '/dev'

that's pre-stuffed with 50,000 entries. Hmm, I wonder why that's not the default

- since, theoretically, these things take no space other than their own inode

entry.

OS/X - "Just like

real BSD"

The following originally came under the thread "Please solve a doubt about

linux H/W detection". Given the drift, I've renamed this subthread for benefit of findability.

OBTW - I would like to get my hands around the throat of the person

who decided that '/dev/dsp' and '/dev/mixer' are unnecessary in OS/X. Really,

it would only be for a few moments... is that too much to ask?

(Installing X11+xmms on the latest OS/X is a HUGE PAIN IN THE ASS,

for anyone who's interested.)

[Thomas] - That's not the only thing that's unnecessary in OS/X from what I

hear. :)

[[Ben]] - [rolling eyes] Don't get me started. "Just like real BSD"...

yeah, *RIGHT*.

At least they have Perl, Python, ssh, tar, and find (well, some freakin'

weird version of 'find', but at least it's there) pre-installed. That part

keeps me sane when dealing with Macs. Last night, a friend of mine who uses

one asked my opinion of various $EXPENSIVE_BACKUP_PROGRAMS for his Mac. My

response was "why would you want to actually spend *money* on this? You

have 'tar', 'cpio', and 'pax' already installed." Made him happy as a

lark; 'tar cvzf' was right up his alley even though he'd never so much as

seen an xterm before.
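
For anyone who hasn't used tar this way, a minimal sketch of the kind of backup described above follows. The directory and file names are throwaway examples created with mktemp, not anything from the thread, and the v (verbose) flag is left out to keep the output short.

```shell
# Hypothetical source directory and archive location, created only for
# this demonstration.
src=$(mktemp -d)
archive="$(mktemp -d)/backup.tar.gz"
echo "hello" > "$src/notes.txt"

# c = create, z = compress with gzip, f = write to the named archive file.
# -C makes tar change into the source directory before adding ".".
tar -czf "$archive" -C "$src" .

# t = list the contents, a quick check that the archive is readable.
tar -tzf "$archive"
```

Restoring is the same command with x in place of c, run from (or pointed with -C at) the directory where the files should land.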

[[[Kapil]]] - There is also "fink" which (in conjunction with "xterm")

is a lifesaver when it comes to turning a Mac into something almost normal.

(For those not in the know---"fink" is "apt" for OS/X which

actually uses a .deb packaging format as well).

This alone makes OS/X far, far better than its predecessors. A sample of an earlier

conversation with a typical Mac user whose computer I wished to use for a brief

while.

[[[[Ben]]]] - Well... more or less. As in "more broken or less useful".

You get a subset of the APT utilities for the command line, as well as Fink

Commander which is a GUI; "apt-get", or at least the repository behind

it, is badly broken since at least a quarter (IME) of the "available"

packages have unsatisfiable dependencies. It also throws persistent errors (something

like "/usr/foo/bar/zotz/cc1plus not executable") which can't be fixed

by installing any of the available software. As well, "apt-cache search"

provides a meager subset of the listings shown at Fink.org - e.g., there are

several dozen OpenOffice packages shown at the latter, whereas the former has

never heard of OO.

Fink Commander is even more "wonderful": when you hit the 'Upgrade

Fink Commander' button, it breaks itself _and_ the entire APT kit due to a

stupid interface error (the CLI app that it's wrapping blocks on a question

- you can see it in the dialog area - and there's no way to feed its STDIN;

all you can do is kill the GUI, which interrupts the process half-way through

installation.) To fix it, you have to remove the '/sw' directory - which contains

*all the software you ever installed with Fink* - and start from scratch.

That's the recommended procedure. Cute, eh?

Building a compiler toolchain on a Mac with Fink, BTW, is impossible. They've

got GCC - both 3.3 and 4.0 - but literally almost *nothing* else that's required.

In short, pretty bloody awful. It kinda smells like *nix, even sorta resembles

it... but falls way short when there's any real work to be done.

[[[Kapil]]]

Me: I'd just like a terminal window.

Mac user: What's that?

Me: A window where you type commands.

Mac user: What are commands?

Me: Things you tell the computer to do.

Mac user: You can actually tell the computer to do things? The Mac doesn't

work that way. You point and click and it guesses what you want to do and

just goes ahead and does it.

At this point each of us walks off in disgust+surprise at having met an alien

right here on earth.

[[[[Ben]]]] - Don't even bother asking; they won't have any idea. Just hit

'Apple-F' in Finder, type 'Terminal', click on the icon, and do your work as

they sit there in utter bafflement. :)

[[[[[Breen]]]]] - Don't forget to drag Terminal.app to the Dock so that it's

there when you need to fix something the next time...

Viewing homestead.com web

pages

Bob van der Poel (bvdp at uniserve.com)

Sun Feb 19 12:51:53 PST 2006

Just wondering if there is a simple (complex? Any?) solution to viewing

certain websites which rely way too much on absolute positioning code? Most

sites on www.homestead.com are quite un-viewable on my system with Firefox.

They render somewhat better with Opera.

I think the problem is one of expected font and font size. I use a

fairly large min. size ... but don't know if this is the problem or not.

Here's an example of something completely impossible to read: http://www.yahkkingsgate.homestead.com/

using Firefox. Again, it fares better (but still pretty ugly) in Opera. I don't

have a windows box handy, but am assuming that it renders okay using IE.

[Lew] - For what it's worth, I tried several sites from the "site sampler"

on www.homestead.com, and didn't find any readability (or operability) issues

with Firefox 1.5.0.1 running in Slackware Linux 10.1

From my Firefox Preferences window, my

Default Font -> Bitstream Vera Serif (size 12)

Proportional -> Serif (size 12)

Serif -> Bitstream Vera Serif

Sans Serif -> Bitstream Vera Sans

Monospace -> Bitstream Vera Sans Mono (size 12)

Display Resolution -> 96 dpi

Minimum Font size -> 12

"Allow pages to choose their own fonts" checked

Default Character Encoding -> Western (ISO-8859-1)

(http://www.yahkkingsgate.homestead.com/) Renders well for me, same settings

as above.

[[Bob]] - Thanks for taking time to have a look. I'm wondering if I'm missing

some fonts for firefox? I'm using the same firefox version. My font settings

were different, but changing them to what you have doesn't seem to make much

difference. Certainly, my min. is much larger ... 16 as opposed to your 12.

I'm using a 19" monitor ... and the 12 is completely unreadable.

In my /etc/X11/fs/config I have the following:

#

# Default font server configuration file for Mandrake Linux workstation

#

# allow a max of 10 clients to connect to this font server

client-limit = 10

# when a font server reaches its limit, start up a new one

clone-self = on

# alternate font servers for clients to use

#alternate-servers = foo:7101,bar:7102

# where to look for fonts

#

catalogue = /usr/X11R6/lib/X11/fonts/misc:unscaled,

/usr/X11R6/lib/X11/fonts/drakfont,

/usr/X11R6/lib/X11/fonts/drakfont/Type1,

/usr/X11R6/lib/X11/fonts/drakfont/ttf,

/usr/X11R6/lib/X11/fonts/100dpi:unscaled,

/usr/X11R6/lib/X11/fonts/75dpi:unscaled,

/usr/X11R6/lib/X11/fonts/Type1,

/usr/X11R6/lib/X11/fonts/TTF,

/usr/X11R6/lib/X11/fonts/Speedo,

/usr/share/fonts/default/Type1,

/usr/share/fonts/default/Type1/adobestd35,

/usr/share/fonts/ttf/decoratives,

/usr/share/fonts/ttf/dejavu,

/usr/share/fonts/ttf/western

# in 12 points, decipoints

default-point-size = 120

# 100 x 100 and 75 x 75

default-resolutions = 100,100,75,75

# use lazy loading on 16 bit (usually Asian) fonts

deferglyphs = 16

# how to log errors

use-syslog = on

# don't listen to TCP ports by default for security reasons

no-listen = tcp

Does anything here leap out?

[Francis] - Is that because text 1/6th of an inch high is too small for you

to read, or because 12pt-text on your display is not 1/6th of an inch high?

If the latter, you may see some benefit from telling your X how many dots-per-inch

your screen actually uses. For example, a 19" diagonal on a 4:3 aspect

ratio monitor (a TFT screen, for example) means the horizontal size is about

15.2 inches; if you display at 1600x1200 pixels, that's about 105 pixels per

inch.

If X then believes you're displaying at 100 pixels per inch, everything measured

in inches will be about 5% smaller than it should be. For text at the edge

of legibility, that's enough to push it over.
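
The figures above can be reproduced with a short calculation. The sketch below is an illustration only: the function name ppi is made up, and it assumes a flat screen whose diagonal and aspect ratio are known exactly.

```shell
# ppi DIAGONAL_INCHES ASPECT_W ASPECT_H HORIZONTAL_PIXELS
# Prints the horizontal pixels per inch, to one decimal place.
ppi() {
    awk -v d="$1" -v aw="$2" -v ah="$3" -v px="$4" 'BEGIN {
        # Physical width follows from the diagonal and the aspect ratio.
        width = d * aw / sqrt(aw * aw + ah * ah)
        printf "%.1f\n", px / width
    }'
}

# 19" diagonal, 4:3 aspect ratio, 1600 pixels across.
ppi 19 4 3 1600    # prints 105.3
```

If X is told 100 dpi while the screen really has about 105, anything sized in inches or points comes out roughly 5% smaller than intended.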

[[Ben]] - Not only that, it'll usually result in a bad case of the jaggies

(aliasing) in text when X tries to interpolate that fractional size difference.

This definitely _will_ make marginally-legible text unreadable.

[[Bob]] - Well, probably a bit of both. I can not read 1/6" lettering

anymore. Used to ... but that was when I was younger can could do a lot of things

I can't now. Of course, I could break down and get glasses to see the screen,

but I'm resisting. I already need to put on cheaters when I read a book, etc

(mind you, I seem to manage not too badly in bright light). Ahh, the joys of

aging. But as someone told me: aging beats the alternatives.

[Francis] - "xdpyinfo" will tell you what X thinks the current dimensions

and resolution are. If they don't match what your ruler tells you, you should

consider reconfiguring.
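
As a rough sketch of that comparison: the snippet below pulls the pixel and millimetre dimensions out of text in the shape "xdpyinfo" usually prints and compares the width with a ruler measurement. The sample text and the 386 mm measurement are made-up illustrations (386 mm is about 15.2 inches), and the helper names are hypothetical; on a real system you would feed in the actual output of "xdpyinfo".

```python
import re

# Illustrative sample only; real text comes from running `xdpyinfo`.
SAMPLE = """\
  dimensions:    1600x1200 pixels (406x305 millimeters)
  resolution:    100x100 dots per inch
"""

def x_reported_size(xdpyinfo_text):
    """Return ((width_px, height_px), (width_mm, height_mm)) as X reports them."""
    m = re.search(
        r"dimensions:\s+(\d+)x(\d+) pixels \((\d+)x(\d+) millimeters\)",
        xdpyinfo_text,
    )
    if m is None:
        raise ValueError("no 'dimensions:' line found")
    w_px, h_px, w_mm, h_mm = map(int, m.groups())
    return (w_px, h_px), (w_mm, h_mm)

def mismatch_percent(reported_mm, measured_mm):
    """How far X's idea of the width is from the ruler's, in percent."""
    return (reported_mm - measured_mm) / measured_mm * 100

_, (reported_width_mm, _) = x_reported_size(SAMPLE)
measured_width_mm = 386  # hypothetical ruler measurement of the visible area

print(f"X thinks {reported_width_mm} mm, ruler says {measured_width_mm} mm "
      f"({mismatch_percent(reported_width_mm, measured_width_mm):+.1f}%)")
```

A mismatch of a few percent is the situation described above, where X's notion of an inch no longer matches a real one.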

[[Ben]] - [Nod] If there's one thing I've learned from playing around with

all the X resolution configuration options, it's exactly that. As a testimonial

from the positive side of things, once I got X resolution properly synched up

with the actual screen size, I was able to switch to 1280x1024 without any problems.

Previously, that mode produced unreadable text in many applications.

(Yes, I'm aware that I could tweak ~/.gtkrc, etc. I found it ridiculous that

I would have to - and, once I got X configured correctly, I didn't have to.

I like for the world to make sense, at least sometimes. :)

[[[Bob]]] - And I learn that the interface between user programs and X is hopelessly

broken. Now, we are not going to fix that little issue :)

I have discovered one thing in Firefox: in the menu bar there is a <view><page

style> which (I think) lets you disable the css stuff (not clear, but I

think that is what it does). At least, doing that I can get rid of all overlaying

text.

But, the interesting thing is that no matter what I do the page I was trying

to view presents itself with overlaying text. If I turn off the min. text

size completely I can get text too small for any mortal to read, but it still

overlays. I've also installed additional fonts (namely some msfonts collection),

but that doesn't seem to make any difference.

Oh well, perhaps we should just let ugly find its own home :)

[Francis] - If the problem is broken absolute positioning code, the quick answer

is to use a browser which ignores absolute positioning code.

I don't see anything obvious in the firefox about:config page to disable

that bit of their rendering or layout engine, though. If you accept that (a)

you will see their content in a layout they did not expect or desire (which

is already the case); and (b) you *will* see their content (which is not already

the case), use a browser with CSS support which tries to be perfect at the

"ignore it completely" end of the scale, rather than any which try

to get to the "implement it all correctly" end. I'll mention w3m

and dillo, but I'm sure you'll be able to find one that suits you. (These

other browsers do have their own quirks and configuration requirements, so

depending on how your distribution set things up, you may have some testing

and learning to do before things work the way you want them to. And it's also

possible that things *won't* work the way you want them to, no matter what.)

w3m -- the content seems all there, but the links are a bit tough to follow

(because of the design choices they made: <a href=link><img alt=""

src=img></a> doesn't leave much clickable space for the link).

dillo -- the content seems all there, and the images seem all there. Just

not both where the designer might have hoped. Keep scrolling down...

For contrast: my firefox, in my "normal" config -- some text/text

overlap, but nothing major obviously unreadable; with 16pt minimum text --

lots of unreadable bits because of text/text and text/image overlapping.

[Ben] - There's a large number of pages on the Net that are badly broken WRT

layout; however, over time, I've discovered (much to my surprise) that

*other*, less- (or even un) broken pages exist as well. Based on this amazing

fact, I've developed a strategy: as soon as I encounter the former, I do a bit

of clicking, or even typing, and I'm soon looking at the latter. :)

Seriously - truly bad layout can make a page nearly unusable, which is much

like not having the info in the first place. Given that much of the data on

the Net is available in multiple places, I consider finding an alternate site

- rather than trying to curse my way through

{

font: 3px bold italic "Bloody Unreadable";

text-background: bright-white;

color: white;

}

that's half-hidden behind a blinking yellow "THIS SITE UNDER CONSTRUCTION!!!"

GIF - to be a perfectly valid strategy, unless the info on that specific site

is truly unique. For me, it saves lots of wear and tear - and time.

The above may seem obvious as hell to some, but lots and lots of people tend

to get hyperfocused on Must Fix This Problem instead of looking at alternatives

- I've done it myself, lots of times, and still catch myself doing it on occasion.

Larger context is something that usually bears consideration.

My own default browsing technique consists of firing off my "google"

script, which invokes "w3m" and points it at Google.com with a properly-constructed

query. I page through the hits that Google provides, search until I find what

I want, and - if I really need a graphical browser to examine the content,

which is only true in about 5% of the cases - I hit '2-shift-m', which fires

up Mozilla and feeds it the current URL (since I've set "Second External

Browser" in 'w3m' to 'mozilla'.) I very, *very* rarely have to deal with

really bad layout since text mode prevents many problems by its very nature.
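
Ben's actual "google" script is not shown in this thread, so the following is only a guess at the general idea: escape the search terms into a query URL and hand it to "w3m". The function names and the exact URL layout are assumptions for illustration.

```python
import subprocess
from urllib.parse import urlencode

def google_url(terms):
    """Build a search URL with the query terms properly escaped."""
    return "https://www.google.com/search?" + urlencode({"q": " ".join(terms)})

def search_in_w3m(terms):
    """Open the results in w3m; requires w3m to be installed and on the PATH."""
    subprocess.run(["w3m", google_url(terms)], check=False)

# Building the URL has no side effects, so it is safe to try anywhere.
print(google_url(["fvwm", "styles"]))
```

Calling search_in_w3m(["fvwm", "styles"]) from a terminal would then start the text-mode session; the hand-off to a graphical browser is configured inside 'w3m' itself, as described above.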

(If the page really *is* unique, _and_ is badly broken, _and_ you really

need to view it in a graphical browser, _and_ you have to keep going back

there, Mozilla and Firefox have a plugin that allows you to "edit"

the HTML of a given site and remembers your edits locally. Whenever you go

to that page, your edits are auto-applied. Unfortunately, I don't recall the

name of the plugin... Jimmy, I think I learned about it from you. Do you recall

the name?)

[[Jimmy]] - Mozilla and Firefox let you override the CSS for any page you want

(user.css IIRC); there's also Greasemonkey for Firefox, which lets you

run your own javascript on any page.

Recently, I came across something that allows you to edit a web page and

save your edits as a Greasemonkey script: I'll pass on the link as soon as

I can dig it up.

[[[Predrag]]] - Platypus (http://platypus.mozdev.org)?

I also like Web Developer (http://www.chrispederick.com/work/webdeveloper/)

[[[[Jimmy]]]] - That's it!

[Ben] -

> > I think the problem is one of expected font and font size. I use a

> > fairly large min. size ... but don't know if this is the problem or not.

It would often be a problem, yes. Many sites are laid out so that there's

no room for the expansion necessary to accommodate larger text.

( http://www.yahkkingsgate.homestead.com/) Looks totally fine in 'w3m'. Oh,

and if I want to view it in a larger font, I simply Ctrl-right-click my xterm

and set the fontsize to 'Large', or even 'Huge'.

This is why I prefer to use the 'Tab' key in 'w3m' rather than clicking the

links: it never misses, no matter how small the link. :)

[[[Bob]]] - Yes, reformatting and rewriting broken code is always an option

:)

But, really, the "problem" is that a guy using a cheap (relatively)

out-of-the-box windows system can view these sites just fine (I'm assuming

here since I have not tried it). And, one fellow here says it views fine with

his Firefox. So, I have to assume that something on my setup is buggering

up things.

I get lots of overlays when viewing the site. Nope, not the end of the world

by any means. But, this is not the only one and I guess I was in an easily-annoyed

mood yesterday :)

Since starting this thread I have added extra TT fonts, played with my resolutions

and the font settings in firefox. Gotten some "interesting" results,

but none which fix the problem.

Oh well, life does go on without reading badly formatted html!

[[[Karl-Heinz]]] - Opera also has that option of using user-css -- which means

switching off the regular css and having basically no styling at first. Then

you can switch on some default CSS sheets like high-contrast b/w or w/b,...

This helps on many sites to make them quite readable and usable -- if the site

works without css that is. If they broke the layout so badly that the page (links,...)

won't work unstyled I usually refuse to stay at that site.

And yes: I also have a rather large min. font size and that *does* lead

to trouble when boxes stay fixed but the text gets (much) larger than intended

by the designer. I'm a strong advocate of fluid box layout which adapts to

font changes ;-)

[[[[Bob]]]] - I'm quite convinced that being able to specify absolute positioning

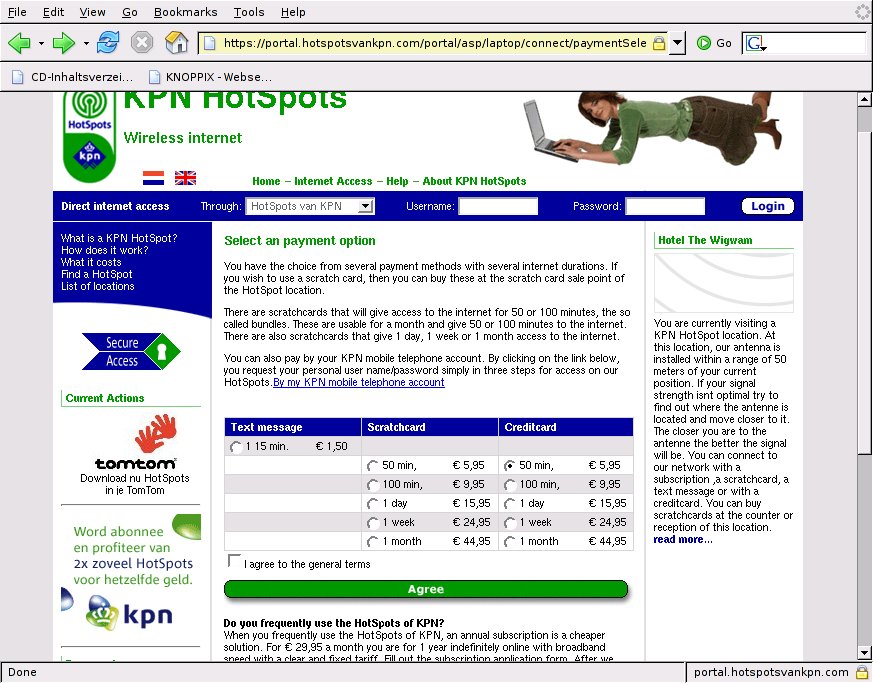

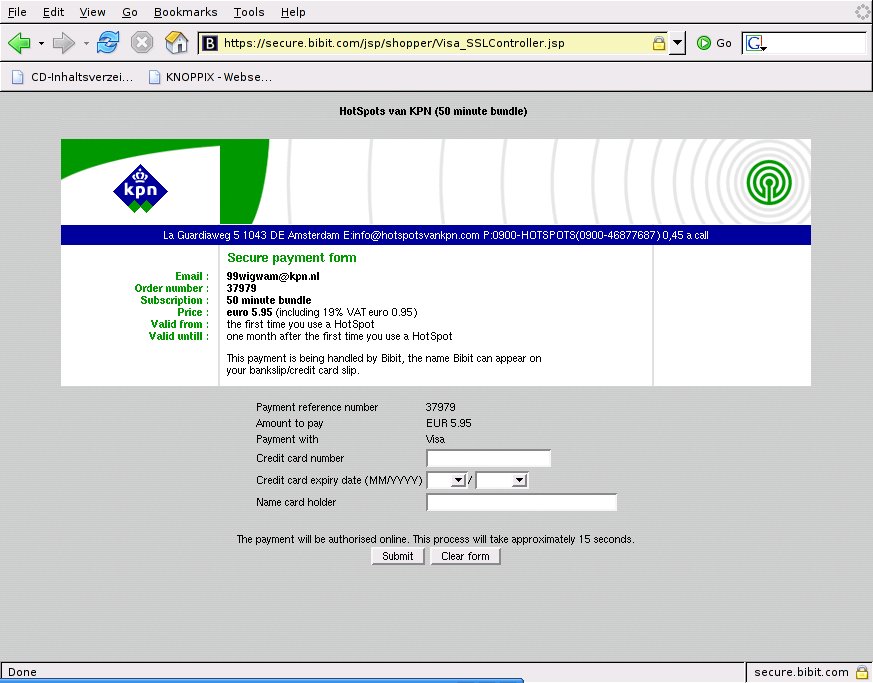

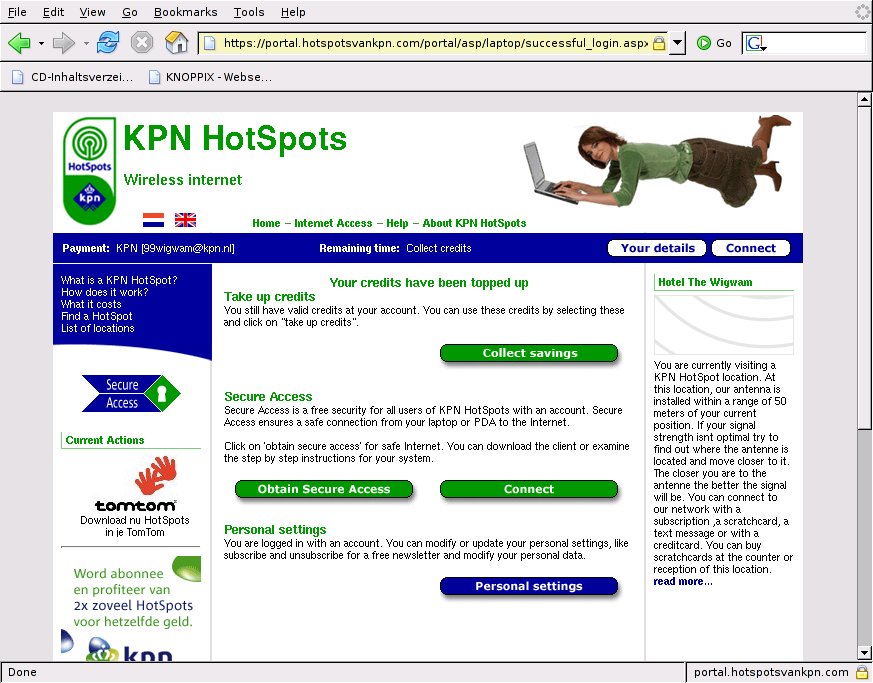

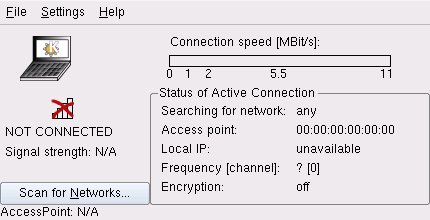

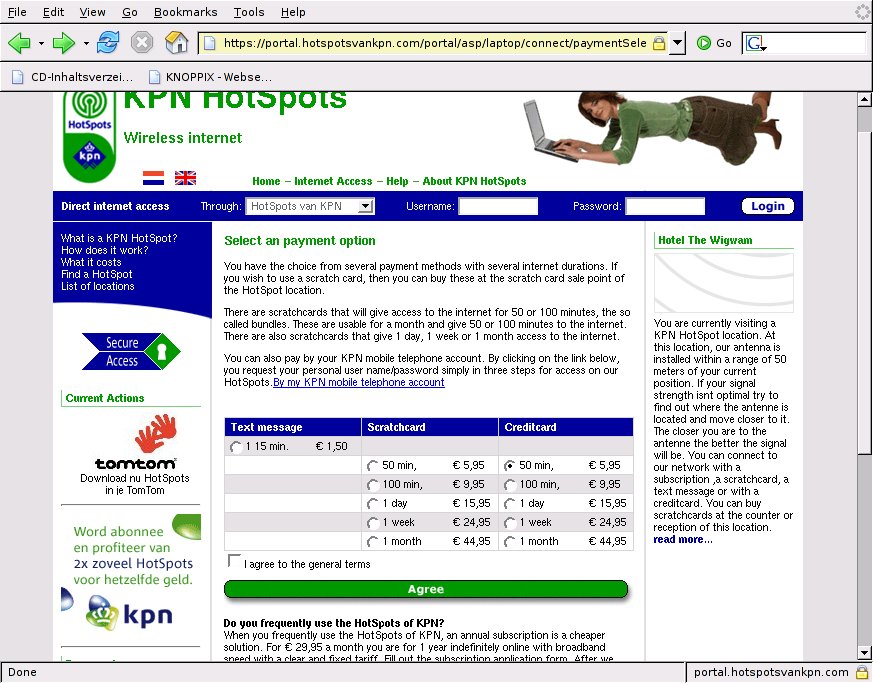

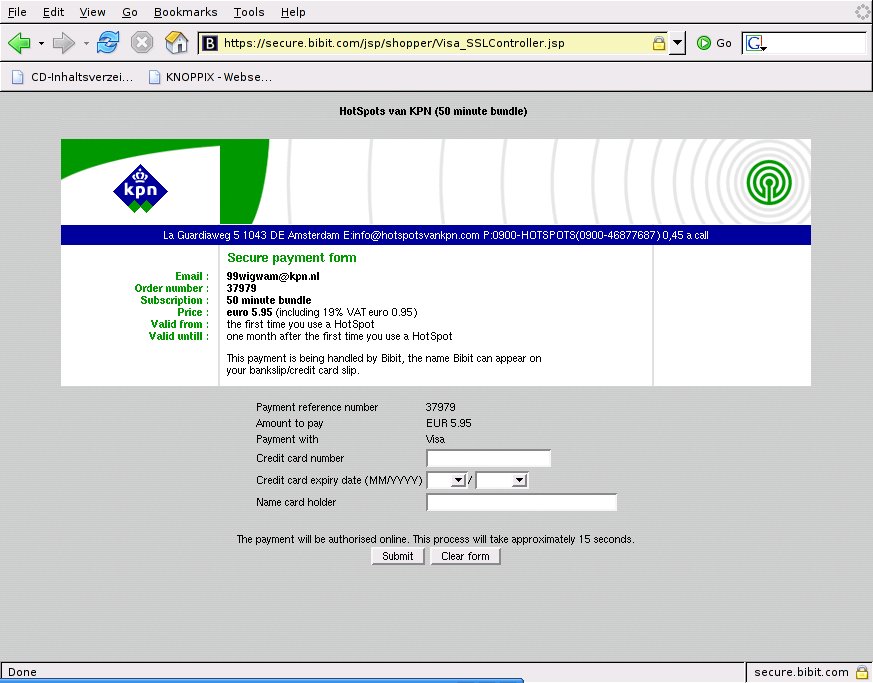

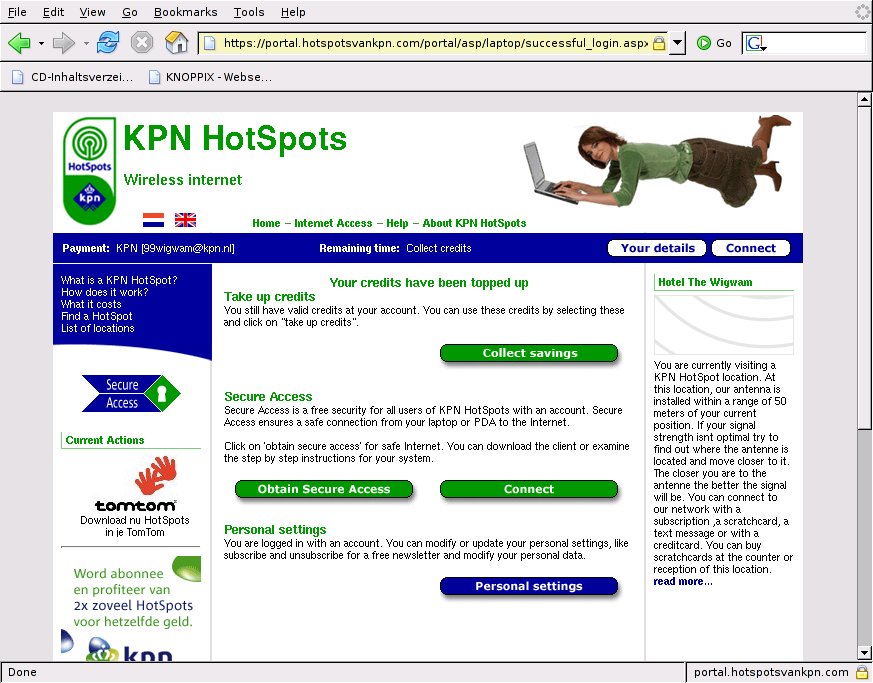

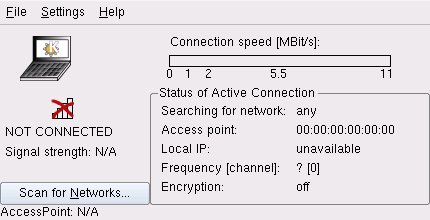

or absolute font size in html is a dumb thing. No idea why people think it